Superconductivity beautifully illustrates the characteristics of emergent properties.

Novelty.

Distinct properties of the superconducting state include zero resistivity, the Meissner effect, and the Josephson effect. The normal metallic state does not exhibit these properties.

At low temperatures, solid tin exhibits the property of superconductivity. However, a single atom of tin is not a superconductor. A small number of tin atoms has an energy gap due to pairing interactions, but not bulk superconductivity.

There is more than one superconducting state of matter. The order parameter may transform as a non-trivial representation of the crystal symmetry group, and it can have spin-singlet or spin-triplet symmetry. Type II superconductors in a magnetic field have an Abrikosov vortex lattice, another distinct state of matter.

Unpredictability.

Even though the underlying laws describing the interactions between electrons in a crystal have been known for about one hundred years, superconductivity in many specific materials was not predicted before it was discovered. Even after the BCS theory was worked out in 1957, the discovery of superconductivity in intermetallic compounds, cuprates, organic charge transfer salts, fullerenes, and heavy fermion compounds was not predicted.71

Order and structure.

In the superconducting state, the electrons become ordered in a particular way. The motion of the electrons relative to one another is not independent but correlated. Long-range order is reflected in the generalised rigidity, which is responsible for the zero resistivity. Properties of individual atoms (e.g., NMR chemical shifts) are different in vacuum, metallic state, and superconducting state.

Universality.

Properties of superconductivity such as zero electrical resistance, the expulsion of magnetic fields, quantisation of magnetic flux, and the Josephson effects are universal. The existence and description of these properties are independent of the chemical and structural details of the material in which the superconductivity is observed. This is why the Ginzburg-Landau theory works so well. In BCS theory, the temperature dependences of thermodynamic and transport properties are given by universal functions of T/Tc where Tc is the transition temperature. Experimental data is consistent with this for a wide range of superconducting materials, particularly elemental metals for which the electron-phonon coupling is weak.
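The universal dependence on T/Tc can be made concrete with a minimal sketch. It assumes the commonly used weak-coupling interpolation formula Delta(T)/Delta(0) ≈ tanh(1.74 sqrt(Tc/T - 1)), which approximates the numerical solution of the BCS gap equation and is not taken from the text above. The function name `reduced_gap` and the two Tc values are hypothetical, chosen only for illustration.

```python
import math

def reduced_gap(t):
    """Approximate Delta(T)/Delta(0) for reduced temperature t = T/Tc.

    Assumption: weak-coupling interpolation formula, not an exact
    solution of the BCS gap equation.
    """
    if t <= 0.0:
        return 1.0  # full gap at zero temperature
    if t >= 1.0:
        return 0.0  # gap closes at and above Tc
    return math.tanh(1.74 * math.sqrt(1.0 / t - 1.0))

# Two hypothetical materials with very different Tc (in kelvin).
for tc in (1.0, 10.0):
    temperatures = [0.25 * tc, 0.5 * tc, 0.9 * tc]
    gaps = [round(reduced_gap(T / tc), 3) for T in temperatures]
    print(f"Tc = {tc} K: Delta(T)/Delta(0) at T/Tc = 0.25, 0.5, 0.9 -> {gaps}")
```

Both materials produce the same list, because the material enters only through the ratio T/Tc. Strong-coupling materials would be expected to deviate from this curve.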

Modularity at the mesoscale.

Emergent entities include Cooper pairs and vortices. There are two associated emergent length scales, typically much larger than the microscopic scales defined by the interatomic spacing or the Fermi wavelength of electrons. The coherence length is associated with the energy cost of spatial variations in the order parameter. It defines the extent of the proximity effect, where the surface of a non-superconducting metal can become superconducting when it is in electrical contact with a superconductor. The coherence length turns out to be of the order of the size of Cooper pairs in BCS theory. The second length scale is the magnetic penetration depth (also known as the London length), which determines how far an external magnetic field can penetrate the surface of a superconductor. It is determined by the superfluid density. The relative size of the coherence length and the penetration depth determines whether an Abrikosov vortex lattice is stable in a large enough magnetic field.
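The comparison of the two length scales is usually expressed through the Ginzburg-Landau parameter kappa, the ratio of penetration depth to coherence length. In Ginzburg-Landau theory a vortex lattice is stable (type II behaviour) when kappa exceeds 1/sqrt(2). The sketch below assumes that criterion; the function name and the numerical lengths are hypothetical and do not describe any particular material.

```python
import math

def classify_superconductor(coherence_length, penetration_depth):
    """Return (kappa, type) from the two emergent length scales.

    Both lengths must be in the same units. Assumes the
    Ginzburg-Landau criterion kappa > 1/sqrt(2) for type II.
    """
    kappa = penetration_depth / coherence_length
    kind = "type II" if kappa > 1.0 / math.sqrt(2.0) else "type I"
    return kappa, kind

# Hypothetical lengths in nanometres, for illustration only.
print(classify_superconductor(coherence_length=100.0, penetration_depth=10.0))
print(classify_superconductor(coherence_length=2.0, penetration_depth=200.0))
```

A long coherence length relative to the penetration depth gives type I behaviour, and the reverse gives type II.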

Quasiparticles.

The elementary excitations are Bogoliubov quasiparticles that are qualitatively different to particle and hole excitations in a normal metal. They are a coherent superposition of a particle and hole excitation (relative to the Fermi sea), have zero charge and only exist above the energy gap. The mixed particle-hole character of the quasiparticles is reflected in the phenomenon of Andreev reflection.
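The particle-hole mixing can be sketched with the standard BCS expressions, taken here as an assumption and not from the text above. For a normal-state energy xi measured from the Fermi energy and a gap Delta, the quasiparticle energy is E = sqrt(xi^2 + Delta^2), with particle weight u^2 = (1 + xi/E)/2 and hole weight v^2 = (1 - xi/E)/2. The function name `bogoliubov` is hypothetical.

```python
import math

def bogoliubov(xi, delta):
    """Return (E, u2, v2) for normal-state energy xi and gap delta > 0.

    E is the quasiparticle energy; u2 and v2 are the particle and hole
    weights of the superposition (standard BCS forms, assumed here).
    """
    energy = math.sqrt(xi**2 + delta**2)
    u2 = 0.5 * (1.0 + xi / energy)
    v2 = 0.5 * (1.0 - xi / energy)
    return energy, u2, v2

# Energies in units of the gap: at the Fermi surface and away from it.
for xi in (0.0, 1.0, 5.0):
    energy, u2, v2 = bogoliubov(xi, delta=1.0)
    print(f"xi = {xi}: E = {energy:.3f}, u^2 = {u2:.3f}, v^2 = {v2:.3f}")
```

At xi = 0 the excitation costs exactly Delta and is an equal mixture of particle and hole, so u^2 - v^2 vanishes there. That is one way to read the statement about charge. Far from the Fermi surface the excitation becomes almost purely particle-like.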

Singularities.

Superconductivity is a non-perturbative phenomenon. In BCS theory, the transition temperature, Tc, and the excitation energy gap are non-analytic functions of the electron-phonon coupling constant $\lambda$: $T_c \sim \exp(-1/\lambda)$.
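A short numerical sketch shows why this dependence is out of reach of perturbation theory. As the coupling goes to zero, exp(-1/lambda) vanishes faster than any power of lambda, so every coefficient of a Taylor expansion about zero coupling is zero. The function name and the sample coupling values are hypothetical.

```python
import math

def essential_singularity_ratio(lam, n):
    """Return exp(-1/lam) / lam**n for coupling lam > 0 and integer power n."""
    return math.exp(-1.0 / lam) / lam**n

# The ratio shrinks towards zero as lam decreases, for each fixed power n.
for lam in (0.1, 0.05, 0.02, 0.01):
    ratios = [essential_singularity_ratio(lam, n) for n in (1, 2, 5)]
    print(lam, ["%.3e" % r for r in ratios])
```

For a fixed power n the ratio is largest at lam = 1/n and decreases monotonically below that, which is why the sample couplings are all chosen smaller than 1/5.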

A singular structure is also evident in the properties of the current-current correlation function. The limits of zero wavevector and zero frequency do not commute, and this is intimately connected with the non-zero superfluid density.

Effective theories.

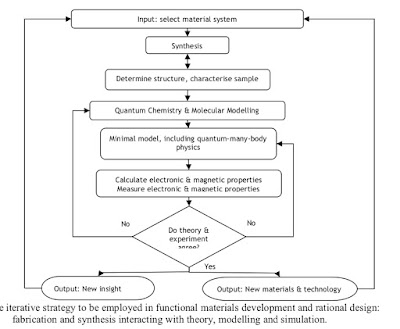

These effective theories are illustrated in the figure above. The many-particle Schrödinger equation describes electrons and atomic nuclei interacting with one another. Many-body theory can be used to justify treating the electrons as a jellium liquid of fermions that do not interact directly with one another but do interact with phonons. Bardeen, Pines, and Fröhlich showed that for that system there is an effective interaction between fermions that is attractive. The BCS theory includes a truncated version of this attractive interaction. Gorkov showed that Ginzburg-Landau theory could be derived from BCS theory. The London equations can be derived from Ginzburg-Landau theory. The Josephson equations include only the phase of the order parameter to describe a pair of coupled superconductors.

The historical development of these theories mostly went downwards: London preceded Ginzburg-Landau, which preceded BCS theory. Today, for specific materials where superconductivity is known to be due to electron-phonon coupling and the electron gas is weakly correlated, one can work upwards using computational methods such as Density Functional Theory (DFT) for Superconductors or Eliashberg theory with input parameters calculated from DFT-based methods. However, in practice this has had debatable success. The calculated superconducting transition temperatures typically vary with the approximations used in the DFT, such as the choice of functional and basis set, and often differ from experimental results by of order 50 percent. This illustrates how hard prediction is for emergent phenomena.

Potential and pitfalls of mean-field theory.

Mean-field approximations and theories can provide a useful guide as to what emergent properties are possible and a starting point for mapping out properties such as phase diagrams. For some systems and properties they work incredibly well; for others they fail spectacularly and are misleading.

Ginzburg-Landau theory and BCS theory are both mean-field theories. For three-dimensional superconductors they work extremely well. However, in two dimensions they break down, because long-range order and the breaking of a continuous symmetry cannot occur at non-zero temperature, and the physics associated with the Berezinskii-Kosterlitz-Thouless transition takes over. Nevertheless, Ginzburg-Landau theory provides the background needed to justify the XY model and the presence of vortices, which are the starting point for describing that transition. Similarly, the BCS theory fails for strongly correlated electron systems, but a version of the BCS theory does give a surprisingly good description of the superconducting state.

Cross-fertilisation of fields.

Concepts and methods developed for the theory of superconductivity bore fruit in other sub-fields of physics, including nuclear physics, elementary particle physics, and astrophysics. For matter fields (associated with the electrons) coupled to electromagnetic fields (a U(1) gauge theory), the matter fields can be integrated out to give a theory in which the photon has mass. This is a perspective on the Meissner effect, in which the magnitude of an external magnetic field decays exponentially as it penetrates a superconductor. This idea of a massless gauge field acquiring a mass due to spontaneous symmetry breaking was central to steps towards the Standard Model made by Nambu and by Weinberg.
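The link between exponential field decay and a photon mass can be written out in a short worked form. This is a sketch in SI units for a superconductor filling the half-space x > 0, using the London equation; the symbols $n_s$ (superfluid density), $m$ and $e$ (electron mass and charge), $B_0$ (field at the surface), and $m_\gamma$ (effective photon mass) are notation assumed here, not taken from the text above.

```latex
\begin{aligned}
\nabla^2 \mathbf{B} &= \frac{1}{\lambda_L^2}\,\mathbf{B},
&\qquad \lambda_L &= \sqrt{\frac{m}{\mu_0 n_s e^2}}, \\
B(x) &= B_0\, e^{-x/\lambda_L},
&\qquad m_\gamma &= \frac{\hbar}{\lambda_L c}.
\end{aligned}
```

The decay length of the field plays the role of the Compton wavelength of a massive photon, so a larger superfluid density corresponds to a shorter penetration depth and a larger effective mass.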