At the end of 2009 I posted a list of the most viewed posts on this blog. Here is the list for 2010. The pageview numbers are from Google Analytics and are an underestimate.

1. Nature publishes 17 parameter fit to 20 data points 2,900 pageviews

2. There is no perfect Ph.D project 1,000

3. A Ph.D without scholarship? 310

4. Breakdown of the Born-Oppenheimer approximation 300

5. Beware of curve fitting 280

6. OPV cell efficiency is an emergent property 230

7. Artificial photosynthesis 224

8. Want ad: measure for quantum frustration 180

9. 100 most influential living British scientists 176

10. Ph.D without knowledge 154

Wednesday, December 29, 2010

Sunday, December 26, 2010

Science or metaphor?

I have just started reading a beautiful little book by Denis Noble entitled The Music of Life: Biology beyond genes. It highlights the limitations of a reductionistic approach to biology and the value of an emergent perspective, as in systems biology.

Some of the material is in an earlier article. He considers the following two paragraphs, which both discuss the role of genes in an organism:

Now they swarm in huge colonies, safe inside gigantic lumbering robots, sealed off from the outside world, communicating with it by tortuous indirect routes, manipulating it by remote control. They are in you and me; they created us, body and mind; and their preservation is the ultimate rationale for our existence.

Now they are trapped in huge colonies, locked inside highly intelligent beings, moulded by the outside world, communicating with it by complex processes, through which, blindly, as if by magic, function emerges. They are in you and me; we are the system that allows their code to be read; and their preservation is totally dependent on the joy we experience in reproducing ourselves (our joy not theirs!) We are the ultimate rationale for their existence.

Which do you agree with? Is there any experiment which could be used to distinguish the scientific validity of the two statements?

Thursday, December 23, 2010

A veteran teacher shares his wisdom

David Griffiths, author of several widely used textbooks, taught for 30 years at Reed College, a rather distinctive undergraduate institution in Portland, Oregon. He has a provocative piece, Illuminating physics for students, in Physics World. [I first encountered the article on the noticeboard outside the Mott lecture theatre at Bristol University]. The summary is:

He says that the role of a physics teacher should be to illuminate the subject's intrinsic interest, beauty and power – and warns that attempts to make it more marketable using gimmicks, false advertising or dilution are bound to be counterproductive.

It is worth reading in full. But here are a few extracts to pique your interest:

What we have on offer is nothing less than an explanation of how matter behaves on the most fundamental level. It is a story that is magnificent (by good fortune or divine benevolence), coherent (at least that is the goal), plausible (though far from obvious) and true (that is the most remarkable thing about it). It is imperfect and unfinished (of course), but always improving. It is, moreover, amazingly powerful and extraordinarily useful. Our job is to tell this story ....

[clickers] can be powerfully effective in the hands of an inspired expert like Mazur, but I have seen them reduced to distracting gimmicks by less-capable instructors. What concerns me, however, is the unspoken message reliance on such devices may convey: (1) this stuff is boring; and (2) I cannot rely on you to pay attention. Now, point (2) may be valid, but point (1) is so utterly and perniciously false that one should, in my view, avoid anything that is even remotely open to such an interpretation.....

I have never suffered the interference of a brainless dean concerned only with grants and publications, and as a consequence I have been more productive than would have been possible in the usual academic straitjacket. I do not know what makes good teaching, beyond the obvious things: absolute command of the subject; organization; preparation (I write out every lecture verbatim the night before, though I never bring my notes to the lecture hall); clarity; enthusiasm; and a story-teller's instinct for structure, pacing and drama. I personally never use transparencies or PowerPoint – these things are fine for scientific talks, but not in the classroom. ....

Wednesday, December 22, 2010

Tuneable electron-phonon scattering in graphene

There is a nice article by Michael S. Fuhrer in Physics about tuning the Fermi surface area in graphene and using it to observe qualitatively different temperature dependence of the resistivity due to electron-phonon scattering.
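To make the tuning concrete: in graphene the Fermi wavevector grows with carrier density n as k_F = sqrt(πn) (counting spin and valley degeneracy), and the scale that separates the two regimes of phonon-limited resistivity is the Bloch-Grüneisen temperature T_BG = 2ħ v_s k_F / k_B. Well below T_BG only small-angle phonon scattering is available and the resistivity rises with a steep power of T; well above it, the resistivity is linear in T. The sketch below is a minimal illustration, assuming an acoustic phonon velocity v_s ≈ 2.1×10^4 m/s (a typical literature value, not taken from the article).

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s
KB = 1.380649e-23       # Boltzmann constant, J/K
V_SOUND = 2.1e4         # ASSUMED longitudinal acoustic phonon velocity in graphene, m/s


def fermi_wavevector(n_cm2):
    """k_F = sqrt(pi * n) for graphene (spin x valley degeneracy 4); n in cm^-2, k_F in m^-1."""
    return math.sqrt(math.pi * n_cm2 * 1e4)  # 1 cm^-2 = 1e4 m^-2


def bloch_gruneisen_temperature(n_cm2, v_sound=V_SOUND):
    """T_BG = 2 hbar v_s k_F / k_B: energy of an acoustic phonon with wavevector 2 k_F, in kelvin."""
    return 2 * HBAR * v_sound * fermi_wavevector(n_cm2) / KB


for n in (1e11, 1e12, 1e13):
    print(f"n = {n:.0e} cm^-2 -> T_BG ~ {bloch_gruneisen_temperature(n):.0f} K")
```

Because T_BG scales as sqrt(n), a gate that changes the density by a factor of 100 moves the crossover by a factor of 10, which is what makes the qualitatively different temperature dependences accessible in a single device.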

Tuesday, December 21, 2010

Good internet access while travelling in the USA

In the past this has been an issue. But on my last trip I bought one of these Virgin Broadband2Go devices at Radio Shack. The rates have since dropped, so on this trip I am paying just $40 for a month of unlimited access. The coverage is pretty good, although access is occasionally slow in remote locations.

Sunday, December 19, 2010

Seeing what you want to see

There is a good Opinion piece in the November Scientific American, Fudge Factor: a look at Harvard science fraud case, by Scott O. Lilienfeld. He discusses the problem of distinguishing intentional scientific fraud from confirmation bias, the tendency we have as scientists to interpret data selectively so as to confirm our own theories.

This is a good reminder that the easiest person to fool is yourself.

Friday, December 17, 2010

Not everything is RVB

The pyrochlore lattice consists of a three-dimensional network of corner-sharing tetrahedra. In a number of transition metal oxides the metal ions are located on a pyrochlore lattice.

The ground state of the antiferromagnetic Heisenberg model on a pyrochlore lattice is a gapped spin liquid (see this PRB by Canals and Lacroix). The ground state consists of weakly coupled RVB (resonating valence bond) states on each tetrahedron. The conditions necessary for deconfined spinons have been explored in Klein-type models on the pyrochlore lattice.
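The building block of that picture is a single tetrahedron. Because all six pairs of spins are coupled equally, J Σ_{i<j} S_i·S_j = (J/2)[S_tot(S_tot + 1) - 3], so the lowest level is a total-spin singlet at -3J/2, and four spin-1/2's can form two independent singlets, giving a two-fold degenerate valence-bond ground state. The following is a minimal exact-diagonalization sketch (NumPy, J = 1) that checks the full spectrum of one tetrahedron; it is an illustration of the building block only, not the method used in the papers cited.

```python
import itertools

import numpy as np

# Spin-1/2 operators (hbar = 1)
SX = np.array([[0, 1], [1, 0]], dtype=complex) / 2
SY = np.array([[0, -1j], [1j, 0]], dtype=complex) / 2
SZ = np.array([[1, 0], [0, -1]], dtype=complex) / 2


def site_op(op, site, n_sites):
    """Embed a single-site operator at position `site` in the 2**n_sites Hilbert space."""
    mats = [np.eye(2)] * n_sites
    mats[site] = op
    out = mats[0]
    for m in mats[1:]:
        out = np.kron(out, m)
    return out


def tetrahedron_hamiltonian(J=1.0):
    """Heisenberg H = J * sum_{i<j} S_i . S_j over all 6 bonds of a tetrahedron."""
    n = 4
    H = np.zeros((2**n, 2**n), dtype=complex)
    for i, j in itertools.combinations(range(n), 2):  # every pair is a bond
        for s in (SX, SY, SZ):
            H += J * site_op(s, i, n) @ site_op(s, j, n)
    return H


energies = np.linalg.eigvalsh(tetrahedron_hamiltonian())
print(np.round(energies, 6))
```

The spectrum consists of two singlets at -3/2, nine states (three triplets) at -1/2, and five states (a quintet) at +3/2.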

The material KOs2O6 has a pyrochlore structure and was discovered to be superconducting with a transition temperature of about 10 K. Originally it was thought (and hoped) that the superconductivity might be intimately connected to RVB physics. However, it now seems that the superconductivity is not unconventional. It can be explained in terms of a strong-coupling electron-phonon interaction, which arises from anharmonic phonons associated with "rattling" vibrational modes of the K ions; these ions sit inside relatively large cavities within the cage of Os and O ions (see this paper).

To me this is a cautionary tale about my enthusiasm for RVB physics.

Thursday, December 16, 2010

The challenge of H-bonding

Hydrogen bonds are ubiquitous in biomolecules and are key to understanding their functionality. This was first appreciated by Pauling and exploited by Watson and Crick to decode the structure of DNA. Hydrogen bonding is also the origin of the unique and amazing properties of water. There is a helpful review article, Hydrogen bonding in the solid state, by Steiner. Here are a few things I learnt.

Energies vary by 2 orders of magnitude, 0.2-40 kcal/mol [10 meV to 2 eV]. This spans the energy range from van der Waals interactions to covalent and ionic bonds. The relative amounts of electrostatic, covalent, and dispersion character of the bond vary across this range.
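The bracketed conversion is easy to check: 1 kcal/mol corresponds to about 43 meV per bond, so the quoted range is roughly 9 meV to 1.7 eV, consistent with the rounded numbers above. A small sketch of the arithmetic, using exact SI constants:

```python
J_PER_KCAL = 4184.0             # thermochemical kilocalorie, J
AVOGADRO = 6.02214076e23        # mol^-1
J_PER_EV = 1.602176634e-19      # J


def kcal_per_mol_to_ev(e_kcal_mol):
    """Convert a molar energy in kcal/mol to an energy per bond in eV."""
    return e_kcal_mol * J_PER_KCAL / AVOGADRO / J_PER_EV


for e in (0.2, 40.0):
    print(f"{e:5.1f} kcal/mol = {1000 * kcal_per_mol_to_ev(e):7.1f} meV")
```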

For O-H...O bonds the shift in the O-H stretching frequency correlates with the distance between the two oxygen atoms. The O-H bond length is also correlated with the H...O distance.

All hydrogen bonds can be considered as incipient hydrogen transfer reactions.

Hydrogen bonds exhibit some unexplained isotope effects. Simple zero-point motion arguments suggest that deuterium substitution should lead to weaker bonds, as is observed in some cases. However, some bonds show a negligible effect and others an effect of the opposite sign.

Wednesday, December 15, 2010

Feynman on path integrals for cheap

The book Quantum Mechanics and Path Integrals by Feynman and Hibbs is a classic that was out of print; an old hardback copy is currently selling for $799! The good news is that the book has been reprinted by Dover and you can now buy a copy on Amazon for only US$12. My copy arrived today.

Tuesday, December 14, 2010

Basics of inflation

I quite like the new APS journal Physics because it has nice overview articles, which are particularly good for learning something about topics outside one's expertise. There is a good article, Can we test inflationary expansion of the early universe?

It explains the basic ideas behind inflation [including the broken symmetry associated with the inflaton field], why it is needed in standard big bang cosmology to solve the "horizon" and "flatness" problems, and the hope of actually finding more than circumstantial evidence for inflation.

Sunday, December 12, 2010

Marrying Heitler-London and Pauling

The Linus Pauling archive at Oregon State University has lots of nice resources including original manuscripts, videos, quotes, and photos. Above is a photo of Heitler and London with Pauling's wife.

Broken symmetry is comical

This comic in the Pearls before Swine series appeared in the newspaper today. I thank my family for bringing it to my attention. The previous day's cartoon was about Australia and alternative energy.

Saturday, December 11, 2010

Resonant Raman basics

I am trying to get a better understanding of resonant Raman scattering as a probe of the properties of excited states of organic molecules. The figure below [taken from a JACS paper] shows the enhanced coupling to vibrations seen in the Green Fluorescent Protein [blue curve in lower panel].

There is a nice site on Resonant Raman Theory set up by Trevor Dines (University of Dundee). It emphasizes that within the Born-Oppenheimer approximation one only sees a significant signal for a vibrational mode when the excited state involves a displacement along that vibrational co-ordinate.
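A minimal numerical sketch of this point, under stated assumptions: a single vibrational mode with the same frequency in the ground and excited electronic states, the Condon approximation (constant transition dipole), and the Franck-Condon ("A-term") sum-over-states expression for the 0 -> 1 Raman amplitude, proportional to Σ_v ⟨1_g|v_e⟩⟨v_e|0_g⟩/(E_v - E_L - iΓ). The dimensionless displacement `delta`, the 0-0 gap `e00`, the damping `gamma`, and the laser energy are all hypothetical, in units of the vibrational quantum. With delta = 0 the overlaps reduce to Kronecker deltas and the amplitude vanishes; any nonzero displacement switches it on.

```python
import numpy as np


def hermite_functions(n_max, x):
    """Normalized harmonic-oscillator eigenfunctions psi_0..psi_{n_max} on the grid x.

    Dimensionless units (hbar = m = omega = 1); uses the stable three-term recurrence
    psi_n = sqrt(2/n) x psi_{n-1} - sqrt((n-1)/n) psi_{n-2}.
    """
    psi = np.zeros((n_max + 1, x.size))
    psi[0] = np.pi ** -0.25 * np.exp(-x**2 / 2)
    if n_max >= 1:
        psi[1] = np.sqrt(2.0) * x * psi[0]
    for n in range(2, n_max + 1):
        psi[n] = np.sqrt(2.0 / n) * x * psi[n - 1] - np.sqrt((n - 1) / n) * psi[n - 2]
    return psi


def raman_fundamental_amplitude(delta, e_laser, gamma=0.1, e00=10.0, n_vib=40):
    """A-term amplitude for the 0 -> 1 Raman line of one mode (hypothetical parameters).

    The excited-state potential is the ground-state one shifted by `delta`; energies are in
    units of the vibrational quantum, with e00 the 0-0 electronic gap.
    """
    x = np.linspace(-15.0, 15.0, 4001)
    dx = x[1] - x[0]
    ground = hermite_functions(1, x)               # |0_g>, |1_g>
    excited = hermite_functions(n_vib, x - delta)  # |v_e>, centred at x = delta
    fc_0 = excited @ ground[0] * dx                # <v_e|0_g> for v = 0..n_vib
    fc_1 = excited @ ground[1] * dx                # <v_e|1_g>
    e_v = e00 + np.arange(n_vib + 1)               # vibronic energies of the excited state
    return np.sum(fc_1 * fc_0 / (e_v - e_laser - 1j * gamma))


for d in (0.0, 0.5, 1.0):
    print(d, abs(raman_fundamental_amplitude(d, e_laser=10.0)))
```

Within these assumptions the symmetric-molecule question below amounts to asking what mechanism, outside this Condon, Born-Oppenheimer picture, could make the amplitude nonzero when delta = 0.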

This raises the question of whether, in a completely symmetric molecule, one can have a resonant Raman signal due to effects that go beyond the Born-Oppenheimer approximation.

Friday, December 10, 2010

What did he know and when did he know it?

This was the key question in the Watergate scandal.

But this post is actually about what a Ph.D student should know and when they should know it. Here are a few different answers I have heard:

When the student knows more about the topic than their advisor/supervisor they are ready to submit their thesis.

At the end of your Ph.D you should know more about the topic than anyone else in the world.

The student should know more about the project than their advisor by the time they do their comprehensive exam (a few years into a US Ph.D).

Thursday, December 9, 2010

Simple valence bond model for a chemical reaction

Valence bond theory provides an intuitive picture not just of chemical bonding but also of bond breaking and making. For a reaction ab + c -> ac + b, one can write down the energy of the total system in terms of pairwise exchange integrals J and Coulomb integrals Q. This can be used to produce semi-empirical potential energy surfaces and/or diabatic states and the coupling between them. This is at the heart of the treatment of coupled electron-proton transfer by Hammes-Schiffer and collaborators, discussed in previous posts. I struggled a bit to find the background to this. It goes back to London-Eyring-Polanyi-Sato (LEPS). A nice summary is the paragraph below, taken from a paper by Kim, Truhlar, and Kreevoy. It provides a way to parametrise the Qs and Js in terms of empirical Morse potentials for the constituent molecules. At the transition state the gap to the next excited state is related to a singlet-triplet gap, a point emphasized by Shaik and collaborators.
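A minimal sketch of how the Qs and Js enter. For three atoms the London equation gives the ground-state energy as E = Q_AB + Q_BC + Q_AC - sqrt{½[(J_AB - J_BC)² + (J_BC - J_AC)² + (J_AC - J_AB)²]}, and a Sato-style parametrisation obtains each pair's Q and J from a singlet Morse curve and a triplet anti-Morse curve. The code assumes a collinear geometry, uses one set of roughly H2-like Morse parameters for all three pairs, and defaults the Sato parameter k to zero; it is a generic textbook LEPS form, not the specific parametrisation of Kim, Truhlar, and Kreevoy.

```python
import numpy as np

# ASSUMED Morse parameters, roughly those of H2: depth (eV), range (1/Angstrom), bond length (Angstrom)
D_E, BETA, R_0 = 4.746, 1.942, 0.742


def singlet_morse(r):
    """Singlet (bonding) curve: the usual Morse potential, zero at dissociation."""
    x = np.exp(-BETA * (r - R_0))
    return D_E * (x**2 - 2 * x)


def triplet_antimorse(r):
    """Triplet (repulsive) curve in Sato's anti-Morse form."""
    x = np.exp(-BETA * (r - R_0))
    return 0.5 * D_E * (x**2 + 2 * x)


def leps_collinear(r_ab, r_bc, k=0.0):
    """LEPS energy (eV) for collinear A-B-C relative to three separated atoms; k is the Sato parameter."""
    q, j = [], []
    for r in (r_ab, r_bc, r_ab + r_bc):  # collinear geometry: the A-C distance is r_ab + r_bc
        e1, e3 = singlet_morse(r) * (1 + k), triplet_antimorse(r) * (1 - k)
        q.append(0.5 * (e1 + e3))  # Coulomb integral Q
        j.append(0.5 * (e1 - e3))  # exchange integral J
    j_ab, j_bc, j_ac = j
    exchange = np.sqrt(0.5 * ((j_ab - j_bc) ** 2 + (j_bc - j_ac) ** 2 + (j_ac - j_ab) ** 2))
    return (q[0] + q[1] + q[2] - exchange) / (1 + k)  # London equation


r = np.linspace(0.6, 1.5, 91)
barrier = leps_collinear(r, r).min() + D_E  # lowest symmetric-stretch energy, measured from A + BC
print(f"rough barrier estimate along r_AB = r_BC: {barrier:.2f} eV")
```

Far from C the surface reduces to the A-B Morse curve, which is a quick sanity check on the Q/J bookkeeping. The lowest point on the symmetric line gives only a rough barrier estimate and is sensitive to the choice of k.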

More background can be found in the old text Theoretical Chemistry by Glasstone (1944).

Wednesday, December 8, 2010

Coupled electron-proton transfer

In many biochemical processes electron transfer and proton transfer are important, and they have featured in many of my posts. However, another important and fascinating process is coupled electron-proton transfer. This is the process discussed in a previous post about the enzyme soybean lipoxygenase. Sometimes this can be viewed as a hydrogen atom transfer, but in some cases the electron and proton start or end at different sites on the donor or acceptor molecule. Describing this process theoretically (even in the H-atom transfer case) has proven to be a challenge, which has recently attracted significant attention. A recent review is by Sharon Hammes-Schiffer. Basic questions that arise include:

- What is the reaction co-ordinate? Is it the proton (or H-atom) position? Or the solvent (or heavy atoms) configuration? Or both?

- What is the role of proton tunneling?

- Are the dynamics of the electron and of the proton adiabatic or non-adiabatic?

- Under what conditions are the electron and proton transfers concerted, and when are they sequential?

[The figure above is taken from another review]. The figure below shows some possible mechanisms for the coupled proton-electron transfer between tyrosine and tryptophan, which is important in various biochemical processes. It is taken from here.

Monday, December 6, 2010

Tunneling without instantons?

This attempts to answer questions raised in a previous post.

Here is one point of view.

Tunneling is always present, and as one lowers the temperature (or increases the coupling to the environment) one just has a crossover from transitions dominated by activation over the barrier to tunneling under the barrier. Instantons [or the "bounce solution", which is a solution to the classical equations of motion in an inverted potential] are just convenient calculational machinery that arises when evaluating a path integral approximately by finding saddle points. There is always a contribution from the trivial solution corresponding to the top of the barrier. Quadratic fluctuations about this saddle point give a "prefactor" which includes quantum corrections due to tunneling and reflection.

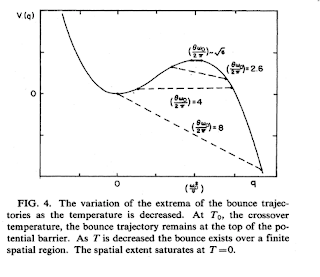

Below the crossover temperature T0 this first saddle point becomes unstable and there is a second saddle point, which is the instanton solution.
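For orientation, the crossover temperature is T_0 = ħω_b/(2πk_B), where ω_b is the barrier frequency set by the curvature of the barrier top. A small sketch of the arithmetic, assuming barrier frequencies are quoted as wavenumbers; the values in the loop (500 to 2000 cm^-1) are chosen purely for illustration.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s
KB = 1.380649e-23       # Boltzmann constant, J/K
C_CM = 2.99792458e10    # speed of light, cm/s


def crossover_temperature(barrier_wavenumber_cm):
    """T_0 = hbar omega_b / (2 pi k_B) for a barrier frequency quoted in cm^-1."""
    omega_b = 2 * math.pi * C_CM * barrier_wavenumber_cm  # angular frequency, rad/s
    return HBAR * omega_b / (2 * math.pi * KB)


for nu in (500.0, 1000.0, 2000.0):
    print(f"omega_b = {nu:.0f} cm^-1 -> T_0 ~ {crossover_temperature(nu):.0f} K")
```

A barrier frequency of 1000 cm^-1 gives T_0 of roughly 230 K, so for light-particle barriers of this stiffness the crossover can lie near physiological temperatures.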

I thank Eli Pollak for sharing his thoughts on this subject.

But all this seems at odds with the spirit of the approach to tunneling in dissipative environments pioneered by Leggett [and reviewed in detail here], which seems to assert that tunneling only exists when instanton solutions are present.

Perhaps the key distinction is that the instanton captures coherent tunneling, whereas the quadratic fluctuations only capture incoherent tunneling. Specifically, if one considers a double-well system, the instanton can capture the level splitting associated with tunneling.
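The coherent splitting that the instanton is meant to capture can be computed exactly for a model double well by brute-force diagonalization, which gives a benchmark for any semiclassical estimate. A minimal sketch, assuming V(x) = b(x² - 1)² in units with ħ = m = 1, where the barrier height b is a hypothetical parameter; this is direct diagonalization, not an instanton calculation.

```python
import numpy as np


def double_well_splitting(barrier, n=1000, half_width=3.0):
    """Tunnel splitting E1 - E0 for H = -(1/2) d^2/dx^2 + barrier * (x^2 - 1)^2 (hbar = m = 1).

    Second-order finite differences on a uniform grid, wavefunction zero at +/- half_width.
    """
    x = np.linspace(-half_width, half_width, n)
    dx = x[1] - x[0]
    potential = barrier * (x**2 - 1) ** 2
    diagonal = 1.0 / dx**2 + potential          # kinetic 1/dx^2 from the central difference
    off_diagonal = np.full(n - 1, -0.5 / dx**2)
    hamiltonian = np.diag(diagonal) + np.diag(off_diagonal, 1) + np.diag(off_diagonal, -1)
    energies = np.linalg.eigvalsh(hamiltonian)  # sorted ascending
    return energies[1] - energies[0]


for b in (4.0, 8.0, 12.0):
    print(f"barrier height {b:4.1f}: splitting {double_well_splitting(b):.3e}")
```

The splitting shrinks rapidly as the barrier grows; comparing these numbers with the instanton formula for the same potential is one concrete way to test how much of the coherent physics the instanton captures.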

I am keen to hear others' perspectives.

Saturday, December 4, 2010

Deconstructing H atom transfer in enzymes

Yesterday I had a really helpful discussion with Judith Klinman about the question of quantum tunneling of hydrogen in enzymes. [An accessible summary of her point of view is a recent Perspective with Zachary Nagel in Nature Chemical Biology].

Here are a few points I came to a better appreciation of:

There are a number of enzymes (e.g. soybean lipoxygenase) which have very small activation energies (Ea~0-2 kcal/mol ~ 100 meV) for hydrogen atom transfers. (n.b. this is a coupled electron and proton transfer). They exhibit kinetic isotope effects which are

- very large in magnitude (~100)

- weakly temperature dependent (difference in Ea for H and D ~ 1 kcal/mol ~ 50 meV)

- change their temperature dependence significantly with mutation

[In the figure above the hydrogen atom (black, in the centre of the figure) is transferred to the oxygen atom (red, to the left of the H atom). Mutations correspond to substituting the amino acids Ile553 and/or Leu754.]

The key physics is the following (originally proposed by Kuznetsov and Ulstrup), which might be viewed as the proton version of Marcus-Hush electron transfer theory. A JACS paper by Hatcher, Soudackov, and Hammes-Schiffer gives a more sophisticated treatment, including molecular dynamics simulations to extract model parameter values.

The proton tunnels directly between the vibrational ground states of the reactant and product. The isotope effect arises because the spatial extent of the vibrational wavefunction is different for the two isotopes. The temperature dependence of the isotope effect is determined by vibrations that modulate the distance between the donor and acceptor atoms.
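The origin of the isotope effect in this picture can be illustrated with the crudest version of the model: two identical harmonic wells, one on the donor and one on the acceptor, a fixed distance R apart, with the rate taken proportional to the squared overlap of the two ground-state wavefunctions, exp(-mωR²/2ħ). Because H and D feel the same force constant k, mω = sqrt(mk) is larger for D, so its wavefunction is narrower and its overlap smaller. The sketch below assumes a hypothetical H stretch frequency of 3000 cm^-1 and includes no donor-acceptor vibrations. Since those vibrations are exactly what controls the temperature dependence in the model described above, the printed numbers are not meant to be compared with the measured isotope effects.

```python
import math

HBAR = 1.054571817e-34       # J s
AMU = 1.66053906660e-27      # kg
C_CM = 2.99792458e10         # speed of light, cm/s
M_H, M_D = 1.00783, 2.01410  # isotope masses, amu

# ASSUMPTION: one harmonic force constant shared by H and D (Born-Oppenheimer),
# chosen so that the H stretch sits at a hypothetical 3000 cm^-1.
OMEGA_H = 2 * math.pi * C_CM * 3000.0
K_FORCE = M_H * AMU * OMEGA_H**2  # N/m


def inverse_width_squared(mass_amu, k=K_FORCE):
    """alpha = m omega / hbar = sqrt(m k) / hbar (m^-2); the ground state is exp(-alpha x^2 / 2)."""
    return math.sqrt(mass_amu * AMU * k) / HBAR


def overlap_squared(mass_amu, distance_m, k=K_FORCE):
    """|<0_donor|0_acceptor>|^2 = exp(-alpha R^2 / 2) for identical wells a distance R apart."""
    return math.exp(-inverse_width_squared(mass_amu, k) * distance_m**2 / 2)


def kinetic_isotope_effect(distance_angstrom):
    """Ratio of H to D squared overlaps at a fixed tunneling distance (given in Angstrom)."""
    r = distance_angstrom * 1e-10
    return overlap_squared(M_H, r) / overlap_squared(M_D, r)


for r_ang in (0.3, 0.5, 0.66):
    print(f"R = {r_ang:.2f} A -> KIE ~ {kinetic_isotope_effect(r_ang):.3g}")
```

Within this toy model the isotope effect grows very steeply with R, which is why the fitted tunneling distance is so sensitive to the data and why its apparent changes with mutation, discussed next, are hard to interpret.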

The main challenge for this model is the following. The simplest treatment deduces that the tunneling distance is 0.66 Angstroms [which is less than the van der Waals radii?] and that this increases significantly, up to 2.6 Angstroms, with mutations. Hammes-Schiffer obtains smaller variations, which are more realistic.

However, recent determinations of the crystal structures of the mutants show some structural changes, but these do not clearly correlate with the changes in kinetics. In particular, there are no detectable changes in the tunneling distance. Klinman takes this as evidence for the important role of dynamics.

But perhaps these structural changes alter the potential energy surface, which in turn changes the amount of tunneling. Things I would like to see include:

- DFT calculations of the potential energy surfaces for the different mutants

- an examination of the Debye-Waller factors for the donor and acceptor atoms in the different mutant structures

- an examination of non-Born-Oppenheimer effects [which will be isotope dependent]

Note added later: I just found a recent paper by Edwards, Soudackov, and Hammes-Schiffer which uses molecular dynamics to show how the mutations change the tunneling distance and frequency, and consequently the kinetic isotope effect. The abstract figure is below.

Friday, December 3, 2010

Kagome lattice antiferromagnet may be a spin liquid

As I have posted previously, finding a realistic Heisenberg spin model Hamiltonian with a spin liquid ground state has proven to be difficult. The model on the Kagome lattice was thought to be a prime candidate for many years, partly because the classical model has an infinite number of degenerate ground states. However, a few years ago Rajiv Singh and David Huse performed a series expansion study which suggested that the ground state was actually a valence bond crystal with a unit cell of 36 spins. In the picture below the blue lines represent spin singlets, and H, P, and E denote Hexagons, Pinwheels, and Empty triangles respectively. This result was confirmed by my UQ colleagues Glen Evenbly and Guifre Vidal using a completely different numerical method based on entanglement renormalisation.

However, new numerical results using DMRG, by Yan, Huse, and White, appeared on the arXiv this week. They find a spin liquid ground state, with a gap to both singlet and triplet excitations.

Interlayer magnetoresistance as a probe of Fermi surface anisotropies

Here are the slides for a talk I will give in the physics department at Berkeley this afternoon. Some of what I will talk about concerns a paper with Michael Smith, Fermi surface of underdoped cuprate superconductors from interlayer magnetoresistance: Closed pockets versus open arcs, which appeared online in PRB this week.

Thursday, December 2, 2010

Ambiguities about tunneling at non-zero temperature?

An important question came up in the seminar I gave today. It concerns something I have been confused about for quite a while and I was encouraged that some of the audience got animated about it.

Consider the problem of quantum tunneling out of the potential minimum on the left in the Figure below.

If one considers a path integral approach, then one says that tunneling occurs when there is an "instanton" solution (i.e., a non-trivial solution) to the classical equations of motion in imaginary time with a period determined by the temperature. This only occurs when the temperature is less than the crossover temperature

$$T_0 = \frac{\hbar \omega_b}{2 \pi k_B},$$

where the barrier frequency ω_b is defined by the curvature of the top of the potential barrier. At temperatures above this there is only one solution to the classical equations of motion, the trivial one x(tau)=x_b, corresponding to the minimum of the inverted potential. One can then calculate the quantum fluctuations about this minimum at the Gaussian level. This gives a total decay rate which is well defined, provided the temperature T is larger than T_0. What do these quantum fluctuations represent? I would say they represent tunneling just below the top of the barrier and reflection from just above the top of the barrier. In my talk I said this situation of "no instantons" represents "no tunneling", which several people disagreed with. I corrected myself with "no deep tunneling."

However, there is an alternative way to obtain the same expression, which seems to me to involve assuming lots of tunneling! Eli Pollak [who is also visiting Berkeley] reminded me of this today. I think this derivation was originally due to Bell, following earlier work by Wigner, and is implicit in Truhlar and collaborators' treatment of tunneling, which is implemented in their POLYRATE code. One considers the tunneling transmission probability as a function of energy and integrates over all energies with a Boltzmann weighting factor.
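For a pure parabolic barrier the two routes can be compared numerically. The sketch below assumes an inverted-parabola barrier whose transmission probability is P(E) = 1/(1 + exp[2π(V_b - E)/ħω_b]), with the energy integral extended down to E -> -∞ (a real barrier is only parabolic near its top, so this is an idealization). It compares the Bell-style Boltzmann average of P(E), normalised to the classical step-function result, with the barrier-top factor (u/2)/sin(u/2), u = ħω_b/k_BT, that Gaussian fluctuations about the trivial saddle point contribute. The two agree, and both diverge as u -> 2π, i.e. as T -> T_0.

```python
import numpy as np


def bell_average(u, n_points=200_001):
    """Boltzmann average of the parabolic-barrier transmission probability, relative to classical.

    u = hbar * omega_b / (k_B T).  With eps = (E - V_b) / (k_B T) the transmission is
    P = 1 / (1 + exp(-a eps)), a = 2 pi / u, and the ratio to the classical
    (step-function) result is the integral of exp(-eps) P(eps) over the whole real line.
    """
    a = 2 * np.pi / u
    if a <= 1:
        raise ValueError("the energy integral diverges for T <= T_0 (u >= 2 pi)")
    eps = np.linspace(-50.0 / (a - 1), 50.0, n_points)  # tails smaller than exp(-50) are dropped
    integrand = np.exp(-eps - np.logaddexp(0.0, -a * eps))  # exp(-eps) * P, computed in log space
    d_eps = eps[1] - eps[0]
    return d_eps * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))  # trapezoid rule


def fluctuation_factor(u):
    """Barrier-top prefactor from Gaussian fluctuations about x(tau) = x_b: (u/2) / sin(u/2)."""
    return (u / 2) / np.sin(u / 2)


for u in (0.5, 2.0, 5.0):
    print(f"u = {u}: Bell integral {bell_average(u):.6f}, fluctuation factor {fluctuation_factor(u):.6f}")
```

The agreement shows that, at least for this idealized barrier, the Gaussian-fluctuation prefactor and the energy-resolved transmission integral are the same quantity computed in two ways; it does not by itself settle which physical picture is the more appropriate one.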

How does one reconcile these two derivations, which seem to me to involve very different physics? I welcome comments.

Revival of the non-Fermi liquid

On the Condensed Matter Physics Journal Club, Patrick Lee has a nice summary of recent work on very subtle problems with large-N expansions of gauge theories that have non-Fermi liquid ground states.

Chemistry seminar at Berkeley

I am giving a seminar, Limited role of quantum dynamics in biomolecular function, in the Chemistry department at Berkeley this afternoon.

Wednesday, December 1, 2010

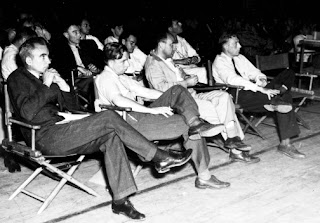

An ugly period in American physics/politics

On the plane from Brisbane to LA I watched some of The Trials of J. Robert Oppenheimer [you can also watch it online], which gave an excellent portrayal (using the actual testimony) of the trial and the background that led to Oppenheimer losing his security clearance. It also had some excellent background on Oppenheimer's youth and his time as a young faculty member at Berkeley. [Mental health issues feature somewhat].

Other physicists who were "tarred and feathered" in the McCarthy era were David Bohm [who was basically fired by Princeton University because he refused to testify] and Frank Oppenheimer [younger brother of J. Robert] who was forced to resign from a faculty position at University of Minnesota. He later founded the Exploratorium in San Francisco.

Last year Physics Today published a fascinating article by J.D. Jackson [of electrodynamics textbook fame], Panofsky agonistes: The 1950 loyalty oath at Berkeley, which chronicles more problems from that era.

[Coincidentally, this post is being written in Berkeley!].

Another aside: the photo at the top is of the audience for a colloquium at Los Alamos during the Manhattan project. See who you can recognise.

Modelling electron transfer in photosynthesis

This follows up on a previous post about measurements of the rate at which electron transfer occurs in a photosynthetic protein. I noted several deviations of the experimental results from what is predicted by Marcus-Hush electron transfer theory. This is not necessarily surprising because the system is not quite in the right parameter regime.

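In principle [at least to me] this should be described by a spin-boson model, which has the Hamiltonian

$$H = \frac{\epsilon}{2}\sigma_z - \frac{\hbar\Delta}{2}\sigma_x + \sum_\alpha \left( \frac{p_\alpha^2}{2m_\alpha} + \frac{1}{2} m_\alpha \omega_\alpha^2 x_\alpha^2 \right) + \frac{\sigma_z}{2} \sum_\alpha C_\alpha x_\alpha,$$

where ε is the energy difference between the donor and acceptor states and ħΔ/2 is the electronic coupling between them, and the spectral density contains all the relevant information about the protein dynamics,

$$J(\omega) = \frac{\pi}{2} \sum_\alpha \frac{C_\alpha^2}{m_\alpha \omega_\alpha} \delta(\omega - \omega_\alpha).$$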

So the question I have is: if one has the correct parameters and spectral density, can one actually describe all the experiments? Below is the spectral density found by Parson and Warshel in molecular dynamics simulations.
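One limit of this question can be sketched explicitly. If the bath couples to the two-level system as (σ_z/2)Σ_α C_α x_α and the spectral density is J(ω) = (π/2)Σ_α C_α²/(m_α ω_α) δ(ω - ω_α), then the reorganization energy is λ = (1/π)∫ J(ω)/ω dω. For weak electronic coupling and a classical bath (k_BT much larger than the relevant ħω), the golden-rule rate then reduces to the Marcus form. The sketch assumes a hypothetical Ohmic spectral density with an exponential cutoff placed well below k_BT at 300 K, a coupling of 5 meV, and ħ = 1 (frequencies expressed as energies in eV). A realistic protein spectral density such as Parson and Warshel's also contains high-frequency modes for which the classical-bath limit may not hold.

```python
import numpy as np

HBAR_EV_S = 6.582119569e-16  # reduced Planck constant, eV s
KB_EV = 8.617333262e-5       # Boltzmann constant, eV/K


def ohmic(eta, omega_c):
    """Hypothetical Ohmic spectral density with exponential cutoff, J(w) = eta w exp(-w/omega_c).

    Frequencies are expressed as energies hbar*w in eV, so eta is dimensionless.
    """
    return lambda w: eta * w * np.exp(-w / omega_c)


def reorganization_energy(spectral_density, omega_max, n=200_000):
    """lambda = (1/pi) * integral_0^omega_max J(w)/w dw, by the midpoint rule (avoids w = 0)."""
    dw = omega_max / n
    w = (np.arange(n) + 0.5) * dw
    return np.sum(spectral_density(w) / w) * dw / np.pi


def marcus_rate(coupling, delta_g, lam, temperature):
    """Non-adiabatic Marcus rate in s^-1; coupling, delta_g and lam in eV, temperature in K."""
    kt = KB_EV * temperature
    prefactor = 2 * np.pi / HBAR_EV_S * coupling**2 / np.sqrt(4 * np.pi * lam * kt)
    return prefactor * np.exp(-(delta_g + lam) ** 2 / (4 * lam * kt))


# Cutoff 5 meV, well below k_B T ~ 26 meV at 300 K; analytic result is eta * omega_c / pi.
lam = reorganization_energy(ohmic(eta=40.0, omega_c=0.005), omega_max=0.2)
print(f"lambda = {lam:.4f} eV (analytic {40.0 * 0.005 / np.pi:.4f} eV)")
for dg in (0.0, -lam, -2 * lam):
    print(f"dG = {dg:+.3f} eV: k = {marcus_rate(0.005, dg, lam, 300.0):.2e} s^-1")
```

The rate is largest when the driving force equals the reorganization energy and falls off on either side, which is the Marcus behaviour against which the deviations mentioned above are measured.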

Sunday, November 28, 2010

Frank Fenner (1914-2010): a legacy of public science

Frank Fenner (1914-2010), one of Australia's most distinguished scientists, died this week. He was an immunologist who is best known for leading the world-wide eradication of the smallpox virus and for introducing myxomatosis to stop the rabbit plague in Australia. The latter led to an interesting study in evolution and genetics, described in Myxomatosis, a recent Cambridge University Press book he co-authored (in his nineties!).

He was also author of a classic text, Medical Virology, first published in 1970, now in its fourth edition.

I partly know of Fenner because my father knew him, through working at the John Curtin School of Medical Research (JCSMR) at the ANU in Canberra. The prolific Fenner also wrote an exhaustive history of the JCSMR. [Three Nobel Prizes have been awarded for work done in the JCSMR].

What has immunology got to do with emergence and physics? I have always been fascinated by the existence of the Reviews of Modern Physics article, Immunology for physicists.

Saturday, November 27, 2010

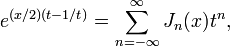

Abramowitz and Stegun online

This morning I was at home struggling with some Bessel function identities. I really wanted to look at the copy of Abramowitz and Stegun: Handbook of Mathematical Functions that I have in my office, but I discovered that the complete text is available online.

I know Wolfram Mathworld can be useful but it does not have the same level of detail as A&S.

Friday, November 26, 2010

Research income is a good measure of ...

While on the subject of money .... Research income is a good measure of .... research income, and not much more. Consider the following:

Obituaries and Nobel Prize citations do not mention how much research income someone received.

Grants are a means to an end, not an end in themselves. Sometimes they are necessary to do good research, either to hire people to do the work, or to purchase or build equipment. But, grants are not a sufficient condition to do good research.

Many significant discoveries, especially experimental ones, come from people doing "tabletop" science with small budgets. The discovery of graphene is a significant example.

A distinguished elderly colleague expressed his disappointment to me that his department newsletter was always trumpeting the grants that people got. He asked, "Why aren't there any articles about what discoveries they make with the money?"

I find it easier to get grants than to do really significant and original research. As I have posted before, the latter is very hard work. I doubt I am alone in this.

Grant writing involves a different skill set from actually doing the research. Most people are more adept at one than the other.

A more interesting metric than research income or total number of papers is the ratio of the two quantities. Citations and the h-index are better. But, in the end, there is only one meaningful and worthwhile measure of research productivity: creation of significant new scientific knowledge. Don't forget it, and don't get distracted by endlessly chasing money or comparing your income to others'.
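For anyone who has not met it, the h-index is the largest number h such that h of your papers have each been cited at least h times. A minimal Python sketch (the citation counts in the example are made up):

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    h = 0
    # Rank papers from most to least cited; h is the last rank where count >= rank.
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


print(h_index([25, 8, 5, 4, 3, 0]))
```

Note that one very highly cited paper barely moves the h-index, which is part of why it is less easily gamed than a raw citation or grant total.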

Money changes you

Phil Anderson finishes his classic More is Different article from Science in 1972 with the cheeky and amusing conclusion:

Marx said that "Quantitative differences become qualitative ones." But a dialogue in Paris from the 1920’s sums it up even more clearly:

FITZGERALD: The rich are different from us.

HEMINGWAY: Yes, they have more money.

I often wondered what this was all about. It is worth reading Quote/Counterquote, which explains that the dialogue never actually happened.

Thursday, November 25, 2010

Faculty position in Melbourne available

I was asked to publicise a position in Condensed Matter Theory that has been advertised at University of Melbourne. I am always keen to see more Condensed Matter Theory in Australia!

All the details are here.

I believe that applications will still be received for a week or two after the advertised closing date (30 November), but applications made after the closing date should also be sent directly to Professor Les Allen or Professor Lloyd Hollenberg.

Wednesday, November 24, 2010

Possible origin of anisotropic scattering in cuprates

Previously I posted about the anisotropic scattering rate in the optimally doped to overdoped cuprates. Both Angle-Dependent Magnetoresistance (ADMR) and Angle-Resolved PhotoEmission Spectroscopy (ARPES) suggest that it has a d-wave variation around the Fermi surface and that it has a "marginal Fermi liquid" dependence on energy and temperature. ADMR measurements found that the strength of this scattering scales with the transition temperature, and hence increases as one moves towards optimal doping.
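To make the phenomenology concrete, here is a toy parametrisation. It is my own illustrative form with made-up parameter values, not a fit taken from the ADMR or ARPES papers: an isotropic, temperature-independent term plus an anisotropic term with a cos^2(2 phi) angular dependence (largest at the antinode, zero at the node) that grows linearly with the larger of |omega| and k_B T, as in a marginal Fermi liquid.

```python
import math


def scattering_rate(phi, kt, omega=0.0, gamma0=1.0, gamma1=0.5):
    """Hypothetical anisotropic scattering rate (meV) around a cuprate Fermi surface.

    phi    : angle around the Fermi surface (radians); phi = 0 is taken to be an
             antinodal direction and phi = pi/4 a nodal one (assumed convention).
    kt     : thermal energy k_B T in meV.
    omega  : quasiparticle energy measured from the Fermi level, in meV.
    gamma0 : isotropic, temperature-independent part (e.g. impurities), in meV.
    gamma1 : dimensionless strength of the anisotropic part.
    """
    # Marginal-Fermi-liquid-like energy scale: linear in the larger of |omega| and k_B T.
    mfl_scale = max(abs(omega), kt)
    # d-wave-like angular factor: maximal at the antinode, vanishing at the node.
    return gamma0 + gamma1 * math.cos(2 * phi) ** 2 * mfl_scale


for kt in (5.0, 10.0, 20.0):
    antinode = scattering_rate(0.0, kt)
    node = scattering_rate(math.pi / 4, kt)
    print(f"k_B T = {kt:5.1f} meV: antinode {antinode:.2f} meV, node {node:.2f} meV")
```

In this form the anisotropy and the linear-in-T behaviour come from the same term, so scaling gamma1 with the transition temperature would mimic the ADMR observation; whether the microscopic mechanisms below actually produce this form is exactly the open question.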

This raises three important questions:

1. What is the physical origin of this scattering [and the associated self energy]? Superconducting, D-density wave, antiferromagnetic, or gauge fluctuations?

2. Is this scattering relevant to the superconductivity? i.e., do the same interactions produce the superconductivity and/or do these interactions make the metallic state unstable to superconductivity?

3. Is this relevant to formation of the pseudogap in the underdoped region? e.g., as the self energy increases in magnitude with decreasing doping does the pseudogap just result from new poles in the spectral function?

I focus here on possible answers to 1., as there are already some attempts to answer this question in the literature. Back in 1998, Ioffe and Millis published a PRB paper focusing on the phenomenology of such a scattering rate, but Section IV of their paper considered how superconducting fluctuations could produce an anisotropic scattering rate. They suggested that in the overdoped region the rate should scale with T^2, but it should be kept in mind that this depends on what assumptions one makes about the temperature dependence of the correlation length.

Walter Metzner and colleagues have been investigating D-density wave fluctuations near a quantum critical point associated with a Pomeranchuk instability [a PRL with Dell'Anna summarises the main results, including a scattering rate which scales with temperature]. Their starting point is an effective Hamiltonian which has a d-wave form factor built into it. This form is motivated by an earlier PRL in which they deduced, from renormalisation group flows for the Hubbard model, that the forward scattering acquires this d-wave form.

Maslov and Chubukov have also published papers on the subject, such as this PRB.

A key question is what experiments might provide a smoking gun to distinguish between the different possible origins of the scattering. I wonder whether the observed weak dependence of the scattering on magnetic field [at least up to 50 Tesla] may help.

Monday, November 22, 2010

A beast of an issue

If you don't think mental health problems will strike anyone you know, it is worth reading this column by Kathleen Noonan which appeared in our local newspaper a few weeks back.

Sunday, November 21, 2010

What is wrong with these colloquia?

Are you preparing a talk? There was a provocative article What's Wrong with Those Talks by David Mermin, published by Physics Today back in 1992. It is worth digesting, even if you do not agree with it. He does practice what he preaches. I remember him once reading out a PRL referee report in a talk on quasi-crystals (he claimed the referee was Linus Pauling). One of his main points is that we need to be very modest about what we can hope to achieve in a talk, particularly a colloquium. People will rarely complain if the talk is too basic and they understand most of it. The primary purpose is to help people understand why you thought the project was so interesting that you embarked on it.

A string theorist learns basic solid state physics

The Big Bang Theory seems to be getting better all the time, both in terms of humour and scientific content. This weekend we watched The Einstein Approximation (Season 3, Episode 14). Sheldon is obsessed with understanding how the electrons in graphene are "massless". His brain is stuck and so he seeks out a mind-numbing job that he hopes will remove this mental block [inspired by Einstein working in the Swiss patent office, hence the title]. Eventually, he realises the problem was that he was thinking of the electrons as particles rather than as waves diffracted off the hexagonal lattice formed by the carbon nuclei. The Big Blog Theory has a good discussion about graphene.

I could not find a clip on YouTube of the relevant scenes where the physics is discussed. Let me know if you know of one.

A synopsis is here.

Finally, I wonder if this episode helped sway the Nobel Committee?

Saturday, November 20, 2010

The Fermi surface of overdoped cuprates

When I was in Bristol a few months ago Nigel Hussey gave me a proof copy of a nice paper that has just appeared in the New Journal of Physics,

A detailed de Haas–van Alphen effect study of the overdoped cuprate Tl2Ba2CuO6+δ

by P M C Rourke, A F Bangura, T M Benseman, M Matusiak, J R Cooper, A Carrington and N E Hussey

It is part of a FOCUS ON FERMIOLOGY OF THE CUPRATES

Here are a few things I found particularly interesting and significant about the paper.

- 1. It is beautiful data!

- 2. Estimating the actual doping level and "band filling" in cuprates is a notoriously difficult problem. But measuring the dHvA oscillation frequency gives a very accurate measure of the Fermi surface area (via Onsager's relation). Luttinger's theorem then gives the doping level [see equation 15 in the paper].

- 3. The intralayer Fermi surface and anisotropy of the interlayer hopping determined are consistent with independent determinations from Angle-Dependent MagnetoResistance (ADMR) performed by Hussey's group earlier. This increases confidence in the validity of both methods. Aside: It should be stressed that both are bulk low-energy probes, whereas ARPES and STM are surface probes.

- 4. The effective mass m*(therm) determined from the temperature dependence of the oscillations agrees with that determined from the specific heat and from ARPES. This is approximately 3 times the band mass, showing the presence of strong correlations, even in overdoped systems. The effective mass does not vary significantly with doping [see my earlier post on this].

- 5. The presence of the oscillations put upper bounds on the amount of inhomogeneity in these samples, ruling out some claims concerning inhomogeneous doping.

- 6. The Figure above shows how well the observed Fermi surface agrees with that calculated from the Local Density Approximation (LDA) of Density Functional Theory (DFT). I think this means that the momentum dependence of the real part of the self energy on the Fermi surface must be small.

- 7. The "spin zeroes" allow one to determine the "effective mass" m*(susc) associated with the Zeeman splitting. [This could also be viewed as an effective g-factor g*.]

- A few small comments:

- a. The equation below relates the specific heat coefficient gamma to the effective mass determined from dHvA

- This is often derived for a parabolic band. It is not widely known that this is a very general relationship which holds for any quasi-two-dimensional band structure. Furthermore, the same relation follows directly from the formula for the effective mass in terms of the energy derivative of the area of the Fermi surface. Hence, taking that derivative numerically, as done by Rourke et al., is unnecessary. This is shown and discussed in detail in a paper I wrote with Jaime a decade ago, Cyclotron effective masses in layered metals.

- b. The ratio of the two effective masses, m*(susc) and m*(therm) equals the Sommerfeld-Wilson ratio, something I pointed out in a preprint [but was not able to publish] long ago.
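The bookkeeping in points 2 and a can be sketched in a few lines of Python. The Onsager relation F = (hbar/2 pi e) A_k converts a dHvA frequency into a k-space area; assuming a single spin-degenerate hole-like sheet on a square lattice, Luttinger's theorem gives A_k/A_BZ = (1 + p)/2. For a quasi-two-dimensional sheet, m* = (hbar^2/2 pi) dA/dE implies a density of states m*/(pi hbar^2) per unit area, and hence gamma = pi N_A k_B^2 a^2 m*/(3 hbar^2) per mole, whatever the band shape. The frequency and lattice constant below are illustrative inputs I have chosen, not numbers taken from Rourke et al., and this is not necessarily their equation 15.

```python
import math

# CODATA 2018 values (SI units)
E_CHARGE = 1.602176634e-19   # C
HBAR = 1.054571817e-34       # J s
K_B = 1.380649e-23           # J/K
N_A = 6.02214076e23          # 1/mol
M_E = 9.1093837015e-31       # kg


def fermi_surface_area(freq_tesla):
    """Onsager relation F = (hbar / 2 pi e) A_k, solved for the k-space area A_k (m^-2)."""
    return 2 * math.pi * E_CHARGE * freq_tesla / HBAR


def hole_doping(freq_tesla, a):
    """Luttinger count for one hole-like sheet on a square lattice of spacing a (m).

    Assumes the sheet encloses a fraction (1 + p) / 2 of the Brillouin zone
    (two spin states per k-point), so p = 2 A_k / A_BZ - 1.
    """
    area_bz = (2 * math.pi / a) ** 2
    return 2 * fermi_surface_area(freq_tesla) / area_bz - 1


def sommerfeld_gamma(m_star, a):
    """gamma = pi N_A k_B^2 a^2 m* / (3 hbar^2), in J mol^-1 K^-2.

    One quasi-2D sheet, per mole of formula units, assuming one conducting layer
    per formula unit with in-plane area a^2. m_star is in units of m_e.
    """
    return math.pi * N_A * K_B**2 * a**2 * m_star * M_E / (3 * HBAR**2)


a_lattice = 3.87e-10   # approximate in-plane lattice constant (assumed), m
freq = 18000.0         # illustrative dHvA frequency, tesla
print(f"A_k = {fermi_surface_area(freq):.3e} m^-2, p = {hole_doping(freq, a_lattice):.3f}")
print(f"gamma per unit m*/m_e = {1e3 * sommerfeld_gamma(1.0, a_lattice):.2f} mJ mol^-1 K^-2")
```

Because gamma depends on the band structure only through m*, comparing the thermodynamic gamma with the dHvA mass is a direct test of whether a single sheet accounts for all the low-energy states.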

Friday, November 19, 2010

Should debatable data generate theoretical hyper-activity?

At the physics colloquium today recent experimental data was highlighted that has been interpreted as evidence for dark matter. I thought this looked familiar and recalled I wrote an earlier blog post Trust but verify, urging caution. If one does a search on the arxiv with the words "dark matter AND positron AND FERMI" one finds more than one hundred papers, many proposing exotic theoretical scenarios.

It will be interesting to see in a decade whether all this theoretical hyper-activity was justified.

Thursday, November 18, 2010

Is chemical accuracy possible?

Seth Olsen and I had a nice discussion this week about Monkhorst's paper, Chemical physics without the Born-Oppenheimer approximation: The molecular coupled-cluster method, in which he emphasizes that corrections to the Born-Oppenheimer approximation can be as large as ten per cent. This raises important questions about the dreams and dogmas of computational quantum chemists. The goal is to calculate energies (especially heats of reaction, binding energies, and activation energies) to "chemical accuracy", which is about 1 kcal/mol or 0.04 eV, of the order of kBT at room temperature. This is much better than most methods can do.
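The unit bookkeeping behind "chemical accuracy" is easy to garble, so here is a small conversion sketch using exact SI-derived constants. It shows that 1 kcal/mol is about 0.043 eV, while kBT at room temperature is about 0.026 eV (roughly 0.6 kcal/mol), so the two are of the same order.

```python
# Exact SI-derived conversion factors
K_B_EV_PER_K = 8.617333262e-5      # Boltzmann constant, eV/K
KJ_PER_MOL_PER_EV = 96.48533212    # 1 eV per molecule in kJ/mol (e * N_A / 1000)
KJ_PER_KCAL = 4.184                # thermochemical kilocalorie


def kcal_per_mol_to_ev(energy_kcal_per_mol):
    """Convert a molar energy in kcal/mol to eV per molecule."""
    return energy_kcal_per_mol * KJ_PER_KCAL / KJ_PER_MOL_PER_EV


def ev_to_kcal_per_mol(energy_ev):
    """Convert eV per molecule to kcal/mol."""
    return energy_ev * KJ_PER_MOL_PER_EV / KJ_PER_KCAL


def thermal_energy_ev(temperature_k):
    """k_B T in eV."""
    return K_B_EV_PER_K * temperature_k


print(f"1 kcal/mol = {kcal_per_mol_to_ev(1.0):.4f} eV")
kt = thermal_energy_ev(298.15)
print(f"k_B T at 298.15 K = {kt:.4f} eV = {ev_to_kcal_per_mol(kt):.3f} kcal/mol")
```

Since a rate depends on an activation energy through exp(-E_a/kBT), an error of 1 kcal/mol in a barrier changes a room-temperature rate by roughly a factor of five, which is why this is the target.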

The claim of computational chemists is that it is just a matter of more computational power. In principle, if one uses a large enough basis set (for the atomic orbitals) and a sophisticated enough treatment of the electronic correlations, then one will converge on the correct answer. However, this is all done assuming the Born-Oppenheimer approximation and "clamped" nuclei.

But what about corrections to Born-Oppenheimer? Are there any specific cases of molecules for which any deviations between experiment and computations might be due to non-BOA corrections?

Furthermore, there is a tricky issue of "parametrising" and "benchmarking" functionals, pseudo-potentials, and results against experiment. How can one separate out effects due to correlations and those due to corrections to BOA?

How to do better on physics exams

Since I am marking many first-year exams, here are a few tips. They are all basic, but it is amazing and discouraging how few students do the following:

Clearly state any assumptions you make. Don't just write down equations. e.g., explicitly state, "Because of Newton's second law ..." or "we neglect transfer of heat to the environment."

Keep track of units at each stage of the calculation. Don't just add them in at the end. All physical quantities DO have units. If you make a mistake it will often show up in getting the wrong units.

Clearly state the answer you obtain. Maybe draw a box around it. e.g., "The change in entropy of the gas is 9.3 J/K".

Try and be neat and set out your work clearly. If you make a mess just cross out the whole section and rewrite it. I see many students' exam papers where I really have no idea what the student is doing. It is just a random collection of scribbles, equations, and numbers....

Think about the answers you get and whether they make physical sense. e.g., if you calculate that the efficiency of the turbine is 150 per cent or that the car is travelling at 1600 metres per second you have probably made a mistake!

Wednesday, November 17, 2010

Recommended summer school

Telluride Science Research Center runs some great programs each northern summer, mostly with a chemistry orientation. One I would recommend, especially to physics postdocs and graduate students who want to see how quantum and stat. mech. are relevant to chemistry, is the Telluride School of Theoretical Chemistry which will run July 10-16 next year.

Non-Markovian quantum dynamics in photosynthesis

Understanding how photosynthetic systems convert photons into separated charge is of fundamental scientific interest and relevant to the desire to develop efficient photovoltaic cells. Systematic studies could also provide a laboratory to test theories of quantum dynamics in complex environments, for reasons I will try and justify below. When I was at U. Washington earlier this year Bill Parson brought to my attention two very nice papers from the group of Neal Woodbury at Arizona State.

The Figures below are taken from the latter paper. I think the basic processes involved here are

P + H_A + photon -> P* + H_A -> P+ + H_A-

where a photon is absorbed by P, producing the excited state P* which then decays non-radiatively by transfer of an electron to a neighbouring molecule H_A. The graphs below show the electron population on P as a function of time, for a range of different temperatures and with different mutants of the protein.

The different mutants correspond to substituting amino acids which are located near the special pair. These change the relative free energy of the P+ state.

The simplest possible theory which might describe these experiments is Hush-Marcus theory. However, it predicts

* the decay should be exponential with a single decay constant

* the rate should decrease with decreasing temperature

* the activation energy for the rate should be smallest when the energy difference between the two charge states epsilon equals the environmental re-organisation energy.
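The three predictions above follow from the classical non-adiabatic Marcus-Hush rate, k = (2 pi/hbar) V^2 (4 pi lambda kBT)^(-1/2) exp[-(lambda - epsilon)^2/(4 lambda kBT)]. Here is a minimal Python sketch of that formula; the coupling V, reorganisation energy lambda and values of epsilon are made-up illustrative numbers, not parameters extracted from the Woodbury papers.

```python
import math

HBAR_EV_S = 6.582119569e-16   # reduced Planck constant, eV s
K_B_EV = 8.617333262e-5       # Boltzmann constant, eV/K


def activation_energy(epsilon, reorg):
    """Classical Marcus activation energy (eV): (lambda - epsilon)^2 / (4 lambda)."""
    return (reorg - epsilon) ** 2 / (4.0 * reorg)


def marcus_rate(coupling, epsilon, reorg, temperature):
    """Classical non-adiabatic Marcus-Hush rate (1/s).

    coupling    : electronic coupling V between the two charge states (eV)
    epsilon     : free-energy drop from initial to final state (eV, > 0 downhill)
    reorg       : environmental reorganisation energy lambda (eV)
    temperature : kelvin
    """
    kt = K_B_EV * temperature
    prefactor = (2.0 * math.pi / HBAR_EV_S) * coupling**2 / math.sqrt(4.0 * math.pi * reorg * kt)
    return prefactor * math.exp(-activation_energy(epsilon, reorg) / kt)


# Illustrative (made-up) parameters: V = 5 meV, lambda = 0.2 eV.
for eps in (0.05, 0.2, 0.35):
    rates = [marcus_rate(0.005, eps, 0.2, t) for t in (300.0, 100.0)]
    print(f"epsilon = {eps:.2f} eV: E_a = {activation_energy(eps, 0.2):.4f} eV, "
          f"k(300 K) = {rates[0]:.2e} /s, k(100 K) = {rates[1]:.2e} /s")
```

A single rate constant gives single-exponential decay by construction. Note that when epsilon = lambda the exponential factor drops out, and the only temperature dependence left in this classical expression is the weak T^(-1/2) prefactor.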

The above two papers contained several important results which are inconsistent with the simplest version of Hush-Marcus theory:

- The population does not exhibit simple exponential decay, but rather there are several different time scales, suggesting that the dynamics is non-Markovian, and may be correlated with the dynamics of the protein environment.

- The temperature dependence: the rate increases with decreasing temperature.

- A quantitative description of the data could be given in terms of a modification of Hush-Marcus to include the slow dynamics of the protein environment. This allows extraction of epsilon for the different mutants.
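One simple way that slow protein dynamics can produce non-exponential decay is the static-disorder limit. This is my assumption for illustration, not necessarily the model used by the Woodbury group. If the environment moves slowly compared to electron transfer, each protein sees a frozen value of epsilon and decays exponentially with its own Marcus rate, so the ensemble-averaged population is a superposition of exponentials. The sketch averages over a Gaussian distribution of epsilon; all parameter values are made up.

```python
import math

HBAR_EV_S = 6.582119569e-16   # eV s
K_B_EV = 8.617333262e-5       # eV/K


def marcus_rate(coupling, epsilon, reorg, temperature):
    """Classical non-adiabatic Marcus-Hush rate (1/s); energies in eV."""
    kt = K_B_EV * temperature
    prefactor = (2.0 * math.pi / HBAR_EV_S) * coupling**2 / math.sqrt(4.0 * math.pi * reorg * kt)
    return prefactor * math.exp(-((reorg - epsilon) ** 2) / (4.0 * reorg * kt))


def population(t, coupling, eps_mean, eps_width, reorg, temperature, n_points=201):
    """Survival probability of the initial state, averaged over static disorder.

    Assumes each protein has a fixed epsilon drawn from a Gaussian with mean
    eps_mean and standard deviation eps_width (slow-environment limit), and
    decays exponentially with its own Marcus rate.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for i in range(n_points):
        # Uniform quadrature grid over +/- 4 standard deviations.
        z = -4.0 + 8.0 * i / (n_points - 1)
        weight = math.exp(-0.5 * z * z)
        rate = marcus_rate(coupling, eps_mean + eps_width * z, reorg, temperature)
        weighted_sum += weight * math.exp(-rate * t)
        weight_total += weight
    return weighted_sum / weight_total


def apparent_rate(t, **params):
    """-ln P(t) / t: constant in time only for a single exponential."""
    return -math.log(population(t, **params)) / t


params = dict(coupling=0.005, eps_mean=0.15, eps_width=0.05, reorg=0.2, temperature=295.0)
for t_ps in (0.5, 1.0, 2.0, 5.0):
    print(f"t = {t_ps:4.1f} ps: P = {population(t_ps * 1e-12, **params):.3f}, "
          f"apparent rate = {apparent_rate(t_ps * 1e-12, **params):.2e} /s")
```

The apparent rate -ln P(t)/t decreases with time for any mixture of exponentials with different rates, so it is a convenient diagnostic. Distinguishing static disorder from genuinely dynamic (non-Markovian) coupling to the protein needs more than the decay curve alone, e.g. the temperature and mutant dependence discussed above.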

Later I will present my perspective on this....

Tuesday, November 16, 2010

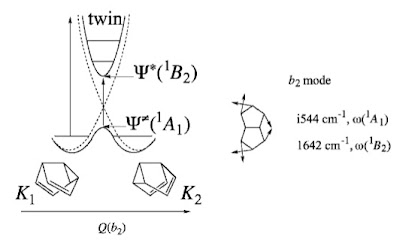

Finding the lost twin

This beautiful picture is on the cover of A Chemist's guide to Valence Bond Theory by Shaik and Hiberty. It summarises the main idea in a paper, The Twin-Excited State as a Probe for the Transition State in Concerted Unimolecular Reactions: The Semibullvalene Rearrangement.

It illustrates how the use of diabatic states (K1 and K2) based on chemical intuition can lead to adiabatic potential energy surfaces with complex structure. Furthermore, it illustrates the notion of an excited state (K1 - K2) which is a "twin state" to the ground state, K1+K2. The relevant vibrational frequency is higher in the excited state than in the ground state.
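The twin-state idea can be seen in the simplest two-state model: diabatic states K1 and K2 with energies E1 and E2 and a coupling beta. Assuming beta < 0 (the sign convention for which the in-phase combination is lower), at the symmetric geometry the ground state is (K1 + K2)/sqrt(2) and its "twin" (K1 - K2)/sqrt(2) lies 2|beta| above it. This is only a two-level caricature with arbitrary units, not the valence bond calculation in the paper.

```python
import math


def two_state(e1, e2, beta):
    """Diagonalise the 2x2 diabatic Hamiltonian [[e1, beta], [beta, e2]].

    Returns ((E_lower, (c1, c2)), (E_upper, (c1, c2))), where c1 and c2 are the
    amplitudes of the diabatic states K1 and K2 in each adiabatic state.
    """
    mean = 0.5 * (e1 + e2)
    half_gap = math.hypot(0.5 * (e1 - e2), beta)
    theta = 0.5 * math.atan2(2.0 * beta, e1 - e2)   # mixing angle
    upper = (math.cos(theta), math.sin(theta))
    lower = (-math.sin(theta), math.cos(theta))
    return (mean - half_gap, lower), (mean + half_gap, upper)


# Symmetric geometry with a negative (stabilising) coupling, in arbitrary units.
(e_g, ground), (e_x, twin) = two_state(0.0, 0.0, -1.0)
print(f"ground E = {e_g:+.3f}, c = ({ground[0]:+.3f}, {ground[1]:+.3f})")
print(f"twin   E = {e_x:+.3f}, c = ({twin[0]:+.3f}, {twin[1]:+.3f})")
```

Away from the symmetric geometry (E1 not equal to E2) the same function shows the ground state becoming dominated by the lower diabatic state, which is how the shape of the adiabatic surfaces is built up from the two diabatic ones.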

An earlier post discussed the analogous picture for benzene.

Sunday, November 14, 2010

Quantum decoherence in the brain on prime time TV

It is great how interesting physics still gets a mention in The Big Bang Theory. Last night my family watched The Maternal Congruence (Season 3, Episode 11). Leonard's mother [Beverley] comes for a visit and on the ride from the airport it turns out she and Sheldon have been in correspondence, unbeknown to Leonard. Here is the scene and dialogue I was delighted about:

Beverley: Yes, dear. Mommy’s proud. I’ve been meaning to thank you for your notes on my paper disproving quantum brain dynamic theory.

Sheldon: My pleasure. For a non-physicist, you have a remarkable grasp of how electric dipoles in the brain’s water molecules could not possibly form a Bose condensate.

Leonard: Wait, wait, wait. When did you send my mom notes on a paper?

On the Big Blog Theory, David Saltzberg [the UCLA Physics Professor who is a consultant to the show] discusses the "quantum dynamic brain theory" at length, mentions Roger Penrose, and says:

Quantum Brain Dynamicists entertain the idea that the same kind of condensate might exist in a living human brain, at normal body temperature. Does that sound pretty unlikely? It did to me. So I poked around a bit. The amount of published material in refereed scientific journals turns out to be small. Most of what I found about it was published on webpages and small publishers which is a red flag..... There are a few papers on these ideas published by Springer, a serious publisher of scientific work. Usually ideas about how the world works separate nicely into mainstream (even if speculative) versus crackpot. Here we find the distinction is not so clear.

Saltzberg's scepticism is justified. The case against Penrose is actually much stronger than Saltzberg suggests. Here I mention two papers I recently co-authored which discredit the "quantum dynamic brain theory."

Weak, strong, and coherent regimes of Fröhlich condensation and their applications to terahertz medicine and quantum consciousness published in Proceedings of the National Academy of Sciences (USA)

The Penrose-Hameroff Orchestrated Objective-Reduction Proposal for Human Consciousness is Not Biologically Feasible, published in Physical Review E.

Saturday, November 13, 2010

A grand challenge: calculate the charge mobility of a real organic material

Previously I have written several posts about charge transport in organic materials for plastic electronic and photovoltaic devices. This week I looked over two recent articles in Accounts of Chemical Research that discuss progress at the very ambitious task of using computer simulations to calculate/predict properties of real disordered molecular materials beginning with DFT calculations of specific molecules. I was pleased to see that Marcus-Hush electron transfer theory plays a central role in both papers,

Electronic Properties of Disordered Organic Semiconductors via QM/MM Simulations (from the group of Troy Van Voorhis at MIT).

Modeling Charge Transport in Organic Photovoltaic Materials (from Jenny Nelson's group at Imperial College London).

I have several questions and concerns about the latter paper.

The authors do a rather sophisticated simulation of a time-of-flight experiment: they put an accumulation of charge on one side of the sample, apply an electric field, measure the average charge velocity, and extract the mobility. Intermolecular hopping rates are given by Marcus-Hush theory with parameters extracted from DFT calculations.

1. Is this the most efficient and reliable way to calculate mobility?

Generally in solid state physics one uses the fluctuation-dissipation relation. In this case, the Einstein relation allows the mobility to be obtained from the diffusion constant. For hopping between equivalent nearest-neighbour molecules, the diffusion constant is just the intermolecular hopping rate times the square of the intermolecular distance.

2. The mobility computed depends on the thickness of the sample.

3. How was the calculation benchmarked? Does this method give reliable results for simpler systems, e.g., a naphthalene crystal?

4. The abstract makes some very strong claims,

"these computational methods simulate experimental mobilities within an order of magnitude at high electric fields. We ... reproduce the relative values of electron and hole mobility in a conjugated small molecule... We can reproduce the trends in mobility with molecular weight ... we quantitatively reproduce...On the basis of these results, we conclude that all of the necessary building blocks are in place for the predictive simulation of charge transport in macromolecular electronic materials and that such methods can be used as a tool toward the future rational design of functional organic electronic materials."

But when I look at the graph above it looks to me that the method often disagrees with experiment by more than an order of magnitude and fails to capture any electric field dependence. I could not find any discussion of temperature dependence.
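As a rough illustration of the Einstein-relation route in question 1, here is a sketch for the simplest possible case: hopping between equivalent molecules (epsilon = 0) at zero field, with a single classical Marcus-Hush rate. It assumes D = k a^2, with k the hopping rate to each nearest neighbour on a simple lattice, followed by mu = e D / kBT. The parameter values are made up, a real disordered material would need distributions of site energies and couplings, and this is not the method of either paper.

```python
import math

HBAR_EV_S = 6.582119569e-16   # eV s
K_B_EV = 8.617333262e-5       # eV/K


def marcus_rate(coupling, epsilon, reorg, temperature):
    """Classical non-adiabatic Marcus-Hush hopping rate (1/s); energies in eV."""
    kt = K_B_EV * temperature
    prefactor = (2.0 * math.pi / HBAR_EV_S) * coupling**2 / math.sqrt(4.0 * math.pi * reorg * kt)
    return prefactor * math.exp(-((reorg - epsilon) ** 2) / (4.0 * reorg * kt))


def einstein_mobility(coupling, reorg, distance_m, temperature):
    """Zero-field mobility in cm^2 V^-1 s^-1 for hopping between equivalent molecules.

    D = k a^2 (k = hopping rate to each nearest neighbour, a = intermolecular
    distance), then the Einstein relation mu = e D / (k_B T). Writing k_B T in eV
    makes the electron charge cancel, leaving mu in m^2 V^-1 s^-1.
    """
    rate = marcus_rate(coupling, 0.0, reorg, temperature)   # epsilon = 0: equivalent sites
    diffusion = rate * distance_m**2                         # m^2 / s
    mobility_si = diffusion / (K_B_EV * temperature)         # m^2 V^-1 s^-1
    return mobility_si * 1e4                                 # cm^2 V^-1 s^-1


# Made-up parameters of a plausible order for a molecular crystal.
for temperature in (200.0, 300.0):
    mu = einstein_mobility(coupling=0.01, reorg=0.2, distance_m=5e-10, temperature=temperature)
    print(f"T = {temperature:.0f} K: mu = {mu:.3f} cm^2/(V s)")
```

This gives a zero-field mobility and its temperature dependence directly, without simulating a finite sample, so it avoids the thickness dependence mentioned in question 2; what it cannot capture is the field dependence.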

Friday, November 12, 2010

Beyond Born-Oppenheimer

This morning I started to read a fascinating paper by Henrik Monkhorst,

It begins discussing a controversy I did not know about which

Later there is a fascinating discussion of the coupled cluster (CC) method. Note the superlatives in the last sentence.

Thursday, November 11, 2010

A sign of true love

My son and I just watched a great episode of The Big Bang Theory. It is called the Gorilla Experiment and in it Penny decides that she wants to learn some physics so she can talk to Leonard about his experiment testing the Aharonov-Bohm effect with electric fields. She begs Sheldon to teach her leading to this amusing scene.

Wednesday, November 10, 2010

Adiabatic, non-adiabatic, or diabatic?

The Diabatic Picture of Electron Transfer, Reaction Barriers, and Molecular Dynamics. This is the review article that we will be discussing at the cake meeting tomorrow. It is clear and helpful.

Adiabatic states are the eigenstates of the electronic Schrödinger equation in the Born-Oppenheimer approximation. There are several problems with them:

-they can vary significantly in character with changes in nuclear geometry [in particular they can be singular near conical intersections]

-they are not a good starting point for describing non-adiabatic processes such as transitions between potential energy surfaces

-they are hard to connect to chemically intuitive concepts such as valence bond and ionic states

Diabatic states overcome some of these problems. They do not vary significantly with changes in nuclear geometry.

However, determining them is non-trivial. The article describes these issues.
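To make the contrast concrete, here is a minimal sketch assuming a hypothetical two-state model: two displaced harmonic diabatic surfaces (as in Marcus theory) with a constant coupling between them. All parameter values are arbitrary. Diagonalizing the diabatic Hamiltonian at each nuclear coordinate x gives the adiabatic states; the printout shows the lower adiabatic state changing from almost pure diabatic state 1 to almost pure diabatic state 2 as x passes through the crossing, whereas the diabatic states are fixed by construction.

```python
import numpy as np

def diabatic_hamiltonian(x, k=1.0, a=1.0, coupling=0.05):
    """Two-state diabatic Hamiltonian at nuclear coordinate x (hypothetical model).

    Diagonal: two displaced harmonic (Marcus-like) diabatic surfaces.
    Off-diagonal: a constant electronic coupling.
    """
    v1 = 0.5 * k * (x + a) ** 2  # diabatic state 1 (e.g., charge on donor)
    v2 = 0.5 * k * (x - a) ** 2  # diabatic state 2 (e.g., charge on acceptor)
    return np.array([[v1, coupling], [coupling, v2]])

def adiabatic_states(x, **params):
    """Diagonalize H(x); eigenvalues are the adiabatic energies in ascending order."""
    energies, vectors = np.linalg.eigh(diabatic_hamiltonian(x, **params))
    return energies, vectors  # columns of `vectors` are the adiabatic states

for x in np.linspace(-2.0, 2.0, 9):
    energies, vectors = adiabatic_states(x)
    weight_1 = vectors[0, 0] ** 2  # weight of diabatic state 1 in the lower adiabatic state
    print(f"x = {x:+.1f}  E_lower = {energies[0]:.3f}  "
          f"gap = {energies[1] - energies[0]:.3f}  weight of state 1 = {weight_1:.3f}")
```

The smaller the coupling, the more abruptly the adiabatic character switches near the crossing, which is why adiabatic states are an awkward basis for describing transitions between surfaces.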

Tuesday, November 9, 2010

There is still a Kondo problem

It was nice having Peter Wölfle visit UQ the past few days and give a colloquium-style talk on the Kondo effect.

A nice accessible article which discusses the basics of the Kondo effect and how it occurs in quantum dots, carbon nanotubes, and "quantum corrals" is this 2001 Physics World article by Leo Kouwenhoven and Leonid Glazman.

In his talk Peter gave a nice discussion of the problem of the Kondo lattice (a lattice of localised spins interacting with a band of itinerant electrons). There is still an open question (originally posed by Doniach) of what happens in between the two limits of weak coupling (one expects magnetic ordering of the spins due to the RKKY interaction mediated by the itinerant electrons) and strong coupling (where the individual spins are Kondo screened by the itinerant electrons). Is there a quantum critical point? Does a non-Fermi liquid occur near it?
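Doniach's argument compares two energy scales as a function of the dimensionless coupling g = ρJ (ρ the density of states, J the Kondo exchange): the RKKY scale grows like a power, ρJ², while the Kondo temperature is exponentially small, T_K ~ D exp(-1/(ρJ)). Below is a minimal sketch, assuming energies in units of the bandwidth D and a hypothetical RKKY prefactor of 0.1; the numerical crossover depends on these O(1) constants, so only the qualitative picture is meaningful.

```python
import math

# Dimensionless Doniach-style estimate; energies in units of the bandwidth D.
C_RKKY = 0.1  # assumed O(1) prefactor for the RKKY scale (hypothetical)

def kondo_scale(g):
    """Single-impurity Kondo temperature, T_K ~ D exp(-1/g)."""
    return math.exp(-1.0 / g)

def rkky_scale(g):
    """RKKY interaction scale, T_RKKY ~ C * rho * J^2 = C * g^2 (units of D)."""
    return C_RKKY * g ** 2

def crossover_coupling(lo=0.01, hi=0.5, tol=1e-12):
    """Bisect for g_c where T_K = T_RKKY.

    ln(T_K / T_RKKY) = -1/g - ln(C) - 2 ln(g) increases monotonically for g < 0.5,
    so the bracket contains at most one root.
    """
    def log_ratio(g):
        return -1.0 / g - math.log(C_RKKY) - 2.0 * math.log(g)
    assert log_ratio(lo) < 0 < log_ratio(hi), "bracket does not contain the crossover"
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if log_ratio(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

g_c = crossover_coupling()
print(f"g_c = {g_c:.4f}")
for g in (0.5 * g_c, 1.5 * g_c):
    regime = "RKKY (magnetic order)" if rkky_scale(g) > kondo_scale(g) else "Kondo screening"
    print(f"g = {g:.3f}: T_K = {kondo_scale(g):.2e}, T_RKKY = {rkky_scale(g):.2e} -> {regime}")
```

The open question is precisely what this crude comparison cannot answer: what the ground state looks like near g_c.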

All of this is discussed in a nice review article Peter co-authored, Fermi liquid instabilities at magnetic phase transitions. It was very useful to Michael Smith and me when we wrote a PRL about possible Wiedemann-Franz violations at a quantum critical point.
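The Wiedemann-Franz law says that in a Fermi liquid the ratio of the electronic thermal conductivity κ to the electrical conductivity σ, divided by temperature T, is the universal Lorenz number L0 = (π²/3)(k_B/e)². A minimal sketch of that check follows; the function name and the choice to report L/L0 are illustrative, not the method used in the PRL.

```python
import math

# Exact SI values (2019 redefinition)
K_B = 1.380649e-23          # Boltzmann constant, J/K
E_CHARGE = 1.602176634e-19  # elementary charge, C

# Sommerfeld value of the Lorenz number for a Fermi liquid, W Ohm / K^2
LORENZ_0 = (math.pi ** 2 / 3) * (K_B / E_CHARGE) ** 2

def lorenz_ratio(kappa, sigma, temperature):
    """Return L / L0 with L = kappa / (sigma * T).

    kappa: electronic thermal conductivity, W / (m K)
    sigma: electrical conductivity, S / m
    temperature: K
    A ratio of 1 means the Wiedemann-Franz law holds; deviations signal a violation.
    """
    return kappa / (sigma * temperature) / LORENZ_0

print(f"L0 = {LORENZ_0:.4e} W Ohm K^-2")
```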

If you want a discussion of the RKKY-Kondo competition from the point of view of quantum entanglement, see this PRA paper Sam Cho and I wrote.

