Monday, December 30, 2013

My most popular blogposts for 2013

According to Blogspot, here are the six most popular posts from this blog over the past year:

Effective "Hamiltonians" for the stock market

Thirty years ago in Princeton

Relating non-Fermi liquid transport properties to thermodynamics

Mental health talk in Canberra

What simple plotting software would you recommend?

A political metaphor for the correlated electron community

Thanks to my readers, particularly to those who write comments.

Best wishes for the New Year!

Friday, December 27, 2013

What product is this?

Previously I posted about a laundry detergent that had "Vibrating molecules" [TradeMark].

Here is a product my son recently bought that benefits from "Invisible science" [TradeMark].

I welcome guesses as to what the product is.

Monday, December 23, 2013

Encouraging undergraduate research

The American Physical Society has prepared a draft statement calling on all universities "to provide all physics and astronomy majors with significant research experiences". The statement is worth reading because of its claims and documentation about some of the benefits of such experiences. In particular, such experiences can better prepare students for a broad range of career options. I agree.

However, I add some caveats. I think there are two dangers that one should not ignore.

First, departments need to be diligent in ensuring that students are not just used as "cheap labour" for some faculty research. Earlier I posted about What makes a good undergraduate research project?, which attracted several particularly insightful comments.

Second, such undergraduate research experiences are not a substitute for an advanced undergraduate laboratory. APS News recently ran a passionate article, Is there a future for the Advanced Lab? by Jonathan Reichert. It is very tempting for university "bean counters" to propose saving money by replacing expensive advanced teaching labs with students working in research groups instead. Students need both experiences.

Wednesday, December 18, 2013

An acid test for theory

Just because you read something in a chemistry textbook does not mean you should believe it. Basic [pun!] questions about what happens to H+ ions in acids remain outstanding. Is the relevant unit H3O+, H5O2+ [Zundel cation], H9O4+ [Eigen cation], or something else?

There is a fascinating Accounts of Chemical Research article:

Myths about the Proton. The Nature of H+ in Condensed Media

Christopher A. Reed

Here are a few highlights.

H+ must be solvated and is nearly always di-ordinate, i.e. "bonded" to two units. H3O+ is rarely seen.

"In contrast to the typical asymmetric H-bond found in proteins (N–H···O) or ice (O–H···O), the short, strong, low-barrier (SSLB) H-bonds found in proton disolvates, such as H(OEt2)2+ and H5O2+, deserve much wider recognition." This is particularly interesting because quantum nuclear effects are important in these SSLB H-bonds.

"A poorly understood feature of the IR spectra of proton disolvates in condensed phases is that IR bands associated with groups adjacent to H+, such as νCO in H(Et2O)2+ or νCO and δCOH in H(CH3OH)8+,(50) lose much of their intensity or disappear entirely.(51)"

So is it Zundel or Eigen? Neither, in wet organic solvents:

"Contrary to general expectation and data from gas phase experiments, neither the trihydrated Eigen ion, H3O+·(H2O)3, nor the tetrahydrated Zundel ion, H5O2+·(H2O)4, is a good representation of H+ when acids ionize in wet organic solvents, .... Rather, the core ion structure is H7O3+ ...."

"The H7O3+ ion has its own unique and distinctive IR spectrum that allows it to be distinguished from alternate formulations of the same stoichiometry, namely, H3O+·2H2O and H5O2+·H2O.(58) It has its own particular brand of low-barrier H-bonding involving the 5-atom O–H–O–H–O core and it has somewhat longer O···O separations than in the H5O2+ ion."

What happens in water? The picture is below.

This is based on the distinctive infrared absorption spectra shown below.

What is particularly interesting is the "continuous broad absorption" (cba) shown in blue. This is very unusual for a chemical IR spectrum. Reed says "Theory has not reproduced the cba, but it appears to be the signature of delocalized protons whose motion is faster than the IR time scale."

I don't quite follow this argument. The protons are delocalised within the flat potential of the SSLB H-bond, but there is a well-defined zero-point energy associated with this. The issue may be more how this quantum state couples to other fluctuations in the system.

There is a 2011 theory paper by Xu, Zhang, and Voth [unreferenced by Reed] that does claim to explain the "continuous broad absorption". Also, the Slovenian work highlighted in my post Hydrogen bonds fluctuate like crazy should be relevant. The key physics may be that for O-O distances of about 2.5-2.6 Å the O-H stretch frequency varies between 1500 and 2500 cm-1. Thus, even small fluctuations in the O-O distance produce a large line width.
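To see why a steep frequency versus distance relation turns small structural fluctuations into a broad band, here is a rough sketch. It assumes, purely for illustration, that the O-H stretch frequency varies linearly between the two endpoints quoted above (real correlation curves are curved), that shorter O-O distances give lower frequencies, and that the O-O distance fluctuates as a Gaussian with a hypothetical spread of 0.02 Å. The slope is then about 10,000 cm-1 per Å, so the frequency spread comes out near 200 cm-1; the direction of the slope does not affect the width.

```python
import random
import statistics

# Endpoints quoted in the post; the straight line between them is an assumption
# (real frequency vs. O-O distance correlations are curved).
R_SHORT, R_LONG = 2.5, 2.6          # O-O distance, angstrom
NU_SHORT, NU_LONG = 1500.0, 2500.0  # O-H stretch frequency, cm^-1 (shorter O-O -> lower frequency)

def stretch_frequency(r_oo):
    """Linearly interpolated O-H stretch frequency (cm^-1) at O-O distance r_oo (angstrom)."""
    slope = (NU_LONG - NU_SHORT) / (R_LONG - R_SHORT)  # roughly 10,000 cm^-1 per angstrom
    return NU_SHORT + slope * (r_oo - R_SHORT)

def frequency_spread(r_mean, r_sigma, n_samples=20_000, seed=0):
    """Standard deviation of the stretch frequency when r_oo fluctuates as a
    Gaussian with mean r_mean and width r_sigma (hypothetical model)."""
    rng = random.Random(seed)
    freqs = [stretch_frequency(rng.gauss(r_mean, r_sigma)) for _ in range(n_samples)]
    return statistics.pstdev(freqs)

# A hypothetical 0.02 angstrom spread in the O-O distance:
print(f"{frequency_spread(2.55, 0.02):.0f} cm^-1")
```

Because the assumed mapping is linear, the sampled spread is just the slope times the distance spread; a curved mapping would also skew the band shape.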

Repeating the experiments in heavy water or deuterated acids would put more constraints on possible explanations since quantum effects in H-bonding are particularly sensitive to isotope substitution and the relevant O-O bond lengths.

[I am just finishing a paper on this subject (see my Antwerp talk for a preview)].

Tuesday, December 17, 2013

Skepticism should be the default reaction to exotic claims

A good principle in science is "extraordinary claims require extraordinary evidence", i.e. the more exotic and unexpected the claimed new phenomenon, the stronger the evidence needs to be before it is taken seriously. A classic case is the recent CERN experiment claiming to show that neutrinos could travel faster than the speed of light. Surely it wasn't too surprising when it was found that the problem was one of detector calibration. Nevertheless, that did not stop many theorists from writing papers on the subject. Another case is claims of "quantum biology".
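One common way to make "extraordinary claims require extraordinary evidence" quantitative is Bayes' theorem in odds form: posterior odds equal prior odds times the Bayes factor (how much more likely the data are if the claim is true). The sketch below is an illustration of that principle, not something from the post; the priors and Bayes factors are hypothetical. The same evidence (a Bayes factor of 1000) takes a mundane claim with even prior odds to about 0.999, but leaves an exotic claim with a prior of one in a million at about 0.001.

```python
def posterior_probability(prior, bayes_factor):
    """Update the probability of a claim given the Bayes factor of the evidence."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * bayes_factor
    return posterior_odds / (1.0 + posterior_odds)

def bayes_factor_needed(prior, target=0.5):
    """Strength of evidence needed to lift a claim from `prior` to `target` probability."""
    prior_odds = prior / (1.0 - prior)
    target_odds = target / (1.0 - target)
    return target_odds / prior_odds

# Same (hypothetical) evidence, very different conclusions:
print(posterior_probability(0.5, 1000))   # mundane claim
print(posterior_probability(1e-6, 1000))  # exotic claim
print(bayes_factor_needed(1e-6))          # evidence needed just to reach even odds
```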

About a decade ago some people tried to get me interested in some anomalous experimental results concerning deep inelastic scattering of neutrons off condensed phases of matter. They claimed to have evidence for quantum entanglement between protons on different molecules on very short time scales and [in later papers] to detect the effects of decoherence on this entanglement. An example is this PRL, which has more than 100 citations, including exotic theory papers stimulated by the "observation."

Many reasons led me not to get involved: mundane debates about detector calibration, a strange conversation with one of the protagonists, discussing with Roger Cowley his view that the theoretical interpretation of the experiments was flawed, and, of course, "extraordinary claims require extraordinary evidence"....

Some of the main protagonists have not given up. But the paper below is a devastating critique of their most recent exotic claims.

Spurious indications of energetic consequences of decoherence at short times for scattering from open quantum systems by J. Mayers and G. Reiter

Mayers is the designer and builder of the relevant spectrometer. Reiter is a major user. Together they have performed many nice experiments imaging proton probability distributions in hydrogen-bonded systems.

They argue that the unexpected deviations of a few per cent from conventional theory, which are presented as "evidence", simply arise from incorrect calibration of the instrument.

Monday, December 16, 2013

Should you judge a paper by the quality of its referencing?

No.

Someone can write a brilliant paper and yet poorly reference previous work.

On the other hand, one can write a mediocre or wrong paper and reference previous work in a meticulous manner.

But, I have to confess I find I sometimes do judge a paper by the quality of the referencing.

I find there is often a correlation between the quality of the referencing and the quality of the science. Perhaps this correlation should not be surprising, since both reflect how meticulous the authors' scholarship is.

If I am sent a paper to referee, I often find the following happens. I desperately search the abstract and the figures to find something new and interesting. If I don't find it, I find that I subconsciously start to scan the references. This sometimes tells me a lot.

Here are some of the warning signs I have noticed over the years.

Lack of chronological diversity.

Most fields have progressed over many decades.

Yet some papers will only reference papers from the last few years, or may ignore work from the last few years. Some physicists seem to think that work on quantum coherence in photosynthesis began in 2007. I once reviewed a paper where the majority of the references were more than 40 years old. Perhaps for some papers that might be appropriate, but it certainly wasn't for that one.

Crap markers. I learnt this phrase from the late Sean Barrett. It refers to using dubious papers to justify more dubious work.

For me an example would be "Engel et al. have shown that quantum entanglement is crucial to the efficiency of photosynthetic systems [Nature 2007]."

Lack of geographic and ethnic diversity. This sounds politically incorrect! But that is not the issue. Most interesting fields attract good work from around the world. Yet I have reviewed papers where more than half the references were from the same country as the authors. Perhaps that might be appropriate for some work, but it certainly wasn't for that one. It ignored relevant and significant work from other countries.

Gratuitous self-citation.

Missing key papers or not including references with different points of view.

Citing papers with contradictory conclusions without acknowledging the inconsistency.

I once reviewed a paper with a title like "Spin order in compound Y", yet it cited in support a paper with a title like "Absence of spin order in compound Y"!
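Some of these warning signs are mechanical enough to screen for automatically. The sketch below is a hypothetical illustration, not a tool I use: the `Reference` fields and every threshold (5 years, 40 years, one half, one third) are assumptions chosen to mirror the examples above. Missing key papers and contradictory citations still need a human who knows the field.

```python
from dataclasses import dataclass

@dataclass
class Reference:
    year: int
    country: str          # country of the cited group (hypothetical field)
    shares_author: bool   # True if the cited paper shares an author with the manuscript

def referencing_warning_signs(refs, paper_year, authors_country):
    """Flag some warning signs in a reference list.

    All thresholds below are illustrative assumptions, not rules.
    """
    if not refs:
        return ["no references"]
    n = len(refs)
    ages = [paper_year - r.year for r in refs]
    signs = []
    if max(ages) <= 5:
        signs.append("only recent work cited")
    if min(ages) > 5:
        signs.append("recent work ignored")
    if sum(age > 40 for age in ages) > n / 2:
        signs.append("majority of references more than 40 years old")
    if sum(r.country == authors_country for r in refs) > n / 2:
        signs.append("more than half the references from the authors' country")
    if sum(r.shares_author for r in refs) > n / 3:
        signs.append("heavy self-citation")
    return signs

refs = [Reference(2012, "AU", True), Reference(2011, "AU", True), Reference(2010, "US", False)]
print(referencing_warning_signs(refs, 2013, "AU"))
```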

When I started composing this post I felt guilty and superficial about confessing my approach. The quality of the referencing does not determine the quality of the paper.

So why do I keep doing this?

Shouldn't I just focus on the science?

The painful reality is that I have limited time that I need to budget efficiently.

I want to spend time reviewing a paper in proportion to its quality.

Often the references give me a quick and rough estimate of the quality of the paper.

The best papers I will work hard on, trying to engage with them carefully and to find robust reasons for endorsement and constructive suggestions for improvement.

At the other end of the spectrum, I just need to find some quick and concrete reasons for rejection.

But I should stress that I am not using the quality of referencing as a stand-alone criterion for accepting or rejecting a paper. I am just using it as a quick guide to how seriously I should consider the paper. Furthermore, this is only after I have failed to find something obviously new and important in the figures.

Am I too superficial?

Does anyone else follow a similar approach?

Other comments welcome.

Friday, December 13, 2013

Desperately seeking triplet superconductors

A sizeable amount of time and energy has been spent by the "hard condensed matter" community over the past quarter century studying unconventional superconductors. A nice recent review is by Mike Norman. In the absence of spin-orbit coupling, spin is a good quantum number and the Cooper pairs must be in either a spin singlet or a spin triplet state. Furthermore, in a crystal with inversion symmetry, spin singlets (triplets) are associated with even (odd) parity.
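The link between spin and parity comes from Fermi statistics: the full pair wavefunction must change sign when the two electrons are exchanged, and for a pair with zero total momentum exchanging positions is the same as inversion (k to -k). The small sketch below checks the spin half of that argument explicitly; it assumes the standard two-spin basis and ordinary (even-frequency) pairing, and the function names are hypothetical. It prints 1 (even parity) for the singlet and -1 (odd parity) for each triplet.

```python
import math

# Two-spin basis ordering: |up,up>, |up,down>, |down,up>, |down,down>
S = 1 / math.sqrt(2)
SINGLET = [0.0, S, -S, 0.0]
TRIPLETS = {
    "m=+1": [1.0, 0.0, 0.0, 0.0],
    "m=0": [0.0, S, S, 0.0],
    "m=-1": [0.0, 0.0, 0.0, 1.0],
}

def swap_spins(state):
    """Exchange the two spins: |s1,s2> -> |s2,s1> (swaps the middle two amplitudes)."""
    return [state[0], state[2], state[1], state[3]]

def exchange_sign(state):
    """Return +1 (symmetric) or -1 (antisymmetric) under spin exchange."""
    swapped = swap_spins(state)
    if all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(swapped, state)):
        return +1
    if all(math.isclose(a, -b, abs_tol=1e-12) for a, b in zip(swapped, state)):
        return -1
    raise ValueError("state is not an eigenstate of spin exchange")

def orbital_parity(state):
    """The full pair wavefunction is odd under exchange, so the orbital part
    (k -> -k) must carry the opposite sign (assumes even-frequency pairing)."""
    return -exchange_sign(state)

print("singlet orbital parity:", orbital_parity(SINGLET))
for label, triplet in TRIPLETS.items():
    print(f"triplet {label} orbital parity:", orbital_parity(triplet))
```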

Actually, pinning down the symmetry of the Cooper pairs from experiment turns out to be extremely tricky. In the cuprates the "smoking gun" experiments that showed they were really d-wave used cleverly constructed Josephson junctions, which allowed one to detect the phase of the order parameter and show that it changes sign as one moves around the Fermi surface.

How can one show that the pairing is spin triplet?

Perhaps the simplest way is to show that the material has an upper critical magnetic field that exceeds the Clogston-Chandrasekhar limit [often called the Pauli paramagnetic limit, but I think this is a misnomer].

In most type II superconductors the upper critical field is determined by orbital effects. When the magnetic field gets large enough, the spacing between the vortices in the Abrikosov lattice becomes comparable to the size of the vortices [determined by the superconducting coherence length in the directions perpendicular to the magnetic field]. This destroys the superconductivity. In a layered material the orbital upper critical field can become very large for fields parallel to the layers, because the interlayer coherence length can be of the order of the lattice spacing. Consequently, the superconductivity may instead be destroyed by the Zeeman effect breaking up the spin singlets of the Cooper pairs. This is the Clogston-Chandrasekhar paramagnetic limit.
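A rough way to see how anisotropy boosts the orbital limit is the anisotropic Ginzburg-Landau estimate: B_c2 = Φ0/(2π ξ²) for a field perpendicular to the layers and Φ0/(2π ξ_in ξ_inter) for a field parallel to them, where Φ0 = h/2e. The coherence lengths below (5 nm in-plane, 0.5 nm interlayer) are hypothetical round numbers, not values for any particular material, and the continuum formula becomes unreliable once ξ_inter is comparable to the layer spacing. They give roughly 13 T perpendicular and 130 T parallel, so for parallel fields the Zeeman effect can easily win.

```python
import math

PHI0 = 2.067833848e-15  # superconducting flux quantum h/2e, Wb

def orbital_bc2_parallel(xi_inplane, xi_interlayer):
    """Anisotropic Ginzburg-Landau orbital upper critical field (tesla) for a field
    parallel to the layers: Phi0 / (2 pi xi_inplane xi_interlayer). Lengths in metres."""
    return PHI0 / (2 * math.pi * xi_inplane * xi_interlayer)

def orbital_bc2_perpendicular(xi_inplane):
    """Same estimate for a field perpendicular to the layers: Phi0 / (2 pi xi_inplane^2)."""
    return PHI0 / (2 * math.pi * xi_inplane**2)

xi_ab, xi_c = 5e-9, 0.5e-9  # hypothetical coherence lengths: 5 nm in-plane, 0.5 nm interlayer
print(f"B_c2 perpendicular ~ {orbital_bc2_perpendicular(xi_ab):.0f} T")
print(f"B_c2 parallel      ~ {orbital_bc2_parallel(xi_ab, xi_c):.0f} T")
```

The ratio of the two fields is simply the coherence-length anisotropy, here a factor of 10.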

How big is this magnetic field?

In a singlet superconductor the Cooper pairs cannot be spin polarised, so relative to the metallic state the superconductor loses a Zeeman energy of ~$\chi_s B^2/2$, where $\chi_s$ is the magnetic [Pauli spin] susceptibility in the metallic phase. Once the magnetic field is large enough that this exceeds the superconducting condensation energy, superconductivity becomes unstable. Normally, these two quantities are compared within BCS theory, and one finds the "Pauli limit" $\mu_0 H_P = \Delta_0/(\sqrt{2}\,\mu_B) \approx 1.8\ \mathrm{T\,K^{-1}} \times T_c$, for g = 2 and the BCS gap $\Delta_0 = 1.76\, k_B T_c$. This means that for $T_c = 10$ K the paramagnetic limit is about 18 Tesla.

Some people then use this criterion: an upper critical field exceeding it is taken as evidence for spin-triplet pairing.
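The BCS arithmetic can be checked directly. This sketch assumes g = 2 and the weak-coupling gap Δ0 = 1.764 k_B T_c (the gap ratio is a parameter, so strong-coupling values can be tried). It gives about 1.86 T per kelvin, i.e. about 18.6 T for T_c = 10 K, consistent with the rounded numbers above.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
MU_B = 9.2740100783e-24  # Bohr magneton, J/T

def bcs_pauli_limit(tc_kelvin, gap_ratio=1.764):
    """Clogston-Chandrasekhar field (tesla), Delta_0 / (sqrt(2) mu_B), assuming g = 2
    and a BCS gap Delta_0 = gap_ratio * k_B * T_c."""
    delta0 = gap_ratio * K_B * tc_kelvin
    return delta0 / (math.sqrt(2) * MU_B)

print(f"{bcs_pauli_limit(10.0):.1f} T for Tc = 10 K")
print(f"{bcs_pauli_limit(1.0):.2f} T per kelvin of Tc")
```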

However, in 1999 I realised that one could estimate the upper critical field independent of BCS theory, using just the measured values of the spin susceptibility and condensation energy. In this PRB my collaborators and I showed that for the organic charge transfer salt kappa-(BEDT-TTF)2Cu(SCN)2 the observed upper critical field of 30 Tesla agreed with the theory-independent estimate. In contrast, the BCS estimate was 18 Tesla. Thus, the experiment was consistent with singlet superconductivity.
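The theory-independent estimate simply equates the two measured energies. In SI units, with a dimensionless volume susceptibility χ_s, the Zeeman energy density is χ_s B²/(2μ0), so the limiting field is B_P = sqrt(2 μ0 E_c / χ_s), where E_c is the condensation energy density. The sketch below is a minimal illustration of that relation; the input numbers are hypothetical and are not the values used in the PRB.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability, T m/A (the classic value, accurate to ~1e-10)

def thermodynamic_pauli_limit(condensation_energy, chi_spin):
    """Field (tesla) at which the Pauli spin energy chi B^2 / (2 mu0) of the metal
    equals the superconducting condensation energy.

    condensation_energy: J/m^3; chi_spin: dimensionless SI volume susceptibility.
    """
    return math.sqrt(2.0 * MU0 * condensation_energy / chi_spin)

# Hypothetical inputs: chi_s = 1e-4 and a condensation energy of 3.6e4 J/m^3.
print(f"{thermodynamic_pauli_limit(3.6e4, 1e-4):.0f} T")
```

No BCS relation between the gap, T_c, and the density of states enters; only the two measured quantities do.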

Nevertheless, exceeding the Clogston-Chandrasekhar paramagnetic limit is a first hint that one might have a triplet superconductor. Indeed this was the case for the heavy fermion superconductor UPt3, but not for Sr2RuO4. Recent phase-sensitive Josephson junction measurements have shown that both these materials have odd-parity superconductivity, consistent with triplet pairing. A recent review considered the status of the evidence for triplet odd-parity pairing and the possibility of topological superconductivity in Sr2RuO4.

A PRL from Nigel Hussey's group last year reported measurements of the upper critical field for the quasi-one-dimensional material Li0.9Mo6O17. They found an upper critical field of 8 Tesla, compared to 5 Tesla and 4 Tesla for the thermodynamic and BCS estimates of the paramagnetic limit, respectively.

Thus, this material could be a triplet superconductor.

[Aside: this material has earlier attracted considerable attention because it has a very strange metallic phase, as reviewed here.]

A challenge is to now come up with more definitive experimental signatures of the unconventional superconductivity. Given the history of UPt3 and Sr2RuO4, this could be a long road... but a rich journey....

Thursday, December 12, 2013

Chemical bonding, blogs, and basic questions

Roald Hoffmann and Sason Shaik are two of my favourite theoretical chemists. They have featured in a number of my blog posts. I particularly appreciate their concern with using computations to elucidate chemical concepts.

In Angewandte Chemie there is a fascinating article, One Molecule, Two Atoms, Three Views, Four Bonds that is written as a three-way dialogue including Henry Rzepa.

The simple (but profound) scientific question they address concerns how to describe the chemical bonding in the molecule C2 [i.e. a diatomic molecule of carbon]. In particular, does it involve a quadruple bond?

The answer seems to be yes, based on a full CI [configuration interaction] calculation that is then projected down to a Valence Bond wave function.

The dialogue is very engaging and the banter back and forth includes interesting digressions such as the role of Rzepa's chemistry blog, learning from undergraduates, the relative merits of molecular orbital theory and valence bond theory, the role of high-level quantum chemical calculations, and why Hoffmann is not impressed by the Quantum Theory of Atoms in Molecules.

Tuesday, December 10, 2013

Science is broken II

This week three excellent articles have been brought to my attention that highlight current problems with science and academia. The first two are in the Guardian newspaper.

How journals like Nature, Cell and Science are damaging science

The incentives offered by top journals distort science, just as big bonuses distort banking

Randy Schekman, a winner of the 2013 Nobel prize for medicine.

Peter Higgs: I wouldn't be productive enough for today's academic system

Physicist doubts work like Higgs boson identification achievable now as academics are expected to 'keep churning out papers'

How academia resembles a drug gang is a blog post by Alexandre Afonso, a lecturer in Political Economy at King's College London. He takes off from the fascinating chapter in Freakonomics, "Why drug dealers still live with their moms." They do so because they all hope to make the big time and eventually become head of the drug gang. Academia has a similar hierarchical structure, with a select few tenured and well-funded faculty who lead large "gangs" of Ph.D. students, postdocs, and "adjunct" faculty. The latter soldier on in the slim hope that one day they will make the big time... just like the gang leader.

The main point of Afonso's post is to highlight some of the personal injustices of the current system. That is worth a separate post. What does this have to do with good/bad science? This situation is a result of the problems highlighted in the two Guardian articles. In particular, many of these "gangs" are poorly supervised and produce low-quality science. This is due to the emphasis on quantity and marketability, rather than quality and reproducibility.

Monday, December 9, 2013

Effect of frustration on the thermodynamics of a Mott insulator

When I recently gave a talk on bad metals in Sydney at the Gordon Godfrey Conference, Andrey Chubukov and Janez Bonca asked some nice questions that stimulated this post.

The main question that the talk is trying to address is: what is the origin of the low temperature coherence scale T_coh associated with the crossover from a bad metal to a Fermi liquid?

In particular, T_coh is much less than the Fermi temperature of the non-interacting band structure of the relevant Hubbard model [on an anisotropic triangular lattice at half filling].

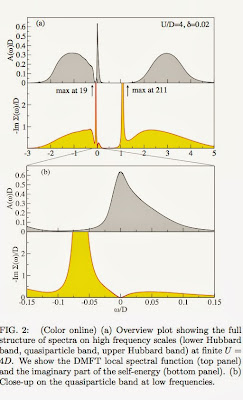

Here is the key figure from the talk [and the PRL written with Jure Kokalj].

It shows the temperature dependence of the specific heat for different values of U/t on the isotropic triangular lattice (t'=t). Below T_coh, the specific heat becomes approximately linear in temperature. For U=6t, which is near the Mott insulator transition, T_coh ~ t/20. Thus, a low energy scale emerges.

Note that well into the Mott phase [U=12t] there is a small peak in the specific heat versus temperature. This is also seen in the corresponding Heisenberg model and corresponds to spin-waves associated with short-range antiferromagnetic order.

So here are the further questions.

What is the effect of frustration?

How does T_coh compare to the antiferromagnetic exchange J=4t^2/U?

The answers are in the Supplementary Material of the PRL. [I should have had them as back-up slides for the talk.] The first figure shows the specific heat for U=10t and different values of the frustration.

t'=0 [red curve] corresponds to the square lattice [no frustration] and t'=t [green dot-dashed curve] corresponds to the isotropic triangular lattice.

The antiferromagnetic exchange constant J=4t^2/U is shown on the horizontal axis. For the square lattice there is a very well-defined peak at a temperature of order J. However, as the frustration increases, the peak shrinks significantly and shifts to a much lower temperature.

This reflects the absence of well-defined spin excitations in the frustrated system.
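Where does J=4t^2/U come from, and how accurate is it at the values of U/t discussed here? A minimal check, which is my own illustration and not part of the PRL, is the two-site Hubbard model at half filling: there the singlet-triplet splitting can be found exactly and compared with 4t^2/U. The sketch assumes the standard two-site model with hopping t and on-site repulsion U; the function names are hypothetical.

```python
import math


def dimer_singlet_triplet_gap(t, U):
    """Exact singlet-triplet splitting of the half-filled two-site Hubbard model.

    The triplet cannot hop (Pauli blocking), so its energy is 0. In the singlet
    sector the covalent singlet couples to the symmetric ionic state through the
    2x2 matrix [[0, -2t], [-2t, U]], whose lower eigenvalue is
    (U - sqrt(U^2 + 16 t^2)) / 2.
    """
    e_singlet = 0.5 * (U - math.sqrt(U * U + 16.0 * t * t))
    e_triplet = 0.0
    return e_triplet - e_singlet


def heisenberg_exchange(t, U):
    """Strong-coupling (second order in t/U) exchange constant J = 4t^2/U."""
    return 4.0 * t * t / U


t = 1.0
for U in (2.0, 6.0, 10.0, 12.0, 50.0):
    gap = dimer_singlet_triplet_gap(t, U)
    J = heisenberg_exchange(t, U)
    print(f"U/t = {U:5.1f}  exact gap = {gap:.4f} t  4t^2/U = {J:.4f} t  ratio = {gap / J:.3f}")
```

In this toy model the exact splitting is always below 4t^2/U and approaches it as U/t grows, so J=4t^2/U should be read as a strong-coupling approximation that improves deeper in the Mott regime. It is a two-site caricature, not the lattice calculation in the PRL.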

The significant effect of frustration is also seen in the entropy versus temperature shown below. [The colour labels are the same.] At low temperatures frustration greatly increases the entropy, reflecting the existence of weakly interacting local magnetic moments.
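The entropy and specific heat curves are two views of the same thermodynamics, linked by S(T) = S(T_0) + ∫ C(T')/T' dT' taken from T_0 to T. A minimal sketch, assuming C is tabulated per site in units of k_B on an increasing temperature grid, integrates C/T with the trapezoid rule. It is checked on a Fermi-liquid-like C = γT, for which S(T) - S(T_0) = γ(T - T_0), and on a two-level (Schottky) system, whose total entropy release is ln 2, the same as a free spin-1/2 local moment. The grid and test functions are illustrative assumptions, not the FTLM data from the PRL.

```python
import math


def entropy_change(T, C):
    """Return S(T_i) - S(T_0) for each i by integrating C(T')/T' with the trapezoid rule.

    T: strictly positive, increasing temperatures.
    C: specific heat per site (units of k_B) at those temperatures.
    """
    if len(T) != len(C):
        raise ValueError("T and C must have the same length")
    S = [0.0]
    for i in range(1, len(T)):
        f_prev = C[i - 1] / T[i - 1]
        f_curr = C[i] / T[i]
        S.append(S[-1] + 0.5 * (f_prev + f_curr) * (T[i] - T[i - 1]))
    return S


def schottky_specific_heat(T, gap=1.0):
    """Specific heat of a two-level system with level splitting `gap` (k_B = 1)."""
    x = gap / T
    return x * x * math.exp(-x) / (1.0 + math.exp(-x)) ** 2


# Logarithmic temperature grid from 0.01 to 100, in units of the two-level gap.
N = 4000
T_grid = [0.01 * 10.0 ** (4.0 * i / (N - 1)) for i in range(N)]
S_two_level = entropy_change(T_grid, [schottky_specific_heat(T) for T in T_grid])
print(f"entropy released: {S_two_level[-1]:.4f}  (ln 2 = {math.log(2):.4f})")
```

Applied to tabulated specific heat data, the same integral shows directly how much of the ln 2 per site of a spin-1/2 moment is still unreleased at a given temperature.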

Friday, December 6, 2013

I don't want this blog to become too popular!

This past year I have been surprised and encouraged that this blog has a wide readership. However, I have also learnt that I don't want it to become too popular.

A few months ago, when I was visiting Columbia University I met with Peter Woit. He writes a very popular blog, Not Even Wrong, that has become well known, partly because of his strong criticism of string theory. It is a really nice scientific blog, mostly focusing on elementary particle physics and mathematics. The comments generate some substantial scientific discussion. However, it turns out that the popularity is a real curse. A crowd will attract a bigger crowd. The comments section attracts two undesirable audiences. The first are non-scientists who have their own "theory of everything" that they wish to promote. The second "audience" consists of robots that leave "comments" containing links to dubious commercial websites. Peter has to spend a substantial amount of time each day monitoring these comments, deleting them, and finding automated ways to block them. I am very thankful I don't have these problems. Occasionally, I get random comments with commercial links. I delete them manually. I did not realise that they may be generated by robots.

Due to the robot problem, Peter said he thought the pageview statistics provided by blog hosts [e.g. Blogspot or WordPress] were a gross over-estimate. I could see that this would be the case for his blog. However, I suspect this is not the case for mine. Blogspot claims a typical post of mine gets 50-200 page views. This seems reasonable to me. Furthermore, the numbers for individual posts tend to scale with the number of comments and the anticipated breadth of the audience [e.g. journal policies and mental health attract more interest than hydrogen bonding!]. Hence, I will still take the stats as a somewhat reliable guide to interest and influence.

Thursday, December 5, 2013

Quantum nuclear fluctuations in water

Understanding the unique properties of water remains one of the outstanding challenges in science today. Most discussions and computer simulations of pure water [and its interactions with biomolecules] treat the nuclei as classical. Furthermore, the hydrogen bonds are classified as weak. Increasingly, these simple pictures are being questioned. Water is quantum!

There is a nice PNAS paper Nuclear quantum effects and hydrogen bond fluctuations in water

Michele Ceriotti, Jerome Cuny, Michele Parrinello, and David Manolopoulos

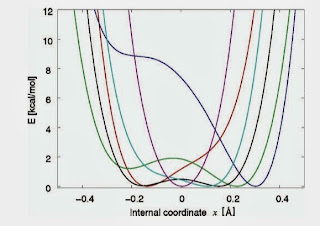

The authors perform path integral molecular dynamics simulations where the nuclei are treated quantum mechanically, moving on potential energy surfaces that are calculated "on the fly" from density functional theory based methods using the Generalised Gradient Approximation. A key technical advance is using an approximation for the path integrals (PI) based on a mapping to a Generalised Langevin Equation [GLE] [PI+GLE=PIGLET!].
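Why can't the protons be treated classically at room temperature? A back-of-the-envelope sketch compares the vibrational quantum ħω with k_BT. The frequencies used below (roughly 3400 cm^-1 for the O-H stretch in the liquid, and 200 cm^-1 for a low-frequency intermolecular mode) are assumed round numbers, not values from the paper. A common rule of thumb for path integral simulations is that the number of imaginary-time slices ("beads") should be several times larger than ħω/k_BT for the stiffest mode; as I understand it, the point of combining PI with a GLE is to converge with far fewer beads.

```python
# k_B / (h c) expressed in cm^-1 per kelvin (CODATA value, rounded).
KB_IN_WAVENUMBERS = 0.6950348


def quantum_ratio(wavenumber_cm, temperature_K):
    """Return hbar*omega / (k_B T) for a vibration given in cm^-1."""
    return wavenumber_cm / (KB_IN_WAVENUMBERS * temperature_K)


T_room = 300.0
# Assumed, illustrative frequencies in cm^-1 (not taken from the PNAS paper).
for label, wavenumber in (("O-H stretch", 3400.0), ("intermolecular mode", 200.0)):
    r = quantum_ratio(wavenumber, T_room)
    zero_point_over_kT = 0.5 * r  # zero-point energy hbar*omega/2 in units of k_B T
    print(f"{label:20s} hbar*omega/kT = {r:5.1f}  zero-point energy/kT = {zero_point_over_kT:4.1f}")
```

A ratio well above 1 (here well above 10 for the O-H stretch) means the mode sits essentially in its quantum ground state, so classical nuclei are a poor approximation; a ratio near 1 marks the crossover to classical behaviour.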

In the figure, the horizontal-axis co-ordinate nu is the difference between the O-H distances to the donor and acceptor oxygen atoms. Thus, nu=0 corresponds to the proton being equidistant between the donor and acceptor.

In liquid water the proton usually stays close to its donor oxygen, so nu=0 is rare. In contrast, for the Zundel cation, H5O2+, the proton is most likely to be equidistant due to the strong H-bond involved [the donor-acceptor distance is about 2.4 Å]. In that case quantum fluctuations play a significant role.

The most important feature of the probability densities shown in the figure is the difference between the solid red and blue curves in the upper panel. This is the difference between quantum and classical. In particular, the probability of a proton being located equidistant between the donor and acceptor becomes orders of magnitude larger due to quantum fluctuations. It is still small (one in a thousand) but relevant to the rare events that dominate many dynamical processes (e.g. auto-ionisation).

On average the H-bonds in water are weak, as indicated by a donor-acceptor distance [d(O-O), the vertical axis in the lower three panels] of about 2.8 Å. However, there are rare [but non-negligible] fluctuations where this distance becomes shorter [~2.5-2.7 Å], characteristic of much stronger bonds. These fluctuations further enhance the quantum effects along the proton transfer (nu) co-ordinate.
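The two co-ordinates used in the figure are simple functions of the three atomic positions in a hydrogen bond. A minimal sketch, assuming Cartesian coordinates in Å and the sign convention nu = d(O_donor-H) - d(O_acceptor-H), so that nu < 0 when the proton sits on its donor (the paper's exact convention should be checked). The geometries below are illustrative, not simulation data.

```python
import math


def hbond_coordinates(o_donor, h, o_acceptor):
    """Return (nu, d_OO) for one hydrogen bond.

    nu   = d(O_donor - H) - d(O_acceptor - H); nu = 0 means the proton is
           equidistant from the two oxygens (assumed sign convention).
    d_OO = donor-acceptor oxygen distance.
    Positions are (x, y, z) tuples in angstrom.
    """
    d_donor_h = math.dist(o_donor, h)
    d_acceptor_h = math.dist(o_acceptor, h)
    return d_donor_h - d_acceptor_h, math.dist(o_donor, o_acceptor)


# Illustrative linear geometries: a typical water H-bond with the proton on
# its donor, and a short, symmetric Zundel-like bond.
typical = hbond_coordinates((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.8, 0.0, 0.0))
zundel_like = hbond_coordinates((0.0, 0.0, 0.0), (1.2, 0.0, 0.0), (2.4, 0.0, 0.0))
print(f"typical:     nu = {typical[0]:+.2f} A, d(O-O) = {typical[1]:.2f} A")
print(f"Zundel-like: nu = {zundel_like[0]:+.2f} A, d(O-O) = {zundel_like[1]:.2f} A")
```

Histogramming (nu, d(O-O)) pairs computed this way over a trajectory gives the kind of joint probability density shown in the lower panels.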

Tuesday, December 3, 2013

Review of strongly correlated superconductivity

On the arXiv, Andre-Marie Tremblay has posted a nice tutorial review, Strongly correlated superconductivity. It is based on some summer school lectures and will be particularly valuable to students. I think it highlights some key concepts particularly clearly.

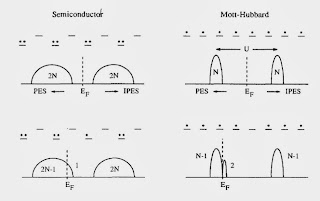

For example, the figure highlights a fundamental difference between a Mott-Hubbard insulator and a band insulator [or semiconductor].

There are also two clear messages that should not be missed, although a minority of people might disagree.

1. For both the cuprates and large classes of organic charge transfer salts the relevant effective Hamiltonians are "simple" one-band Hubbard models. They can capture the essential details of the phase diagrams, particularly the competition between superconductivity, Mott insulator, and antiferromagnetism.

2. Cluster Dynamical Mean-Field Theory (CDMFT) captures the essential physics of these Hubbard models.

I agree completely.

Tremblay does mention some numerical studies that cast doubt on whether there is superconductivity in the Hubbard model on the anisotropic triangular lattice at half filling. My response to that criticism is here.

Monday, December 2, 2013

I have no idea what you are talking about

Sometimes when I am at a conference or in a seminar I find that I have absolutely no idea what the speaker is talking about. It is not just that I don't understand the finer technical details. I struggle to see the context, motivation, and background. The words are just jargon, the pictures are just wiggles, and the equations are random symbols. What is being measured or what is being calculated? Why? Is there a simple physical picture here? How is this related to other work?

A senior experimental colleague I spoke to encouraged me to post this. He thought that his similar befuddlement was because he wasn't a theorist.

There are three audiences for this message.

1. Me. I need to work harder at making my talks accessible and clear.

2. Other speakers. You need to work harder at making your talks accessible and clear.

3. Students. If you are also struggling, don't assume that you are stupid and don't belong in science. It is probably because the speaker is doing a poor job. Don't be discouraged. Don't give up on going to talks. Have the courage to ask "stupid" questions.

Friday, November 29, 2013

Mental health talk in Canberra

Today I am giving a talk to scientists [mostly postdocs and grad students] at the Black Mountain laboratories of CSIRO [Australia's national industrial labs].

Here are the slides.

On the personal side there is something "strange" about the location of this talk. It is less than one kilometre from where I grew up and was an undergrad. Back then I never even thought about these issues.

Thursday, November 28, 2013

Another bad metal talk

Today I am giving a talk on bad metals at the 2013 Gordon Godfrey Workshop on Strong Electron Correlations and Spins in Sydney.

Here is the current version of the slides for the talk.

One question I keep getting asked is, "Are these dirty systems?" NO! They are very clean. The bad metal arises purely from electron-electron interactions.

The main results in the talk are in a recent PRL, written with Jure Kokalj.

The organic charge transfer salts and the relevant Hubbard model are discussed extensively in a review, written with Ben Powell. However, I stress that this bad metal physics is present in a wide range of strongly correlated electron materials. The organics just provide a nice tuneable system to study.

A recent review of the Finite Temperature Lanczos Method is by Peter Prelovsek and Janez Bonca.

Monday, November 25, 2013

The emergence of "sloppy" science

Here "sloppy" science is good science!

How do effective theories emerge?

What is the minimum number of variables and parameters needed to describe some emergent phenomena?

Is there a "blind"/"automated" procedure for determining what the relevant variables and parameters are?

There is an interesting paper in Science

Parameter Space Compression Underlies Emergent Theories and Predictive Models

Benjamin B. Machta, Ricky Chachra, Mark K. Transtrum, James P. Sethna

These issues are not just relevant in physics, but also in systems biology. The authors state:

important predictions largely depend only on a few “stiff” combinations of parameters, followed by a sequence of geometrically less important “sloppy” ones... This recurring characteristic, termed “sloppiness,” naturally arises in models describing collective data (not chosen to probe individual system components) and has implications similar to those of the renormalization group (RG) and continuum limit methods of statistical physics. Both physics and sloppy models show weak dependence of macroscopic observables on microscopic details and allow effective descriptions with reduced dimensionality.

The following idea is central to the paper.

The sensitivity of model predictions to changes in parameters is quantified by the Fisher Information Matrix (FIM). The FIM forms a metric on parameter space that measures the distinguishability between a model with parameters theta_m and a nearby model with parameters theta_m + delta theta_m. The authors show that for several specific models, the eigenvalue spectrum of the FIM is dominated by just a few large eigenvalues. The corresponding eigenvectors are the "stiff" parameter combinations, which effectively define the emergent theory.
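The idea can be seen in a classic toy example of sloppiness, a sum of decaying exponentials. The sketch below is my own illustration, not code from the paper. It assumes y(t) = exp(-k1 t) + exp(-k2 t) sampled at evenly spaced times with independent unit-variance Gaussian noise, so that the FIM in the log-parameters is g_ij = Σ_t (∂y/∂log k_i)(∂y/∂log k_j). With only two parameters the eigenvalues have a closed form; even so, when the two rates are similar one eigenvalue is much smaller than the other.

```python
import math


def fim_two_exponentials(k1, k2, times):
    """Fisher Information Matrix entries (g11, g12, g22) for y(t) = exp(-k1 t) + exp(-k2 t).

    Parameters are log k1 and log k2; unit Gaussian noise is assumed, so
    FIM = J^T J with J[t][i] = dy(t)/d(log k_i) = -k_i t exp(-k_i t).
    """
    g11 = g12 = g22 = 0.0
    for t in times:
        j1 = -k1 * t * math.exp(-k1 * t)
        j2 = -k2 * t * math.exp(-k2 * t)
        g11 += j1 * j1
        g12 += j1 * j2
        g22 += j2 * j2
    return g11, g12, g22


def symmetric_2x2_eigenvalues(a, b, d):
    """Eigenvalues (larger, smaller) of the symmetric matrix [[a, b], [b, d]]."""
    mean = 0.5 * (a + d)
    radius = math.sqrt((0.5 * (a - d)) ** 2 + b * b)
    return mean + radius, mean - radius


times = [0.1 * n for n in range(1, 51)]  # t = 0.1, 0.2, ..., 5.0
for k2 in (1.5, 3.0, 10.0):
    stiff, sloppy = symmetric_2x2_eigenvalues(*fim_two_exponentials(1.0, k2, times))
    print(f"k1 = 1, k2 = {k2:4.1f}: stiff = {stiff:.3e}  sloppy = {sloppy:.3e}  ratio = {sloppy / stiff:.2e}")
```

The closer the two rates, the sloppier the model: the data constrain one combination of k1 and k2 well and the other only weakly. With many parameters the same construction typically gives eigenvalues spread over many decades.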

I have one minor quibble with the first sentence of the paper.

"Physics owes its success (1) in large part to the hierarchical character of scientific theories (2)."

Reference (1) is Eugene Wigner's famous 1960 essay "The Unreasonable Effectiveness of Mathematics in the Natural Sciences".

Reference (2) is Anderson's classic "More is different".

I think Wigner's paper is largely about something quite different from emergence, the focus of Anderson's paper. Wigner is primarily concerned with the even more profound philosophical question as to why nature can be described by mathematics at all. I see no scientific answer on the horizon.

Update. The authors also have longer papers on the same subject

Mark K. Transtrum; Benjamin B. Machta; Kevin S. Brown; Bryan C. Daniels; Christopher R. Myers; James P. Sethna

(2015)

Review: Information geometry for multiparameter models: new perspectives on the origin of simplicity

Katherine N Quinn, Michael C Abbott, Mark K Transtrum, Benjamin B Machta and James P Sethna

(2022)

Sunday, November 24, 2013

The commuting problem

I am not talking about commuting operators in quantum mechanics.

When considering a job offer, or the relative merits of multiple job offers [a luxury], one rarely hears discussion of the daily commute associated with the job. Consider the following two options.

A. The prestigious institution is in a large city and due to the high cost of housing you will have to commute for more than an hour each way. Furthermore, this commute involves driving in heavy traffic or taking and waiting for crowded public transport.

B. A less prestigious institution offers you on campus [or near campus] housing so you can walk 5-15 minutes to work each day.

The difference is considerable. Option A will cost you more than 10 hours each week, increase your stress, and reduce your energy. In light of that, you may end up being more productive and successful at B.

It is interesting that

I realise the options often aren't that simple. Furthermore, you may not have a choice. Also, time is not the only factor. A one hour train ride to and from work each day may not be that bad if you can always get a seat and there are tables to work on. Some people really enjoy a 40 minute bicycle ride to and from work each day.

I am just saying it is an issue to consider.

I once turned down an attractive job offer largely because of the commute. I am glad I did. So is my family.

Thursday, November 21, 2013

Bad metal talk at IISc Bangalore

Today I am giving a seminar in the Physics Department at the Indian Institute of Science in Bangalore.

Here is the current version of the slides.

The main results in the talk are in a recent PRL, written with Jure Kokalj.

The organic charge transfer salts and the relevant Hubbard model are discussed extensively in a review, written with Ben Powell.

Wednesday, November 20, 2013

The role of universities in nation building

There is a general view that great nations have great universities. This motivates significant public and private investment [both financial and political] in universities.

Unfortunately, these days much of the focus is on universities promoting economic growth.

However, I think equally important are the contributions that universities can make to culture, political stability, and positive social change.

Aside: Much of this discussion assumes a causality: strong universities produce strong nations. However, I think caution is in order here. Sometimes it may be correlation not causality. For example, wealthy nations use their wealth to build excellent universities.

The main purpose of this post is to make two bold claims. For neither claim do I have empirical evidence. But, I think they are worth discussing.

First some nomenclature. In every country the quality of institutions decays with ranking. In different countries that decay rate is different. Roughly, the rate decreases from India to Australia to the USA. Below I will distinguish between tier 1 and tier 2 institutions. It is not clear how to define the exact boundary. But, roughly, the number of tier 1 institutions would be no more than 50, 10, and 10 for the USA, India, and Australia respectively. Let's not get into a big debate about exactly how big this number is.

So here are the claims.

1. The key institutions for nation building are not the best institutions but the second tier ones.

2. Making second tier institutions effective is much more challenging than making first tier institutions effective.

Let me try and justify each claim.

1. Great nations are not just built by brilliant scientists, writers and entrepreneurs.

Rather they also require effective school teachers, engineers, small business owners,...

Furthermore, you need citizens who are well informed, critical thinkers and engaged in politics and communities. The best universities are populated with highly gifted and motivated faculty and students. Most would be productive and successful, regardless of fancy buildings or high salaries. The best students will learn a lot regardless of the quality of the teaching. You don't need to teach them how to write an essay or to think critically. In contrast, faculty and students at second tier institutions require significantly more nurturing and development.

2. At tier one institutions governments [or private trustees] just need to provide a certain minimal amount of resources and get out of the way. However, tier two institutions are a completely different ball game. Many are characterised by form without substance.

To be concrete, you can write impressive course profiles, assign leading texts, give lectures, and have fancy graduation ceremonies, but at the end students actually learn little. This painful reality is covered up by soft exams and grading. The focus is on rote learning rather than critical thinking.

Faculty may do research in the sense that they get grants, graduate Ph.D students, and publish papers.

However, the "research" and the Ph.D graduates are of such low quality they make little contribution to the nation.

The problem is accentuated by the fact that many of these institutions don't want to face the painful reality of the low quality of their incoming students, and so they don't adjust their mission and programs accordingly. They just try to mimic tier one institutions.

Reforming these institutions is particularly difficult because they are largely controlled by career administrators who have no real experience or interest in real scholarship or teaching. Instead, they are obsessed with rankings, metrics, reorganisations, buildings, money, and particularly their own careers.

I think these concerns are just as applicable to countries as diverse as the USA, Australia, and India.

For a perspective on the latter, there is an interesting paper by Sabyasachi Bhattacharya

Indian Science Today: An Indigenously Crafted Crisis. He was a recent director of the Tata Institute of Fundamental Research.

I also found helpful a Physics World essay by Shiraz Minwalla.

I welcome comments.

Monday, November 18, 2013

The challenge of intermediate coupling

The point here is a basic one. But, it is important to keep in mind.

One might tend to think that in quantum many-body theory the hardest problems are strong-coupling ones. Let g denote a dimensionless coupling constant, where g=0 corresponds to non-interacting particles. Obviously, for large g perturbation theory is unreliable and progress will be difficult. However, in some problems one can treat 1/g as a perturbative parameter and make progress. But this requires that the infinite-coupling limit be tractable.

Here are a few examples where strong coupling is actually tractable [but certainly non-trivial]:

- The Hubbard model at half filling. For U much larger than t, the ground state is a Mott insulator. There is a charge gap and the low-lying excitations are spin excitations that are described by an antiferromagnetic Heisenberg model. Except for the case of frustration, i.e. on a non-bipartite lattice, the system is well understood.

- BEC-BCS crossover in ultracold fermionic atoms, near the unitarity limit.

- The Kondo problem at low temperatures. The system is a Fermi liquid, corresponding to the strong-coupling fixed point of the Kondo model.

- The fractional quantum Hall effect.

- Cuprate superconductors. For a long time they were considered to be in the large U/t limit [i.e. strongly correlated], with Mottness essential. However, Andy Millis and collaborators argue otherwise, as described here. It is interesting that one gets d-wave superconductivity both from a weak-coupling RG approach and from a strong-coupling RVB theory.

- Quantum chemistry. Weak coupling corresponds to molecular orbital theory. Strong coupling corresponds to valence bond theory. Real molecules are somewhere in the middle. This is the origin of the great debate about the relative merits of these approaches.

- Superconducting organic charge transfer salts. Many can be described by a Hubbard model on the anisotropic triangular lattice at half filling. Superconductivity appears close to the Mott transition, which occurs at U ~ 8t. Ring exchange terms in the Heisenberg model may be important for understanding spin liquid phases.

- Graphene. It has U ~ bandwidth and long range Coulomb interactions. Perturb it and you could end up with an insulator.

- Exciton transport in photosynthetic systems. The kinetic energy, thermal energy, solvent reorganisation energy, and relaxation frequency [cut-off frequency of the bath] are all comparable.

- Water. This is my intuition but I find it hard to justify. It is not clear to me what the "coupling constants" are.
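To make the weak/strong/intermediate distinction concrete, here is a minimal sketch of my own (not from any of the work cited above) using the smallest version of the first example: the two-site Hubbard model with two electrons. Its singlet-triplet gap is known exactly, so it can be compared with the first-order expansion in U/t and with the leading strong-coupling result, the Heisenberg exchange J = 4t²/U. The function names are hypothetical; the singlet sector is diagonalised numerically in the basis {covalent singlet, symmetric ionic state}.

```python
import numpy as np


def exact_gap(U, t=1.0):
    """Exact singlet-triplet gap of the two-site Hubbard model with two electrons."""
    # Singlet sector, basis: {covalent singlet, symmetric ionic (doubly occupied) state}.
    # Hopping couples them with matrix element -2t; the ionic state costs U.
    h_singlet = np.array([[0.0, -2.0 * t],
                          [-2.0 * t, U]])
    e_singlet = np.linalg.eigvalsh(h_singlet)[0]  # lowest eigenvalue
    e_triplet = 0.0  # Pauli exclusion blocks hopping, so the triplet has zero energy
    return e_triplet - e_singlet


def weak_coupling_gap(U, t=1.0):
    """First-order expansion in U/t (valid for U << t)."""
    return 2.0 * t - U / 2.0


def strong_coupling_gap(U, t=1.0):
    """Leading order in t/U: Heisenberg exchange J = 4t^2/U (valid for U >> t)."""
    return 4.0 * t ** 2 / U


if __name__ == "__main__":
    print(f"{'U/t':>6} {'exact':>8} {'weak':>8} {'strong':>8}")
    for U in [0.25, 1.0, 2.0, 4.0, 8.0, 32.0]:
        print(f"{U:6.2f} {exact_gap(U):8.4f} {weak_coupling_gap(U):8.4f} "
              f"{strong_coupling_gap(U):8.4f}")
```

At U = 4t neither expansion works: the first-order weak-coupling estimate predicts a vanishing gap, while the strong-coupling estimate overshoots the exact value (about 0.83t) by roughly 20 per cent. Even this toy problem shows why intermediate coupling is where controlled approximations run out.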

Intermediate coupling is both a blessing and a curse. It is a blessing because there is lots of interesting physics and chemistry associated with it. It is a curse because it is so hard to make reliable progress.

I welcome suggestions of other examples.

Saturday, November 16, 2013

The silly marketing of an Australian university

Recently, I posted about a laundry detergent I bought in India that features "Vibrating molecules" (TM) and wryly commented that the marketing of some universities is not much better. This week I saw an example, again in India. I read that a former Australian cricket captain, Adam Gilchrist [an even bigger celebrity in India than in Australia], was in Bangalore as a "Brand name Ambassador" for a particular Australian university. The university annually offers one Bradman scholarship to an Indian student, for which it pays 50 per cent of the tuition for an undergraduate degree. [Unfortunately, the amount of money spent on the business class airfares associated with the launch of this scholarship probably exceeded the annual value of the scholarship.]

A measure of Gilchrist's integrity is that, at the same university-sponsored event, he said he supported the introduction of legalised betting on sports in India. Many in Australia think such betting has been a disaster, leading to government intervention last year.

What is my problem with this? Gilchrist and Bradman may be cricketing legends. However, neither ever went to university or had any engagement with, or interest in, universities.

Thursday, November 14, 2013

Possible functional role of strong hydrogen bonds in proteins

There is a nice review article, Low-barrier hydrogen bonds in proteins, by M.V. Hosur, R. Chitra, Samarth Hegde, R.R. Choudhury, Amit Das, and R.V. Hosur.

Most hydrogen bonds in proteins are weak, as characterised by a donor-acceptor distance larger than 2.8 Angstroms and interaction energies of a few kcal/mol (~0.1 eV ~ 3 k_B T). However, some bonds are much shorter. In particular, Cleland proposed in 1993 that some enzymes contain H-bonds short enough (R ~ 2.4-2.5 A) that the energy barrier for proton transfer from the donor to the acceptor is comparable to the zero-point energy of the donor-H stretch vibration. These are called low-barrier hydrogen bonds. This proposal remains controversial. For example, Ariel Warshel argues that they have no functional role.
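A quick check of the energy scales in the previous paragraph, as a sketch: it converts the ~0.1 eV H-bond scale into kcal/mol and into units of k_B T at 300 K, and compares it with the harmonic zero-point energy of a donor-H stretch. The 3000 cm^-1 stretch frequency is my assumption for illustration (in short, strong H-bonds the stretch is typically red-shifted, which lowers the zero-point energy), and the helper names are hypothetical.

```python
# Conversion factors (CODATA values, rounded)
EV_IN_KCAL_PER_MOL = 23.0605   # 1 eV expressed in kcal/mol
K_B_EV_PER_K = 8.617333e-5     # Boltzmann constant, eV/K
HC_EV_CM = 1.239842e-4         # h*c in eV*cm: E[eV] = HC_EV_CM * wavenumber[cm^-1]


def to_kcal_per_mol(energy_ev):
    return energy_ev * EV_IN_KCAL_PER_MOL


def in_units_of_kT(energy_ev, temperature_k=300.0):
    return energy_ev / (K_B_EV_PER_K * temperature_k)


def harmonic_zpe_ev(wavenumber_cm):
    """Zero-point energy (1/2) h c nu of one harmonic vibrational mode, in eV."""
    return 0.5 * HC_EV_CM * wavenumber_cm


if __name__ == "__main__":
    cases = [("H-bond energy scale", 0.1),
             ("ZPE of a 3000 cm^-1 stretch (assumed)", harmonic_zpe_ev(3000.0))]
    for label, e in cases:
        print(f"{label}: {e:.3f} eV = {to_kcal_per_mol(e):.1f} kcal/mol "
              f"= {in_units_of_kT(e):.1f} k_B T at 300 K")
```

With these numbers the H-bond scale is about 2.3 kcal/mol (roughly 4 k_B T) and the zero-point energy about 4.3 kcal/mol, so a proton-transfer barrier of a few kcal/mol is indeed comparable to the zero-point energy.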

The authors perform extensive analysis of crystal structure databases, for both proteins and small molecules, in order to identify the relative abundance of short bonds, and their location relative to the active sites of proteins. Here are a few things I found interesting.

1. For a strong bond, the zero-point motion along the bond direction will be much larger than in the perpendicular directions. This means that there should be significant anisotropy in the ellipsoid associated with the uncertainty of the hydrogen atom position determined from neutron scattering [ADP = Atomic Displacement Parameter = Debye-Waller factor]. The ellipsoid is generally spherical for normal [i.e., common and weak] H-bonds. They find that anisotropy is correlated with the presence of short bonds and with "matching pK_a's" [i.e., the donor and acceptor have similar chemical identity and proton affinity], as one would expect.

2. For 36 different protein structures they find very few LBHBs. Furthermore, in many of these structures the LBHBs identified are away from the active site. But this may itself be significant, as discussed below.

3. An LBHB may play a role in excited state proton transfer in green fluorescent protein, as described here.

4. In HIV-1 protease there is a very short H-bond with no barrier.

5. There is a correlation between the location of short H-bonds and the "folding cores" of specific proteins, including HIV-1 protease. These sites are identified through NMR, which allows one to study partially denatured [i.e. unfolded] protein conformations. This suggests that short H-bonds may play a functional role in protein folding.
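Point 1 can be made quantitative with a few lines of linear algebra. The sketch below is my own illustration, not the authors' analysis: it takes a 3x3 atomic displacement tensor U (in Å², assumed already expressed in Cartesian axes; U_ij values as stored in CIF files refer to crystal axes and would first need converting), computes the ratio of smallest to largest principal mean-square displacement (1 for a sphere, smaller for an elongated ellipsoid; one common measure, not necessarily the one used in the review), and checks whether the long axis points along the donor-acceptor direction. The example tensors and coordinates are hypothetical.

```python
import numpy as np


def principal_msds(U):
    """Eigenvalues (ascending) and eigenvectors of a symmetric 3x3 ADP tensor U (A^2)."""
    U = np.asarray(U, dtype=float)
    if U.shape != (3, 3) or not np.allclose(U, U.T):
        raise ValueError("U must be a symmetric 3x3 matrix")
    values, vectors = np.linalg.eigh(U)
    if values[0] <= 0:
        raise ValueError("U must be positive definite")
    return values, vectors


def anisotropy(U):
    """U_min / U_max: 1 for a spherical ellipsoid, smaller for an elongated one."""
    values, _ = principal_msds(U)
    return values[0] / values[-1]


def alignment_with_bond(U, donor, acceptor):
    """|cos| of the angle between the longest ellipsoid axis and the donor->acceptor vector."""
    _, vectors = principal_msds(U)
    major_axis = vectors[:, -1]  # eigenvector of the largest eigenvalue
    bond = np.asarray(acceptor, dtype=float) - np.asarray(donor, dtype=float)
    return abs(major_axis @ bond) / np.linalg.norm(bond)


if __name__ == "__main__":
    # Hypothetical tensors: a weak H-bond (isotropic) and a short one elongated along x
    U_weak = np.diag([0.02, 0.02, 0.02])
    U_short = np.diag([0.06, 0.02, 0.02])
    donor, acceptor = [0.0, 0.0, 0.0], [2.45, 0.0, 0.0]  # donor-acceptor along x
    print(anisotropy(U_weak), anisotropy(U_short))
    print(alignment_with_bond(U_short, donor, acceptor))
```

An anisotropy well below 1 together with an alignment close to 1 is the signature described in point 1: enhanced proton motion along the donor-acceptor axis.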

Monday, November 11, 2013

Tata Colloquium on organic Mott insulators

Tomorrow I am giving the theory colloquium at the Tata Institute of Fundamental Research in Mumbai. My host is Kedar Damle.

Here are the slides for "Frustrated Mott insulators: from quantum spin liquids to superconductors".

A related review article was co-authored with Ben Powell.

Saturday, November 9, 2013

Towards effective scientific publishing and career evaluation by 2030?

Previously I have argued that Science is broken and raised the question, Have journals become redundant and counterproductive? I recommend reading these earlier posts to better understand this one.

Some of the problems that need to be addressed are:

- journals are wasting a lot of time and money

- rubbish and mediocrity are getting published, sometimes in "high impact" journals

- honorary authorship leads to long author lists and misplaced credit

- increasing emphasis on "sexy" speculative results

- metrics are taking priority over rigorous evaluation

- negative results or confirmatory studies don't get published

- lack of transparency of the refereeing and editorial process

- .....

It is always much easier to identify problems than to provide constructive and realistic solutions.

Here is my proposal for a possible way forward. I hope others will suggest even better approaches.

1. Abolish journals. They are an artefact of the pre-internet world and are now doing more harm than good.

2. Scientists will post papers on the arXiv.

3. Every scientist receives only 2 paper tokens per year. This entitles them to post two single-author papers [or four dual-author papers, etc.] per year on the arXiv. Unused credits can be carried over to later years. There will be no limit on paper length. Overall, this should increase the quality of papers and remove the problem of "honorary" authors.

4. To keep receiving 2 tokens per year, each scientist must write commentaries on 2 other papers. Multiple-author commentaries are allowed. These commentaries can include new results checking the results of the paper commented on. Authors of the original paper can write responses.

5. When people apply for jobs, grants, and promotion they will submit their publication list and their commentary list. They will be evaluated on the quality of both. Those evaluating a candidate will also find the quantity and quality of commentaries on the candidate's papers very useful.

The above draft proposal is far from perfect and I can already think of silly things that people will do to publish more... However, for all its faults I sincerely believe that this system would be vastly better than the current one.

The first step is to get the arXiv to allow commentaries to be added. But this will only become really effective when there is a career incentive for people to write careful, critical, and detailed commentaries.

So, fire away! I welcome comments and alternative suggestions.
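The token rules in points 3 and 4 of the proposal amount to simple bookkeeping: "two single-author or four dual-author papers" means each of n co-authors pays 1/n of a token. Here is a minimal sketch of how such a ledger could work. Everything in it is hypothetical illustration: the class and method names are invented, and the choice to split commentary credit as 1/n per co-author, like paper tokens, is my assumption, since the proposal only says multiple-author commentaries are allowed.

```python
from fractions import Fraction

TOKENS_PER_YEAR = 2        # point 3 of the proposal
COMMENTARIES_REQUIRED = 2  # point 4 of the proposal


class TokenLedger:
    """Hypothetical bookkeeping for the paper-token proposal (illustration only)."""

    def __init__(self):
        self.balance = {}       # scientist -> unused tokens, carried over between years
        self.commentaries = {}  # scientist -> commentary credit earned this year

    def new_year(self, scientists):
        """Give the annual allocation to newcomers and to those who met the commentary quota."""
        for s in scientists:
            newcomer = s not in self.balance
            if newcomer or self.commentaries.get(s, 0) >= COMMENTARIES_REQUIRED:
                self.balance[s] = self.balance.get(s, Fraction(0)) + TOKENS_PER_YEAR
            self.commentaries[s] = Fraction(0)

    def write_commentary(self, *authors):
        """Assumption: a commentary with n authors earns each author 1/n of a credit."""
        if not authors:
            raise ValueError("a commentary needs at least one author")
        share = Fraction(1, len(authors))
        for a in authors:
            self.commentaries[a] = self.commentaries.get(a, Fraction(0)) + share

    def post_paper(self, *authors):
        """Each of n authors pays 1/n token; the paper is refused if any author is short."""
        if not authors:
            raise ValueError("a paper needs at least one author")
        cost = Fraction(1, len(authors))
        if any(self.balance.get(a, Fraction(0)) < cost for a in authors):
            return False
        for a in authors:
            self.balance[a] -= cost
        return True


if __name__ == "__main__":
    ledger = TokenLedger()
    ledger.new_year(["Alice", "Bob"])    # both start with 2 tokens
    ledger.post_paper("Alice", "Bob")    # True: costs each of them 1/2
    ledger.post_paper("Alice")           # True: Alice is left with 1/2
    ledger.post_paper("Alice")           # False: 1/2 is not enough for a solo paper
    ledger.write_commentary("Bob")
    ledger.write_commentary("Bob")
    ledger.new_year(["Alice", "Bob"])    # only Bob met the commentary quota
    print(ledger.balance)                # Alice keeps 1/2; Bob has 3/2 + 2 = 7/2
```

Exact fractions avoid rounding drift when papers have, say, three or seven authors; the same structure would make it easy to explore how people might game the rules.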