The public release of ChatGPT was a landmark that surprised many people, both members of the general public and researchers working in Artificial Intelligence. All of a sudden, it seemed that Large Language Models had capabilities that some thought were a decade away or not even possible. It was as if the field had undergone a "phase transition." This idea turns out to be more than just a physics metaphor. It has been made concrete and rigorous in the following paper.

Emergent Abilities of Large Language Models

Jason Wei, Yi Tay, Rishi Bommasani, Colin Raffel, Barret Zoph, Sebastian Borgeaud, Dani Yogatama, Maarten Bosma, Denny Zhou, Donald Metzler, Ed H. Chi, Tatsunori Hashimoto, Oriol Vinyals, Percy Liang, Jeff Dean, William Fedus

They use the following definition: "Emergence is when quantitative changes in a system result in qualitative changes in behavior," citing Phil Anderson's classic "More is Different" article (even though the article does not contain the word "emergence").

In this paper, we will consider a focused definition of emergent abilities of large language models:

An ability is emergent if it is not present in smaller models but is present in larger models.

How does one define the "size" or "scale" of a model? Wei et al. note that "Today’s language models have been scaled primarily along three factors: amount of computation, number of model parameters, and training dataset size."
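To get a feel for how these three factors relate, here is a minimal sketch using a widely quoted rule of thumb that training compute is roughly 6 FLOPs per parameter per training token. This rule is an assumption brought in for illustration; it is not taken from the quoted passage. The function name is hypothetical, and the GPT-3 figures (175 billion parameters, about 300 billion training tokens) are the commonly reported ones.

```python
def approx_training_flops(num_parameters, num_training_tokens):
    """Rough training compute, assuming ~6 FLOPs per parameter per token.

    This is a rule-of-thumb estimate, not an exact count.
    """
    return 6 * num_parameters * num_training_tokens


# Commonly reported GPT-3 figures, used here only as an illustration.
gpt3_parameters = 175e9
gpt3_tokens = 300e9
gpt3_flops = approx_training_flops(gpt3_parameters, gpt3_tokens)
print(f"Approximate training compute: {gpt3_flops:.2e} FLOPs")
```

Under this assumption, parameters and dataset size combine into a single compute number, which is one reason training FLOPs is a convenient single measure of scale.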

The essence of the analysis in the paper is summarised as follows.

We first discuss emergent abilities in the prompting paradigm, as popularized by GPT-3 (Brown et al., 2020). In prompting, a pre-trained language model is given a prompt (e.g. a natural language instruction) of a task and completes the response without any further training or gradient updates to its parameters.

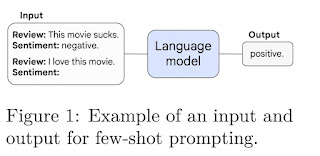

An example of a prompt is shown below

Brown et al. (2020) proposed few-shot prompting, which includes a few input-output examples in the model’s context (input) as a preamble before asking the model to perform the task for an unseen inference-time example.
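As a rough illustration of few-shot prompting, the sketch below assembles a two-shot prompt as plain text. The function name, the "Q:"/"A:" labels, and the arithmetic questions are all made up for this example; they are not the format used by Brown et al. or Wei et al.

```python
def build_few_shot_prompt(examples, query, input_label="Q", output_label="A"):
    """Join input-output examples and an unseen query into one prompt string."""
    lines = []
    for example_input, example_output in examples:
        lines.append(f"{input_label}: {example_input}")
        lines.append(f"{output_label}: {example_output}")
    # The final answer slot is left empty for the model to complete.
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")
    return "\n".join(lines)


# Two worked examples (hypothetical) followed by one unseen question.
two_shot_examples = [
    ("What is (7 + 5) mod 4?", "0"),
    ("What is (9 + 2) mod 5?", "1"),
]
prompt = build_few_shot_prompt(two_shot_examples, "What is (8 + 6) mod 3?")
print(prompt)
```

The model sees only this text. Its parameters are not updated; the examples simply sit in the context ahead of the new question.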

The ability to perform a task via few-shot prompting is emergent when a model has random performance until a certain scale, after which performance increases to well-above random.

An example is shown in the figure below. The horizontal axis is the number of training FLOPs for the model, a measure of model scale. The vertical axis is the model's accuracy on a task, modular arithmetic, for which the model was not designed and for which it was given only two-shot prompting. The red dashed line is the performance of a random model. The purple data are for GPT-3 and the blue for LaMDA. Note how, once the model scale reaches about 10^22 training FLOPs, there is a rapid onset of ability.
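One way to make "random performance until a certain scale, then well above random" operational is sketched below. The function name, the 0.05 margin, and the data points are assumptions invented for illustration; the numbers are not read off the figure or taken from the paper.

```python
def emergence_scale(points, random_baseline, margin=0.05):
    """Return the smallest scale at and beyond which accuracy is above
    random_baseline + margin, provided all smaller scales are near random.

    points is a list of (scale, accuracy) pairs. Returns None if the ability
    never appears, or if it is already present at the smallest scale.
    """
    ordered = sorted(points)
    threshold = random_baseline + margin
    for i, (scale, _) in enumerate(ordered):
        near_random_before = all(acc <= threshold for _, acc in ordered[:i])
        above_random_after = all(acc > threshold for _, acc in ordered[i:])
        if near_random_before and above_random_after:
            # An ability present at every scale is not emergent.
            return scale if i > 0 else None
    return None


# Invented (training FLOPs, accuracy) pairs for a hypothetical model family.
hypothetical_points = [
    (1e19, 0.01),
    (1e20, 0.02),
    (1e21, 0.02),
    (1e22, 0.30),
    (1e23, 0.50),
]
onset = emergence_scale(hypothetical_points, random_baseline=0.02)
print(f"Ability appears at about {onset:.0e} training FLOPs")
```

The choice of margin is arbitrary here. A different margin or a different accuracy metric could move the apparent onset, so this is a sketch of the definition rather than a test for emergence.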

Wei et al. point out that "there are currently few compelling explanations for why such abilities emerge the way that they do".

The authors have encountered some common characteristics of emergent properties. They are hard to predict or anticipate before they are observed. They are often universal, i.e., they can occur in a wide range of different systems and are not particularly sensitive to the details of the components. Even after emergent properties are observed, it can remain hard to explain why they occur, even when one has a good understanding of the properties of the system at a smaller scale. For example, superconductivity was observed in 1911 and only explained in 1957 by the BCS theory.

On the positive side, this paper presents hope that computational science and technology are at the point where AI may produce more exciting capabilities. On the negative side, there is also the possibility of significant societal risks such as having unanticipated power to create and disseminate false information, bias, and toxicity.

Aside: One thing I found surprising is that the authors did not reference John Holland, a computer scientist, and his book, Emergence.

I thank Gerard Milburn for bringing the paper to my attention.
