Spontaneous symmetry breaking is a fundamental concept in condensed matter and quantum field theory. Amongst philosophers of science the concept is receiving increasing attention, particularly in the context of discussions about emergence.

How do we understand the following two observations about a system at zero temperature?

For a finite-sized system at zero temperature there is no symmetry breaking. The ground state is non-degenerate and transforms as the trivial representation of the symmetry group of the Hamiltonian.
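This can be checked on a very small example. The sketch below diagonalises a 4-site spin-1/2 Heisenberg ring, $H = J\sum_i \vec{S}_i\cdot\vec{S}_{i+1}$ with $J = 1$ and periodic boundary conditions (the system size and $J$ are my illustrative choices, not anything from the discussion above). It shows a unique ground state with total spin $S = 0$, i.e. an SU(2) singlet, the trivial representation. This is consistent with the Lieb-Mattis theorem for bipartite lattices.

```python
import numpy as np
from functools import reduce

# Spin-1/2 operators (hbar = 1)
sx = np.array([[0, 1], [1, 0]], dtype=complex) / 2
sy = np.array([[0, -1j], [1j, 0]], dtype=complex) / 2
sz = np.array([[1, 0], [0, -1]], dtype=complex) / 2
eye = np.eye(2, dtype=complex)

def site_op(op, i, n):
    """Embed a single-site operator at site i of an n-site system."""
    return reduce(np.kron, [op if j == i else eye for j in range(n)])

def heisenberg_ring(n, J=1.0):
    """H = J sum_i S_i . S_{i+1} with periodic boundary conditions."""
    H = np.zeros((2**n, 2**n), dtype=complex)
    for i in range(n):
        k = (i + 1) % n
        for s in (sx, sy, sz):
            H += J * site_op(s, i, n) @ site_op(s, k, n)
    return H

def total_spin_squared(n):
    """(S_tot)^2, where S_tot is the sum of the single-site spins."""
    S2 = np.zeros((2**n, 2**n), dtype=complex)
    for s in (sx, sy, sz):
        Stot = sum(site_op(s, i, n) for i in range(n))
        S2 += Stot @ Stot
    return S2

n = 4
energies, states = np.linalg.eigh(heisenberg_ring(n))
S2 = total_spin_squared(n)
# Only the four lowest states are printed: the ground state and the first
# excited triplet, each of which lies in a single total-spin sector.
for E, v in zip(energies[:4], states.T[:4]):
    s2 = np.real(v.conj() @ S2 @ v)
    S = (-1 + np.sqrt(1 + 4 * s2)) / 2   # invert S(S+1)
    print(f"E = {E:+.4f}  S = {S:.2f}")
```

The gap between the singlet ground state and the first excited level is finite here. The question raised below is what happens to such gaps as the system grows.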

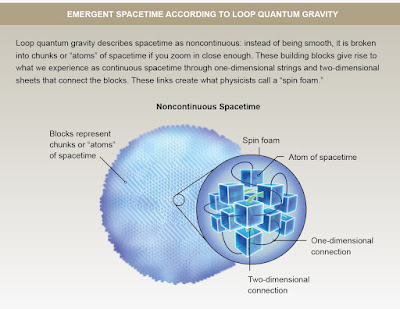

In the thermodynamic limit, there is a family of degenerate ground states, related to one another by transformations of the symmetry group. This is captured by the picture of the Mexican hat potential.

Motion along the trough is associated with the Goldstone mode. Motion perpendicular to the trough (the amplitude mode) is associated with the "Higgs boson".
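To make the last two statements concrete, here is a minimal sketch assuming a toy classical potential $V = -A r^2 + B r^4$ for a two-component order parameter $(x, y)$, with hypothetical coefficients $A = 1$, $B = 0.25$. It computes the curvature of the potential (a numerical Hessian) at several points on the trough. One curvature vanishes (motion along the trough costs no energy, hence a gapless Goldstone mode) and the other equals $4A$ (radial motion is stiff, hence a gapped "Higgs" mode). Every angle on the trough gives the same answer, reflecting the degeneracy of the family of ground states.

```python
import numpy as np

A, B = 1.0, 0.25   # hypothetical coefficients of V = -A r^2 + B r^4

def mexican_hat(x, y):
    """Mexican hat potential for a two-component order parameter (x, y)."""
    r2 = x**2 + y**2
    return -A * r2 + B * r2**2

def hessian(f, x, y, h=1e-4):
    """Central-difference Hessian of f at the point (x, y)."""
    fxx = (f(x + h, y) - 2 * f(x, y) + f(x - h, y)) / h**2
    fyy = (f(x, y + h) - 2 * f(x, y) + f(x, y - h)) / h**2
    fxy = (f(x + h, y + h) - f(x + h, y - h)
           - f(x - h, y + h) + f(x - h, y - h)) / (4 * h**2)
    return np.array([[fxx, fxy], [fxy, fyy]])

r0 = np.sqrt(A / (2 * B))              # radius of the trough, where dV/dr = 0
for phi in (0.0, 1.0, 2.5):            # every angle on the trough is an equivalent minimum
    x0, y0 = r0 * np.cos(phi), r0 * np.sin(phi)
    curvatures = np.linalg.eigvalsh(hessian(mexican_hat, x0, y0))
    # One curvature ~ 0 (along the trough), one ~ 4A (across it)
    print(f"phi = {phi:.1f}  V = {mexican_hat(x0, y0):+.4f}  curvatures = {curvatures}")
```

Small-oscillation frequencies go as the square root of these curvatures, which is why one mode is gapless and the other is not.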

How does this picture connect with a finite system?

An intuitive picture is that the ball in the trough has a finite mass, so motion around the trough is like the quantum mechanics of a rotor with a finite moment of inertia. The rotor then has a non-degenerate ground state, with equal probability of being found at any angle. What might the moment of inertia be? For reasons described below, it turns out to be related to the superfluid stiffness.
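The rotor picture can be checked numerically. The sketch below (assuming $\hbar = 1$ and a finite-difference grid of 200 angles, both my choices) diagonalises $H = -\frac{1}{2I}\frac{d^2}{d\theta^2}$ on a periodic angular grid. The ground state is non-degenerate with a uniform probability over angle, and the gap to the first excited level is $1/(2I)$, so it closes as the moment of inertia grows.

```python
import numpy as np

def rotor_spectrum(inertia, n_grid=200, hbar=1.0):
    """Eigenpairs of H = -(hbar^2 / 2I) d^2/dtheta^2 on a periodic grid of n_grid angles."""
    h = 2.0 * np.pi / n_grid                      # angular grid spacing
    # Second-difference approximation to d^2/dtheta^2 with periodic wrap-around
    lap = (-2.0 * np.eye(n_grid)
           + np.eye(n_grid, k=1)
           + np.eye(n_grid, k=-1))
    lap[0, -1] = lap[-1, 0] = 1.0
    lap /= h**2
    ham = -(hbar**2 / (2.0 * inertia)) * lap
    return np.linalg.eigh(ham)                     # ascending energies, columns are states

for inertia in (1.0, 10.0, 100.0):
    energies, states = rotor_spectrum(inertia)
    gap = energies[1] - energies[0]
    prob = np.abs(states[:, 0])**2                 # ground-state probability over angles
    spread = np.max(prob) - np.min(prob)
    print(f"I = {inertia:6.1f}  gap = {gap:.5f}  1/(2I) = {1 / (2 * inertia):.5f}  "
          f"spread of P(theta) = {spread:.1e}")
```

If the moment of inertia grows with the size of the system, the rotor levels collapse onto the ground state in the thermodynamic limit, which is the sense in which a degenerate family emerges.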

For the case of a Heisenberg antiferromagnet, the physics was worked out by Phil Anderson in 1952, when he introduced the concept of a "tower of states" that become degenerate in the thermodynamic limit.

They are described by the following effective Hamiltonian:

$$H_{\mathrm{eff}} = \frac{c^2}{2\rho_s V}\,\vec{S}^2$$

Here $c$ is the speed of the magnons (the Goldstone bosons), $\vec{S}$ is the total spin, $V = L^d$ is the volume of the system, and $\rho_s$ is the spin stiffness associated with the broken symmetry. As the thermodynamic limit is approached, the energies of these states scale as $L^{-d}$, where $d$ is the dimension of the system. In contrast, the energies of the lowest magnon states scale as $L^{-1}$. Thus, for $d \geq 2$, in exact diagonalisation of sufficiently large systems the tower of states should lie clearly below the magnon states.

In the figure below, the top panel shows the low-lying eigenstates. The lowest-energy states do scale with $S(S+1)$, the eigenvalue of $\vec{S}^2$. The middle panel shows how these states separate from the magnon states.
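Comparing $H_{\mathrm{eff}} = c^2\vec{S}^2/(2\rho_s V)$ with the rotor Hamiltonian $\vec{S}^2/(2I)$ identifies the moment of inertia as $I = \rho_s V/c^2$, which grows with the volume. The sketch below compares the tower energies with the lowest magnon energy. It assumes $\hbar = 1$, a linear magnon dispersion $E = ck$ with smallest nonzero momentum $k = 2\pi/L$ (periodic boundary conditions), and hypothetical values $c = 1$, $\rho_s = 0.2$. The ratio of the $S = 1$ tower energy to the lowest magnon energy scales as $L^{1-d}$, and the number of tower levels below the lowest magnon grows with $L$.

```python
import math

C, RHO_S = 1.0, 0.2   # hypothetical magnon speed and spin stiffness (hbar = lattice spacing = 1)

def tower_energy(S, L, d):
    """Eigenvalue of H_eff = c^2 S^2 / (2 rho_s V) with V = L^d, using S^2 -> S(S+1)."""
    return C**2 * S * (S + 1) / (2.0 * RHO_S * L**d)

def lowest_magnon_energy(L):
    """Assumed linear dispersion E = c k at the smallest nonzero momentum k = 2 pi / L."""
    return C * 2.0 * math.pi / L

def tower_levels_below_magnon(L, d):
    """Count the tower levels S = 1, 2, ... that lie below the lowest magnon."""
    S = 0
    while tower_energy(S + 1, L, d) < lowest_magnon_energy(L):
        S += 1
    return S

for d in (2, 3):
    for L in (4, 8, 16, 32):
        ratio = tower_energy(1, L, d) / lowest_magnon_energy(L)
        print(f"d = {d}  L = {L:2d}  E_tower(S=1)/E_magnon = {ratio:.4f}  "
              f"tower levels below magnon = {tower_levels_below_magnon(L, d)}")
```

This uses only the effective Hamiltonian, not an exact diagonalisation, so it illustrates the scaling argument rather than any particular numerical result.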

More recently there has been some interesting work that explores how the tower of states appears in the entanglement entropy.

Entanglement Entropy of Systems with Spontaneously Broken Continuous Symmetry

Max A. Metlitski, Tarun Grover

But for now, discussing that is above my pay grade 😀

I thank Gerard Milburn for asking me questions that finally led me to a better physical picture of the issues discussed here.