My UQ economics colleague John Quiggin has an excellent article, Tell 'em they're dreaming. He argues convincingly that nuclear power is irrelevant to reducing carbon emissions in Australia. The argument is purely pragmatic. We currently have no commercial nuclear power plants, no nuclear industry, and no legislative or regulatory framework. Even in the most optimistic scenario [including the highly unlikely prospect of a groundswell of popular and political support for an ambitious nuclear program] it would be around 2040 before any actual electricity would come onto the grid. That is too late.

Aside: the title of the article is an allusion to a famous line in a cult classic Australian movie, The Castle. If Americans want to really appreciate that Australia is a very different culture they should watch this movie with a group of Australians. The Aussies will be dying with laughter and the Americans will be wondering what is so funny.

Tuesday, December 30, 2014

Friday, December 19, 2014

Slides like this should be banned from talks

Increasingly I see people show slides like this in talks.

I don't see the point.

It is just a screen dump of a publication list from a CV.

There is no pedagogical value.

It is just saying, "Look, I have published lots of papers!"

Don't do it.

Wednesday, December 17, 2014

Successful researchers should move on to new hard problems

Based on anecdotal evidence I fear/suspect that metrics, luxury journals, and funding pressures have led to a shift in how some/many of the best researchers operate.

Thirty or more years ago the best researchers would operate as follows.

They would pick a difficult problem/area, work on it for a few years and when they had (hopefully) solved it they would write a few (1-3) papers about it. They would then find some challenging new problem to work on. Meanwhile lesser researchers would then write papers that would work out more of the details of the first problem.

Now, people want to work on lower risk problems with guaranteed steady "outputs". Thus, they are reluctant to "move on", particularly when they have a competitive edge in a new area. It is easy for them to churn out 10-20 more papers on natural "follow up" studies working out all the details. They are "safe" projects for graduate students. These papers may be valuable but they could probably have been done by lesser talents. From the point of view of advancing science it would have been better if the successful leader had moved on to a newer and more challenging problem. So, why don't they? Because their publication rate might drop significantly and it would be risky/difficult to supervise so many students on new projects.

An earlier post raised the question: Why do you keep publishing the same paper?

Is this a fair assessment? Is my concern legitimate? Or am I just naively nostalgic?

Aside: I am on vacation. You should not work on vacations. This post (and the next one) were written last week and posted with a delay (cool, huh!).

Monday, December 15, 2014

Finding the twin state for hydrogen bonding in malonaldehyde

I was quite excited when I saw the picture above when I recently visited Susanta Mahapatra.

One of the key predictions of the diabatic state picture of hydrogen bonding is that there should be an excited electronic state (a twin state) which is the "anti-bonding" combination of the two diabatic states associated with the ground state H-bond.

Recently, I posted about how this state is seen in quantum chemistry calculations for the Zundel cation.

The figure above is taken from

Optimal initiation of electronic excited state mediated intramolecular H-transfer in malonaldehyde by UV-laser pulses

K. R. Nandipati, H. Singh, S. Nagaprasad Reddy, K. A. Kumar, S. Mahapatra

The figure hinted to me that for malonaldehyde the twin state is the S2 excited state, because of the valence bond pictures shown at the bottom of the figure and because the shape of the two potential energy curves is similar to that given by the diabatic state model.

Below I have plotted the curves for a donor-acceptor distance of R=2.5 Angstroms, comparable to that in malonaldehyde.

The vertical scale is such that D=120 kcal/mol, leading to an energy gap between the ground and excited state of about 4 eV, comparable to that in the top figure [which is in atomic units, where Energy = 1 Hartree = 27.2 eV].
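The curves can be sketched as the two eigenvalues of a 2x2 matrix in the diabatic basis. What follows is only a minimal illustration, not the parameterisation used for the plots: it assumes a linear hydrogen bond, identical Morse potentials for the two diabatic states, and a coupling that does not depend on the proton position. D and R are the values quoted above; the Morse width, the equilibrium bond length, and the coupling DELTA are hypothetical values, with DELTA chosen so that the gap at the symmetric point is roughly 4 eV.

```python
import numpy as np

KCAL_PER_MOL_IN_EV = 0.0433641  # 1 kcal/mol expressed in eV


def morse(x, depth, a, x0):
    """Morse potential with its minimum (zero of energy) at x = x0."""
    return depth * (1.0 - np.exp(-a * (x - x0))) ** 2


def adiabatic_curves(r, R, depth, a, r0, coupling):
    """Eigenvalues of [[V_left, coupling], [coupling, V_right]].

    r is the donor-proton distance along a linear bond, so the
    acceptor-proton distance is R - r.
    """
    v_left = morse(r, depth, a, r0)       # proton bonded to the donor
    v_right = morse(R - r, depth, a, r0)  # proton bonded to the acceptor
    mean = 0.5 * (v_left + v_right)
    split = np.sqrt(0.25 * (v_left - v_right) ** 2 + coupling ** 2)
    return mean - split, mean + split     # ground state, twin state


D = 120.0      # kcal/mol, from the text
R = 2.5        # Angstroms, from the text
A_MORSE = 2.2  # 1/Angstrom, hypothetical Morse width
R0 = 0.96      # Angstroms, hypothetical equilibrium bond length
DELTA = 46.0   # kcal/mol, hypothetical coupling between diabatic states

r = np.linspace(0.8, 1.7, 91)  # the middle grid point is r = R/2
ground, twin = adiabatic_curves(r, R, D, A_MORSE, R0, DELTA)
gap_mid_ev = (twin - ground)[len(r) // 2] * KCAL_PER_MOL_IN_EV
print(f"gap at the symmetric point: {gap_mid_ev:.2f} eV")
```

At the symmetric point the two diabatic energies are equal, so the gap between the ground state and the twin state is just twice the coupling.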

Note that in the first figure there is a gap on the vertical scale, and the top and bottom parts of the figure use different linear scales.

Hence, to make a more meaningful comparison I took the potential energy curves and replotted them on a linear scale using the polynomial fits given in the paper. The results are below.

There is reasonable agreement, but only at the semi-quantitative level.

[With regard to units, the distances are in atomic units (Bohr radius = 0.53 Angstroms) and are comparable.]

The figure below shows the calculated transition dipole moment between the ground state and the first excited state. I was surprised that it only varied by about five per cent with change in nuclear geometry.

However, Seth Olsen pointed out to me that this small variation reflects the Franck-Condon approximation [which is very important and robust in molecular spectroscopy].

I also calculated this with the diabatic state model, assuming that the dipole moment of diabatic states did not vary with geometry and the Mulliken-Hush diabaticity condition [that the dipole operator is diagonal in the diabatic state basis] held (see Nitzan for an extensive discussion).

[The units here are 1 atomic unit = 2.54 Debye].

The vertical scale here is the magnitude of the dipole moment in one of the diabatic states. This is also the approximate value of the dipole moment of the molecule at "high" temperatures, above which there is no coherent tunnelling between the two isomers, i.e. the proton is localised on the left or right side of the molecule. The experimental value reported here is about 2.6 Debye.

This is consistent with the claim that the diabatic state model gets the essential physics of the electronic transition.

But, of course, the real molecule is more complex. For example, between the S0 and S2 states there is a "dark" S1 state, characterised as an n to pi* transition, where n is the lone pair orbital on the oxygen. The three states and their associated conical intersections are discussed in a nice paper by Joshua Coe and Todd Martinez.

I think a good way to more rigorously test/establish/disprove the diabatic state picture is to use one of the "unbiased" recipes to construct diabatic states, such as that discussed here, from high-level computational chemistry.

Friday, December 12, 2014

Battling the outbreak of High Impact Factor Syndrome

Hooray for Carl Caves!

He has an excellent piece The High-impact-factor syndrome on The Back Page of the American Physical Society News.

Before giving a response I highlight some noteworthy sentences. I encourage reading the article in full, particularly the concrete recommendations at the end.

.... if you think it’s only a nightmare,.... , you need to wake up. Increasingly, scientists, especially junior scientists, are being evaluated in terms of the number of publications they have in HIF journals, a practice I call high-impact-factor syndrome (HIFS).

I’ve talked to enough people to learn that HIFS is less prevalent in physics and the other hard sciences than in biology and the biomedical sciences and also is less prevalent in North America than in Europe, East Asia, and Australia. For many readers, therefore, this article might be a wake-up call; if so, keep in mind that your colleagues elsewhere and in other disciplines might already have severe cases. Moreover, most physicists I talk to have at least a mild form of the disease.

Do you have HIFS? Here is a simple test. You are given a list of publications, rank-ordered by number of citations, for two physicists working in the same sub-discipline. All of the first physicist’s publications are in PRL and PRA, and all of the second’s are in Nature and Nature Physics. In terms of the citation numbers and publication dates, the two publication records are identical. You are asked which physicist has had more impact.

If you have even the slightest inclination to give the nod to the second physicist, you are suffering from HIFS.

In the case of junior scientists, the situation is more complicated [than senior scientists]. Their publication records are thinner and more recent. The focus shifts from evaluating accomplishment to trying to extract from the record some measure of potential. ...... even if you think publication in HIF journals is informative, it is not remotely as instructive as evaluation of the full record, which includes the actual research papers and the research they report, plus letters of recommendation, research presentations, and interviews. When HIFS intrudes into this evaluation, it amounts to devaluing a difficult, time-consuming, admittedly imperfect process in favor of an easy, marginally informative proxy whose only claim on our attention is that it is objective.

Relying on HIF leads to poor decisions, and the worse and more frequent such decisions are, the more they reinforce the HIFS-induced incentive structure. As physicists, we should know better. We know data must be treated with respect and not be pushed to disclose information it doesn’t have, and we know that just because a number is objective doesn’t mean it is meaningful or informative.

Even more pernicious than applying HIFS to individuals is the influence it exerts on the way we practice physics. Social scientists call this Campbell’s law: “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.” This social-science law is nearly as ironclad as a physical law. In the case of HIFS, there will be gaming of the system. Moreover, our research agenda will change: If rewards flow to those who publish in HIF journals, we will move toward doing the research favored by those journals.

No matter how highly you think of the editors of the HIF journals, they are independent of and unaccountable to the research community, they do not represent the entire range of research in the sciences or in physics, and their decisions are inevitably colored by what sells their magazines.

I just list a few of the concrete and excellent recommendations Carl makes. We are not helpless.

What to do?

Educate administrators that the HIF shortcut, though not devoid of information, is only marginally useful. For any scientist, junior or senior, an evaluation of research potential and accomplishment requires a careful consideration of the scientist’s entire record. A good administrator doesn’t need to be taught this, so this might be a mechanism for identifying and weeding out defective administrators.

Take a look at the San Francisco Declaration on Research Assessment (DORA) which is aimed directly at combating HIFS. Consider adopting its principles and signing the declaration yourself. DORA comes out of the biosciences; signing might help bioscientists put out the fire that is raging through their disciplines and could help to prevent the smoldering in physics from bursting into flame.

Include in ads for positions at your institution a standard statement along the following lines: “Number of publications in high-impact-factor journals will not be a factor in assessing research accomplishments or potential.”

Adopting this final recommendation would send an unambiguous message to everybody concerned: applicants, letter writers, evaluators, and administrators. Making it a commonplace could, I believe, actually change things.

I agree with all of the above. However, I do differ with Carl on a few points. These disagreements are a matter of degree.

1. I groaned when I saw that he lists the impact factors of journals with four and five significant figures. Surely two or three is appropriate.

2. I am more skeptical about using citations to aid decisions. I think they are almost meaningless for young people. They can be of some use in filtering for senior people. But I also think they largely tell us things we already know.

3. Carl suggests we need to recommit to finding the best vehicle to communicate our science and select journals accordingly. I think this is irrelevant. We know the answer: post the paper on the arXiv and possibly email a copy to 5-10 people who may be particularly interested. I argued previously that journals have become irrelevant to science communication. Journals only continue to exist for reasons of institutional inertia and career evaluation purposes.

4. My biggest concern with HIFS is not the pernicious and disturbing impact on careers but the real negative impact on the quality of science.

This is the same concern as that of Randy Schekman.

Simply put, luxury journals encourage and reward crappy science. Specifically they promote speculative and unjustified claims and hype. "Quantum biology" is a specific example. Just a few examples are here, here, and here.

Why does this matter?

Science is about truth. It is about careful and systematic work that considers different possible explanations and does not claim more than the data actually show.

Once a bad paper gets published in a luxury journal it attracts attention.

Some people just ignore it, with a smirk or grimace.

Others waste precious time, grant money, and student and postdoc lives showing the paper is wrong.

Others actually believe it uncritically. They jump on the fashionable bandwagon. They continue to promote the ideas until they move on to the next dubious fashion. They also waste precious resources.

The media believes the hype. You then get bizarre things like The Economist listing among their top 5 Science and Technology books of the year a book on quantum biology (groan...).

Wednesday, December 10, 2014

Strong non-adiabatic effects in a prototype chemical system

This post concerns what may be the fastest known internal conversion process in a chemical system, with non-radiative decay times in the range of 3-8 femtoseconds. Internal conversion is the process whereby in a molecule there is a non-radiative transition between electronic excited states (without change in spin quantum number). This is by definition a breakdown of the Born-Oppenheimer approximation.

Much is rightly made of the fascinating and important fact that excited states of DNA and RNA undergo "ultra-fast" non-radiative decay to their electronic ground state. This photo-stability is important to avoid mutations and protect genetic information. Conical intersections are key. The time scale for comparison is of the order of a picosecond.

The figure below is taken from

Quantum Mechanical Study of Optical Emission Spectra of Rydberg-Excited H3 and Its Isotopomers Susanta Mahapatra and Horst Köppel

It shows the wavelength dependence of the intensity of emission from a 3d (Rydberg) excited state.

There are several things that are noteworthy about the experimental data, given that these are gas phase spectra.

1. The large width of the spectra. In energy units this is of the order of an eV. Gas phase spectra for electronic transitions in typical molecules are usually extremely sharp (See here for a typical example).

2. The two peaks, suggesting the presence of two electronic transitions.

3. The strong isotope effects. For strictly electronic transitions between adiabatic states, there should be no dependence on the nuclear masses. This suggests strong vibronic and quantum nuclear effects.

So what is going on?

The key physics is that of the Jahn-Teller effect, conical intersections, and non-adiabatic effects.

For H3 the equilateral triangle geometry has C3 symmetry. There are then two degenerate electronic ground states with E symmetry, which experience the E x epsilon Jahn-Teller effect, leading to the two adiabatic potential energy surfaces shown below. They touch at a conical intersection. The two peaks in the spectra above correspond to transitions to these two different surfaces.
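A minimal way to see where the two surfaces come from is the textbook linear E x epsilon model. This is only a sketch, assuming linear coupling and a harmonic restoring force; the symbols rho, phi, omega, and lambda are my notation, not taken from the paper. In polar coordinates for the doubly degenerate vibrational mode, (Q_x, Q_y) = rho (cos phi, sin phi), the electronic Hamiltonian in the basis of the two degenerate states and its eigenvalues are

```latex
H_{\mathrm{el}}(\rho,\phi) = \frac{\omega}{2}\,\rho^{2}\,\mathbf{1}
  + \lambda\rho
  \begin{pmatrix} \cos\phi & \sin\phi \\ \sin\phi & -\cos\phi \end{pmatrix},
\qquad
V_{\pm}(\rho) = \frac{\omega}{2}\,\rho^{2} \pm \lambda\rho .
```

The two sheets are degenerate only at rho = 0, where they meet in a cone. In this model the lower sheet has its minimum at rho = lambda/omega, with energy -lambda^2/(2 omega).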

Non-adiabatic coupling leads to rapid transitions between the surfaces leading to the ultra-ultra-fast internal conversion and the very broad spectra. This is calculated in the paper, leading to the theoretical curves shown in the top figure.

More recently, Susanta and some of his students have considered the relative importance of (off-diagonal) non-adiabatic effects, the geometric phase [associated with the conical intersection], and Born-Huang (diagonal) corrections in explaining the spectra.

They find that the first has by far the most dominant effect. The latter two have very small effects that look like they will be difficult to disentangle from experiment. I discussed the elusiveness of experimental signatures of the geometric phase in an earlier post.

Tuesday, December 9, 2014

Topping the bad university employer rankings

Stefan Grimm was a Professor of Medicine at Imperial College London. It was deemed by his supervisors that he was not bringing enough grant income into his department. Tragically, he was later found dead at his home. Email correspondence from Grimm and from his supervisors has been made public on a blog.

It is very sad and disturbing.

This was my first encounter with the excellent blog DC's Improbable Science, written by David Colquhoun FRS, Emeritus Professor of Pharmacology at University College London.

He also has an interesting post that is rightly critical of an Australian university for taking millions in "research funding" from dubious vitamin, herb, and supplement companies.

He also has important posts exposing metrics and hype in luxury journals.

Friday, December 5, 2014

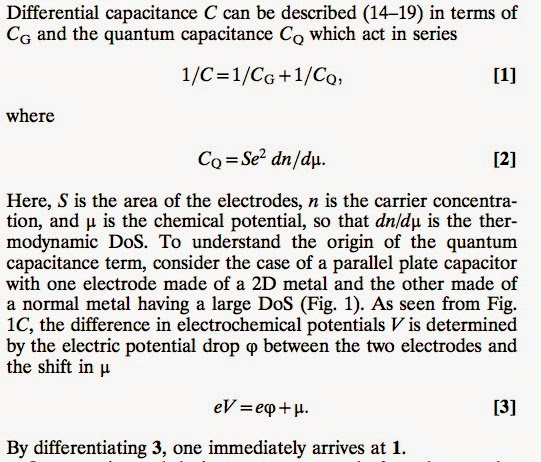

Quantum capacitance is the charge compressibility

In a quantum many-body system a key thermodynamic quantity is the charge compressibility, kappa = dn/d mu, the derivative of the charge density n with respect to the chemical potential mu.

In a degenerate Fermi gas kappa is simply proportional to the density of states at the Fermi energy.

kappa is zero in a Mott insulator. As the Mott transition is approached, the behaviour of kappa is non-trivial. For a bandwidth (or frustration) controlled Mott transition that occurs at half filling, Jure Kokalj and I showed how kappa smoothly approached zero as the Mott transition was approached. However, there is some debate as to what happens for doping controlled transitions, e.g. does kappa diverge as one approaches the Mott insulator?

One thing I had wondered about was how one actually measures kappa accurately in an experiment. Varying the chemical potential and/or charge density is not always possible or straightforward.

See for example, Figure 10 in this review by Jaklic and Prelovsek, which compares experimental results on the cuprates with calculations for the t-J model.

The experimental error bars are large.

At the recent Australasian Workshop on Emergent Quantum Matter, Chris Lobb gave a nice talk about "Atomtronics" where he talked about how you define R, L, and C [resistance, inductance, and capacitance] in cold atom transport experiments. He argued that the capacitance was related to d mu/dn. This got my attention.

Michael Fuhrer pointed out that there are measurements of the "quantum capacitance" for graphene.

Recent measurements for graphene are in this nice paper. They give a helpful review of the technique and the relevant equations.

Personally, I don't find this "derivation" completely obvious, but can follow the algebraic steps.

Why is C_Q called the "quantum capacitance"? This wasn't at all clear to me.

But, there is a helpful discussion in a paper,

Quantum capacitance devices by Serge Luryi

For a non-interacting two dimensional fermion gas the density of states scales inversely with the square of hbar. Hence, it diverges as hbar goes to zero. This means the quantum capacitance diverges and the "quantum" term C_Q in the equation [1] disappears.
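Equation [1] is not reproduced in this text. As a sketch of the argument, assume it has the standard series form, with a classical geometric capacitance per unit area (written C_geo here, which is my label) in series with C_Q, and take a parabolic band of mass m with spin degeneracy 2:

```latex
\frac{1}{C} = \frac{1}{C_{\mathrm{geo}}} + \frac{1}{C_Q},
\qquad
C_Q = e^{2}\,\frac{dn}{d\mu},
\qquad
\frac{dn}{d\mu} = \frac{m}{\pi\hbar^{2}}
\;\Longrightarrow\;
\frac{1}{C_Q} = \frac{\pi\hbar^{2}}{e^{2} m} \to 0
\quad (\hbar \to 0).
```

In that limit only the classical geometric term survives, which is the sense in which C_Q is "quantum".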

In the graphene paper, the authors extract the renormalised Fermi velocity as a function of the density [band filling] and compare it to renormalisation group calculations that take into account electron-electron interactions and predict a logarithmic dependence, as shown below. The red and blue curves are theory and experiment, respectively.

As exciting as the above is, it is still not clear to me how to measure the "quantum capacitance" for a multi-layer system.

Update. (December 10, 2014)

Lu Li brought to my attention his measurements of the capacitance of the 2DEG at the LaAlO3/SrTiO3 interface, and earlier measurements of negative compressibility of correlated two dimensional electron gases (2DEGs) by Eisenstein and by Kravchenko.

Background theory has been discussed extensively by Kopp and Mannhart.

Thursday, December 4, 2014

How big and significant is condensed matter physics?

Occasionally I have to write a paragraph or two about why condensed matter physics is important in the Australian context, where it is arguably under-represented. Here is my latest version.

Condensed matter physics is one of the largest and most vibrant areas of physics.

1. In the past 30 years the Nobel Prize in Physics has been awarded 13 times for work on condensed matter.

2. Since 1998 seven condensed matter physicists [Kohn, Heeger, Ertl, Shechtman, Betzig, Hell, Moerner] have received the Nobel Prize in Chemistry!

3. Of the 144 physicists who were most highly cited in 2014 for papers in the period 2002-2012 (ISI highlycited.com), more than one half are condensed matter physicists.

4. The largest physics conference in the world is the annual March meeting of the American Physical Society. It attracts almost 10,000 attendees and is focused on condensed matter.

Many of the materials first studied by condensed matter physicists are now the basis of modern technology. Common examples include crystalline silicon in computer chips, superconductors in hospital magnetic imaging machines, magnetic multilayers in computer memories, and liquid crystals in digital displays.

There are significant interactions with other fields such as chemistry, materials science, biophysics, and engineering.

A few notes.

On 2. Although some of these people are now in chemistry departments, they all did a Ph.D. in condensed matter physics, and the prize-winning research was, or could arguably have been, published in a journal such as PRB.

On 3. I just went quickly through the list and counted the names I recognised. I welcome more accurate determinations.

I welcome other suggestions or observations.

Wednesday, December 3, 2014

Emergent length scales in quantum matter

Only last week I realised that an important and profound property of emergent quantum matter is the emergence of new length scales. These can be mesoscopic - intermediate between microscopic and macroscopic length scales. Say, very roughly between 100 nanometers and 100 microns.

Previously, I highlighted the emergence of new low energy scales in quantum many-body physics. The energy and length scales are often related.

In or near broken symmetry phases, some emergent length scales can be related to the rigidity of the order parameter such as the spin stiffness.

An example is the superconducting coherence length, xi, which determines the minimum thickness of a thin film required to sustain superconductivity and the size of vortices in a type II superconductor. It is roughly given by xi ~ hbar v_F/Delta ~ a E_F/Delta

where v_F is the Fermi velocity, Delta is the energy gap, a is a lattice constant, and E_F is the Fermi energy. Since in a weak-coupling BCS superconductor Delta is much less than E_F, the length xi can be orders of magnitude larger than a lattice constant, and so is mesoscopic.

In BCS theory, the coherence length can be interpreted roughly as the "size" of a Cooper pair.

In neutral superfluids, such as liquid 4He or bosonic cold atomic gases, the corresponding length scale is sometimes known as the healing length, and defines the size of quantised vortices. In 4He this length scale is microscopic, being of the order of Angstroms, but in cold atoms it can be mesoscopic.

A second independent emergent length scale associated with superconductivity is the London penetration depth, which determines the scale on which magnetic fields penetrate the superconductor, or are expelled, i.e. the Meissner effect.

Just in case one thinks the emergence of new length scales is trivial in the sense that it is really the same as the emergence of new energy scales, consider the case of the Kondo effect. Clearly there are many experimental and theoretical signatures of the Kondo temperature, T_K.

One can easily construct a "Kondo length", L_K ~ hbar v_F / k_B T_K, and can identify this with the size of the "Kondo screening cloud."

Yet this has never been observed experimentally.

Ian Affleck has a nice review article discussing the relevant issues.
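As a rough illustration of how large the "Kondo length" defined above can be, the sketch below evaluates L_K ~ hbar v_F / k_B T_K for a few Kondo temperatures. The Fermi velocity of 10^6 m/s and the list of temperatures are assumptions chosen for illustration only, and the function name is hypothetical.

```python
# Estimate the "Kondo length" L_K ~ hbar * v_F / (k_B * T_K).
# The Fermi velocity and Kondo temperatures are illustrative assumptions.
HBAR = 1.054571817e-34  # reduced Planck constant, J s
K_B = 1.380649e-23      # Boltzmann constant, J/K


def kondo_length(v_fermi, t_kondo):
    """Return L_K in metres for a Fermi velocity (m/s) and Kondo temperature (K)."""
    return HBAR * v_fermi / (K_B * t_kondo)


V_FERMI = 1.0e6  # assumed Fermi velocity, m/s

for t_k in (0.1, 1.0, 10.0, 100.0):
    l_k = kondo_length(V_FERMI, t_k)
    print(f"T_K = {t_k:6.1f} K  ->  L_K ~ {l_k * 1e6:.2f} microns")
```

For Kondo temperatures of order 1 K and this assumed Fermi velocity, L_K is of the order of microns, i.e., mesoscopic, and it shrinks in inverse proportion to T_K.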

Partly as an aside I include below a nice graphic about length scales, taken from a UK Royal Society report on nanotechnology, from 2004.

Monday, December 1, 2014

Talk at grant writing workshop

Here are the slides for my talk at today's workshop on grant writing for the School of Mathematics and Physics at UQ. A rough outline of the talk, with links to relevant earlier posts, is in an earlier post which also attracted some helpful comments.

I welcome further comments and discussion.

Thursday, November 27, 2014

The challenge of moving topological defects in quantum matter

I have really enjoyed this week at the Australasian Workshop on Emergent Quantum Matter. My UQ colleague Matt Davis is to be congratulated for putting together an excellent program. There was a nice balance of cold atom and solid state talks.

Is there anything that stood out to me?

Yes. Vortices, (Josephson) phase coherence, and dimensional crossovers. Vortices kept coming up and remain a fascinating and perplexing problem.

Vortices are mesoscopic, intermediate between the microscopic (atomic) and macroscopic scales. The length scale associated with them is emergent. They have some quantum properties (quantised circulation) but obey classical equations of motion, and yet they interact with microscopic degrees of freedom (quasi-particles and phonons).

When one has a broken symmetry, vortices are novel emergent low energy excitations. They are topological defects in the order parameter. Given how much they have been studied in superfluid 4He and superconductors one would think they were pretty well understood. However, this is not the case. What is particularly poorly understood is the dynamics of these objects.

Stephen Eckel described some beautiful experiments at NIST that recently investigated Josephson type junctions in atomic BECs. My immediate question was how was this any different from landmark experiments performed by Davis and Packard in superfluid 3He? In those experiments the superfluid "healing" (or coherence) length is quite small and there is not a single weak link but many apertures. The origin of the coupling between these links is not clear.

It was also interesting that a key consulting role in these experiments was played by Chris Lobb, an expert on solid state Josephson junctions.

Victor Galitski and Joachim Brand both described theory motivated by recent fermionic cold atom experiments which measured a large mass (both inertial and gravitational, the two are different) for solitons in a quasi-one-dimensional superfluid. Victor discussed recent theoretical calculations based on exact solution of the dynamical Bogoliubov-de Gennes (BdG) equations.

The question of how vortices and quasi-particles interact and the dynamics of a single vortex in a Bose superfluid is highly controversial. Theoretical calculations of the mass of a vortex range from zero to infinity! A brief introduction, including key references, is in this PRL.

Dimensionality matters. Solitons and Luttinger liquids only exist in strictly one dimension. The Berezinskii-Kosterlitz-Thouless transition strictly only exists in two dimensions. However, what happens in quasi-one or quasi-two-dimensional systems is not completely clear, in spite of a lot of theoretical work. Some ultra cold atom experiments may be able to address these questions of dimensional crossover.

Wednesday, November 26, 2014

Grant writing tips

I have been asked to speak at a grant writing workshop for the School of Mathematics and Physics at UQ.

Here are a few preliminary thoughts.

Consider not applying.

Seriously. Consider the opportunity cost. An application requires a lot of time and energy. The chances of success are slim. Would you be better off spending the time writing a paper and waiting to apply next year? Or, would it be best to write one rather than two applications? You do have a choice.

Don't listen to me.

It is just one opinion. Some of my colleagues will give you the opposite advice. I have never been on a grant selection committee. My last 3 grant applications failed. Postmortems of failed applications are just speculation. What does and does not get funded remains a mystery to me.

Take comfort from the "randomness" of the system.

You have a chance. Don't stress the details. Recycle old unsuccessful applications. Don't take it personally when you fail.

Who is your actual audience? Write with only them in mind.

For the Australian Research Council it is probably not your international colleagues but rather the members of the College of Experts. It needs to be written in terms they can understand and be impressed by.

Why should they give YOU a grant?

I find many people sweat about the details of the research project or think if they have a brilliant cutting edge project they will get funded. I doubt it. Track record, and particularly track record relevant to the proposed project, is crucial.

Not all pages are equal.

Unfortunately, the application will be 60-100 pages. Don't kid yourself that reviewers will carefully read and digest every page. Some are much more important than others. You should focus on those.

The first page of the project description is the most important. Polish it.

I sometimes read this and I have no idea what the person is planning to do. I quickly lose interest.

"Contributions to the field" and "Research accomplishments" mean scientific knowledge generation, not career advancement or hyperactivity.

Choose your co-investigators carefully.

They may lift you up or weigh you down. I am usually skeptical of people who have "big name" co-investigators they have never actually published with before. The more investigators the larger the application, and the more material available for criticism. Junior investigators need to realise that the senior people will usually get all the credit for the grant, even if they contributed little to the application. This is the Matthew effect.

Trim the budget.

The larger the budget the greater the scrutiny. It is better to get a small grant than no grant at all. Ridiculously large requests will strain your credibility.

Moderate the hype, both about yourself and technological applications.

There are reviewers like me who will not take you seriously and will be more critical of the application.

Be discerning about what publication metrics (citations, journal impact factors) to include.

Impact factors have no impact on me. I don't see the point or value of short term citations.

Writing IS hard work, even for the experienced.

See Tips in the writing struggle.

Get started early. Get feedback.

Write. Edit. Polish. Rewrite. Polish. Polish.

My criteria for research quality.

Responding to feedback from administrators in the university Research Office.

Put in the application early. They can give very helpful feedback about compliance issues, formatting, page limits. Take with "a grain of salt" advice/exhortations about selling you and the science.

I welcome comments and suggestions.

What advice and suggestions have you received that were helpful or not helpful?

Monday, November 24, 2014

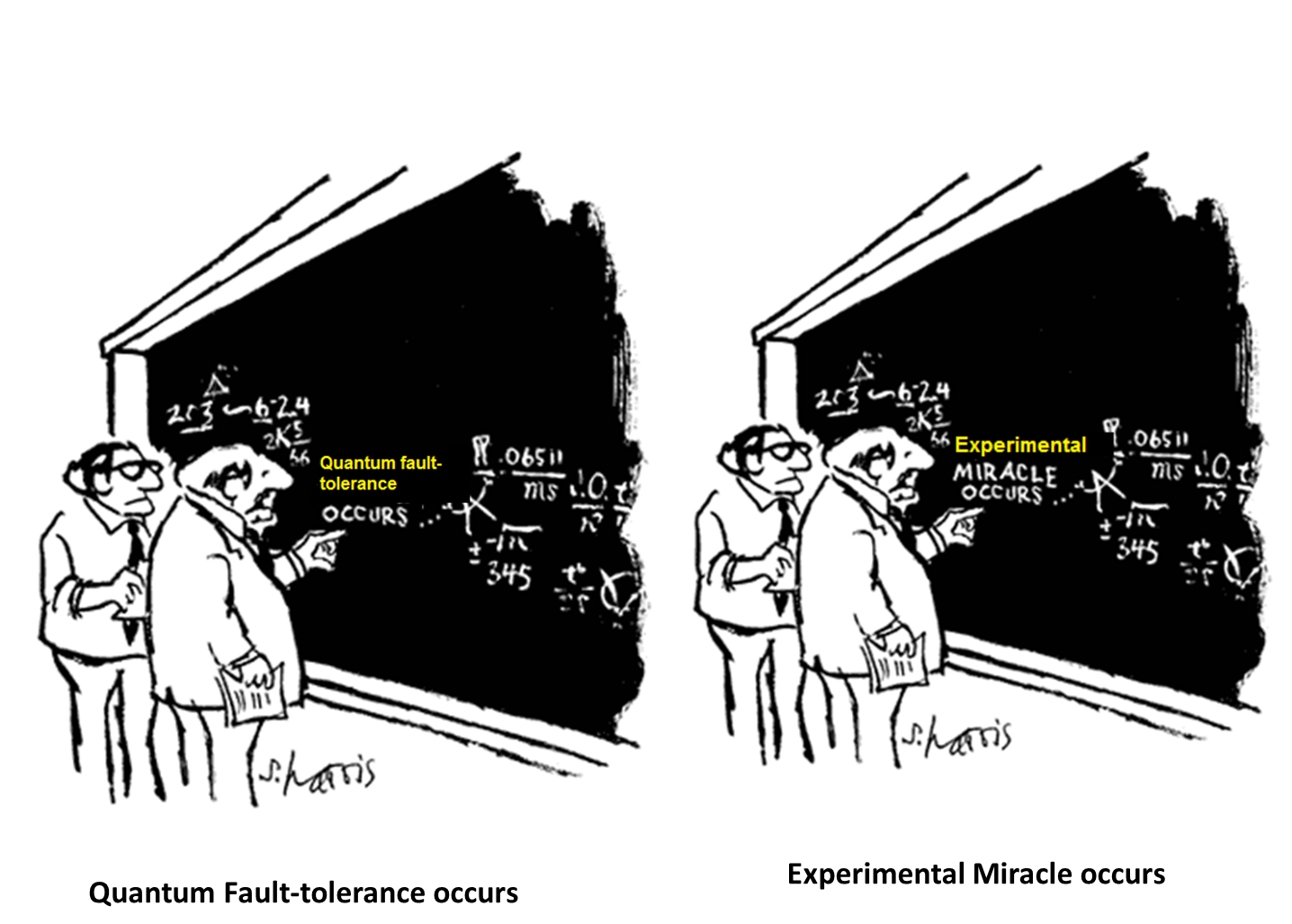

Quantum computing with Majorana fermions is science fiction fantasy

Someone has to say it.

I said it publicly today. Several people told me they were glad to hear it.

Majorana fermions are fascinating from a fundamental science point of view. They are worth investigating by a few theoretical and experimental groups. However, they are the latest fashion that is taking the solid state and quantum information communities by storm. It is the latest exotica. Much of the justification for all this research investment is that Majorana fermions could be used for "fault tolerant" quantum computing.

Let's get real. Let's not kid ourselves. First, as far as I am aware, no one has even demonstrated yet that the relevant solid state "realisations" even exhibit Majorana statistics. Suppose they do. Maybe in a few years someone will have 2 qubits. Looking at the complicated nanoscale devices and fabrication needed I fail to see how on any reasonable time scale (decades?) one is going to produce say 6-8 qubits. Yet even that is just a quantum "abacus", a toy.

The cartoon is taken from Gil Kalai's blog.

Sunday, November 23, 2014

An introduction to emergent quantum matter

Here are the slides for my talk, "An introduction to emergent quantum matter" that I am giving tomorrow at the Australasian Workshop on Emergent Quantum Matter.

A good discussion of some of the issues is Laughlin and Pines' article The Theory of Everything and Piers Coleman's article Many-body Physics: Unfinished Revolution.

A more extensive and introductory discussion by Pines is at Physics for the 21st Century.

I welcome any comments.

Saturday, November 22, 2014

Investing in soft matter

I really enjoyed my visit to the TIFR Centre for Interdisciplinary Sciences (TCIS) of the Tata Institute of Fundamental Research in Hyderabad. This is an ambitious and exciting new venture. Higher education and basic research are expanding rapidly in India, with many new IITs, IISERs, and Central Universities. These are all hiring and so it is a wonderful time to be looking for a science faculty job in India.

The initial focus of hiring of the new campus of TIFR (India's premier research institution in Mumbai) has been on soft condensed matter (broadly defined) with connections in biology and chemistry. There are many good reasons for this focus. Foremost is that there are excellent Indians working in this area. However, I see many other reasons why choosing this area is a much better idea than quantum condensed matter, ultra cold atoms, quantum information, cosmology, elementary particle physics, string theory (yuk!), ...

Other reasons why I think investing in soft matter is wise and strategic include:

- this is exciting and important inter-disciplinary research

- there are real world applications ranging from foams to medicine to polymer turbulence drag reduction [used in oil pipelines and in fire fighter hoses]

- these types of applications are particularly important in Majority World countries

- in public outreach one can talk about flocking and do simple and impressive demonstrations such as the Briggs-Rauscher oscillating chemical reaction or sand flowing through channels.

- the experimental infrastructure and start up costs are relatively small. Most experiments are "table top" and at room temperature. This allows a new institution to get some momentum and "runs on the board" as quickly as possible.

Having said that I think there are significant obstacles and challenges with such interdisciplinary initiatives. These challenges are scientific, intellectual, and cultural (in the disciplinary sense).

Excellent inter-disciplinary teaching and research is just plain hard work and slow. Getting people to put in the time and keep sticking at it is difficult. It requires special individuals (both faculty and students) to build bridges, learn each other's languages, respect, and persevere.

The TCIS Director, Sriram Ramaswamy is co-author of a nice RMP article, Hydrodynamics of soft active matter.

I am looking forward to seeing how this exciting new adventure develops over the years. India is leading the way.
