A few months ago I attended a symposium at UQ on Energy in India. The talks can be viewed on YouTube. The one by Alexie Seller is particularly inspiring.

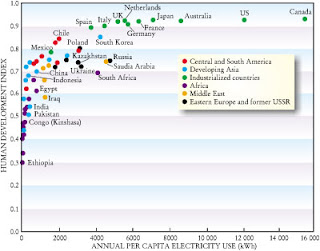

In his presentation, Chris Greig showed a slide similar to the one below, titled "Electricity affects Human well being".

He did not say this, but graphs like this are sometimes used to support claims such as "the more electricity people consume, the better off they will be" or "the only way to lift people out of poverty is to burn more coal".

Two things are very striking about the graph.

First, the initial slope is very steep. Second, the curve levels off quickly.

A little bit of electricity makes a huge difference. If you lack electric lighting, or the minimal electricity needed to run hospitals, schools, basic communications, water pumps, treatment plants and so on, then life is going to be difficult.

However, once you reach about 2000 kWh per person per year, extra electricity makes little difference to basic human well-being. Consumption beyond that level goes to things like frivolous use of air conditioners, aluminium smelters and conspicuous consumption.

Finally, we always need to distinguish between correlation and causation. As people become more prosperous, they do tend to consume more electricity. However, it is not necessarily the electricity consumption that is making them more prosperous. This is clear from how flat the top of the curve is: electricity consumption in Canada is almost four times that of Spain, yet the two countries sit at similar levels of well-being!

A more detailed discussion of the graph is in this book chapter by Vaclav Smil.