Monday, December 30, 2013

My most popular blogposts for 2013

According to blogspot, here are the six most popular posts from this blog over the past year:

Effective "Hamiltonians" for the stock market

Thirty years ago in Princeton

Relating non-Fermi liquid transport properties to thermodynamics

Mental health talk in Canberra

What simple plotting software would you recommend?

A political metaphor for the correlated electron community

Thanks to my readers, particularly to those who write comments.

Best wishes for the New Year!

Friday, December 27, 2013

What product is this?

Previously I posted about a laundry detergent that had "Vibrating molecules" [TradeMark].

Here is a product my son recently bought that benefits from "Invisible science" [TradeMark].

I welcome guesses as to what the product is.

Monday, December 23, 2013

Encouraging undergraduate research

The American Physical Society has prepared a draft statement calling on all universities "to provide all physics and astronomy majors with significant research experiences". The statement is worth reading because of the claims and documentation it presents about some of the benefits of such experiences. In particular, such experiences can better prepare students for a broad range of career options. I agree.

However, I would add some caveats. I think there are two dangers that one should not ignore.

First, departments need to be diligent in ensuring that students are not just used as "cheap labour" for some faculty research. Earlier I posted about What makes a good undergraduate research project?, which attracted several particularly insightful comments.

Second, such undergraduate research experiences are not a substitute for an advanced undergraduate laboratory. APS News recently ran a passionate article, Is there a future for the Advanced Lab? by Jonathan Reichert. It is very tempting for university "bean counters" to propose saving money by replacing expensive advanced teaching labs with students working in research groups instead. Students need both experiences.

Wednesday, December 18, 2013

An acid test for theory

Just because you read something in a chemistry textbook does not mean you should believe it. Basic [pun!] questions about what happens to H+ ions in acids remain outstanding. Is the relevant unit H3O+, H5O2+ [Zundel cation], H9O4+ [Eigen cation], or something else?

There is a fascinating Accounts of Chemical Research article:

Myths about the Proton. The Nature of H+ in Condensed Media

Christopher A. Reed

Here are a few highlights.

H+ must be solvated and is nearly always di-ordinate, i.e. "bonded" to two units. H3O+ is rarely seen.

"In contrast to the typical asymmetric H-bond found in proteins (N–H···O) or ice (O–H···O), the short, strong, low-barrier (SSLB) H-bonds found in proton disolvates, such as H(OEt2)2+ and H5O2+, deserve much wider recognition."

This is particularly interesting because quantum nuclear effects are important in these SSLB H-bonds.

A poorly understood feature of the IR spectra of proton disolvates in condensed phases is that IR bands associated with groups adjacent to H+, such as νCO in H(Et2O)2+ or νCO and δCOH in H(CH3OH)8+,(50) lose much of their intensity or disappear entirely.(51)

So is it Zundel or Eigen? Neither, in wet organic solvents:

Contrary to general expectation and data from gas phase experiments, neither the trihydrated Eigen ion, H3O+·(H2O)3, nor the tetrahydrated Zundel ion, H5O2+·(H2O)4, is a good representation of H+ when acids ionize in wet organic solvents, .... Rather, the core ion structure is H7O3+ ....

The H7O3+ ion has its own unique and distinctive IR spectrum that allows it to be distinguished from alternate formulations of the same stoichiometry, namely, H3O+·2H2O and H5O2+·H2O.(58) It has its own particular brand of low-barrier H-bonding involving the 5-atom O–H–O–H–O core and it has somewhat longer O···O separations than in the H5O2+ ion.

What happens in water? The picture is below.

This is based on the distinctive infrared absorption spectra shown below.

What is particularly interesting is the "continuous broad absorption" (cba) shown in blue. This is very unusual for a chemical IR spectrum. Reed says "Theory has not reproduced the cba, but it appears to be the signature of delocalized protons whose motion is faster than the IR time scale."

I don't quite follow this argument. The protons are delocalised within the flat potential of the SSLB H-bond, but there is a well-defined zero-point energy associated with this. The issue may rather be how this quantum state couples to other fluctuations in the system.

There is a 2011 theory paper by Xu, Zhang, and Voth [unreferenced by Reed] that does claim to explain the "continuous broad absorption". Also, Slovenian work highlighted in my post Hydrogen bonds fluctuate like crazy should be relevant. The key physics may be that for O-O distances of about 2.5-2.6 Å the O-H stretch frequency varies between 1500 and 2500 cm-1. Thus even small fluctuations in the O-O distance produce a large line width.
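The line width argument can be made semi-quantitative with a toy model. The sketch below assumes (my assumption, not something taken from the papers mentioned) that the O-H stretch frequency varies linearly with the O-O distance between the two endpoints quoted above, and that the O-O distance has Gaussian fluctuations of a hypothetical amplitude sigma_r. The slope is then about 10^4 cm-1 per Å, so fluctuations of only 0.03 Å already spread the frequency by roughly 300 cm-1.

```python
import random
import statistics

# Endpoints quoted in the text: ~1500 cm^-1 at R_OO ~ 2.5 A, ~2500 cm^-1 at ~2.6 A.
# Assumption: linear interpolation between them (the real curve is nonlinear).
R_SHORT, OMEGA_SHORT = 2.50, 1500.0
R_LONG, OMEGA_LONG = 2.60, 2500.0
SLOPE = (OMEGA_LONG - OMEGA_SHORT) / (R_LONG - R_SHORT)  # cm^-1 per Angstrom


def oh_stretch(r_oo):
    """Hypothetical linear model: O-H stretch frequency (cm^-1) vs O-O distance (A)."""
    return OMEGA_SHORT + SLOPE * (r_oo - R_SHORT)


def stretch_linewidth(mean_r, sigma_r, n_samples=100_000, seed=0):
    """Monte Carlo estimate of the standard deviation (cm^-1) of the O-H stretch
    frequency when R_OO fluctuates as a Gaussian with mean mean_r and width sigma_r."""
    rng = random.Random(seed)
    freqs = [oh_stretch(rng.gauss(mean_r, sigma_r)) for _ in range(n_samples)]
    return statistics.pstdev(freqs)


if __name__ == "__main__":
    sigma_r = 0.03  # hypothetical O-O fluctuation amplitude (A)
    width = stretch_linewidth(2.55, sigma_r)
    print(f"slope = {SLOPE:.0f} cm^-1 per A")
    print(f"spread of omega ~ {width:.0f} cm^-1 (linear estimate {SLOPE * sigma_r:.0f})")
```

Because the model is linear, the spread is just the slope times sigma_r; the Monte Carlo is only there so that a more realistic nonlinear frequency curve could be swapped into oh_stretch.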

Repeating the experiments in heavy water or deuterated acids would put more constraints on possible explanations since quantum effects in H-bonding are particularly sensitive to isotope substitution and the relevant O-O bond lengths.

[I am just finishing a paper on this subject (see my Antwerp talk for a preview)].

Tuesday, December 17, 2013

Skepticism should be the default reaction to exotic claims

A good principle in science is "extraordinary claims require extraordinary evidence", i.e. the more exotic and unexpected the claimed new phenomenon, the greater the evidence needs to be before it should be taken seriously. A classic case is the recent OPERA experiment, using neutrinos sent from CERN, which claimed to show that neutrinos could travel faster than the speed of light. Surely it wasn't too surprising when the problem was traced to the experimental timing system [a faulty fibre-optic cable connection]. Nevertheless, that did not stop many theorists from writing papers on the subject. Another case is claims of "quantum biology".

About a decade ago some people tried to get me interested in some anomalous experimental results concerning deep inelastic scattering of neutrons off condensed phases of matter. They claimed to have evidence for quantum entanglement between protons on different molecules on very short time scales and [in later papers] to detect the effects of decoherence on this entanglement. An example is this PRL, which has more than 100 citations, including exotic theory papers stimulated by the "observation."

Many reasons led me not to get involved: mundane debates about detector calibration, a strange conversation with one of the protagonists, a discussion with Roger Cowley about his view that the theoretical interpretation of the experiments was flawed, and, of course, "extraordinary claims require extraordinary evidence"....

Some of the main protagonists have not given up. But the paper below is a devastating critique of their most recent exotic claims.

Spurious indications of energetic consequences of decoherence at short times for scattering from open quantum systems by J. Mayers and G. Reiter

Mayers is the designer and builder of the relevant spectrometer. Reiter is a major user. Together they have performed many nice experiments imaging proton probability distributions in hydrogen bonded systems.

They argue that the unexpected few per cent deviations from conventional theory that were presented as "evidence" simply arise from incorrect calibration of the instrument.

Monday, December 16, 2013

Should you judge a paper by the quality of its referencing?

No.

Someone can write a brilliant paper and yet poorly reference previous work.

On the other hand, one can write a mediocre or wrong paper and reference previous work in a meticulous manner.

But I have to confess that I sometimes do judge a paper by the quality of the referencing.

I find there is often a correlation between the quality of the referencing and the quality of the science. Perhaps this correlation should not be surprising, since both reflect how meticulous the authors' scholarship is.

If I am sent a paper to referee, I often find the following happens. I desperately search the abstract and the figures to find something new and interesting. If I don't, I find that I subconsciously start to scan the references. This sometimes tells me a lot.

Here are some of the warning signs I have noticed over the years.

Lack of chronological diversity.

Most fields have progressed over many decades.

Yet some papers will only reference papers from the last few years, or may ignore work from the last few years. Some physicists seem to think that work on quantum coherence in photosynthesis began in 2007. I once reviewed a paper where the majority of the references were more than 40 years old. Perhaps for some papers that might be appropriate, but it certainly wasn't for that one.

Crap markers. I learnt this phrase from the late Sean Barrett. It refers to using dubious papers to justify more dubious work.

For me an example would be "Engel et al. have shown that quantum entanglement is crucial to the efficiency of photosynthetic systems [Nature 2007]."

Lack of geographic and ethnic diversity. This sounds politically incorrect! But that is not the issue. Most interesting fields attract good work from around the world. Yet I have reviewed papers where more than half the references were from the same country as the authors. Perhaps that might be appropriate for some work, but it certainly wasn't for that one. It ignored relevant and significant work from other countries.

Gratuitous self-citation.

Missing key papers or not including references with different points of view.

Citing papers with contradictory conclusions without acknowledging the inconsistency.

I once reviewed a paper with a title like "Spin order in compound Y", yet it cited in support a paper with a title like "Absence of spin order in compound Y."!

When I started composing this post I felt guilty and superficial about confessing my approach. The quality of the referencing does not determine the quality of the paper.

So why do I keep doing this?

Shouldn't I just focus on the science?

The painful reality is that I have limited time that I need to budget efficiently.

I want the time I spend reviewing a paper to be in proportion to its quality.

Often the references give me a quick and rough estimate of the quality of the paper.

The best ones I will work hard on, trying to engage with them carefully and to find robust reasons for endorsement and constructive suggestions for improvement.

At the other end of the spectrum, I just need to find some quick and concrete reasons for rejection.

But I should stress that I am not using the quality of referencing as a stand-alone criterion for accepting or rejecting a paper. I am just using it as a quick guide to how seriously I should consider the paper. Furthermore, this is only after I have failed to find something obviously new and important in the figures.

Am I too superficial?

Does anyone else follow a similar approach?

Other comments welcome.

Friday, December 13, 2013

Desperately seeking triplet superconductors

A sizeable amount of time and energy has been spent by the "hard condensed matter" community over the past quarter century studying unconventional superconductors. A nice recent review is by Mike Norman. In the absence of spin-orbit coupling, spin is a good quantum number and the Cooper pairs must be in either a spin singlet or a spin triplet state. Furthermore, in a crystal with inversion symmetry, spin singlets (triplets) are associated with even (odd) parity.

Actually, pinning down the symmetry of the Cooper pairs from experiment turns out to be extremely tricky. In the cuprates the "smoking gun" experiments that showed they were really d-wave used cleverly constructed Josephson junctions that allowed one to detect the phase of the order parameter and show that it changed sign as one moved around the Fermi surface.

How can one show that the pairing is spin triplet?

Perhaps the simplest way is to show that the material has an upper critical magnetic field that exceeds the Clogston-Chandrasekhar limit [often called the Pauli paramagnetic limit, but I think this is a misnomer].

In most type II superconductors the upper critical field is determined by orbital effects. When the magnetic field gets large enough, the spacing between the vortices in the Abrikosov lattice becomes comparable to the size of the vortices [determined by the superconducting coherence length in the directions perpendicular to the magnetic field]. This destroys the superconductivity. In a layered material the orbital upper critical field can become very large for fields parallel to the layers, because the interlayer coherence length can be of the order of the lattice spacing. Consequently, before the orbital limit is reached, the superconductivity can instead be destroyed by the Zeeman effect breaking up the spin singlets of the Cooper pairs. This is the Clogston-Chandrasekhar paramagnetic limit.

How big is this magnetic field?

In a singlet superconductor the energy lost compared to the metallic state is ~chi_s B^2/2, where chi_s is the magnetic [Pauli spin] susceptibility in the metallic phase: the metal lowers its energy by spin-polarising in the field, whereas the singlet pairs cannot. Once the magnetic field is large enough that this is larger than the superconducting condensation energy, superconductivity becomes unstable. Normally, these two quantities are compared within BCS theory, and one finds the "Pauli limit" H_P = sqrt(2) Delta_0/(g mu_B), where Delta_0 = 1.76 k_B T_c is the BCS energy gap. For g = 2 this is about 1.8 Tesla per Kelvin of T_c, so for Tc = 10 K the upper critical field is about 18 Tesla.

Sometimes people then use exceeding this limit as evidence for spin-triplet pairing.

However, in 1999 I realised that one could estimate the upper critical field independent of BCS theory, using just the measured values of the spin susceptibility and condensation energy. In this PRB my collaborators and I showed that for the organic charge transfer salt kappa-(BEDT-TTF)2Cu(SCN)2 the observed upper critical field of 30 Tesla agreed with the theory-independent estimate. In contrast, the BCS estimate was 18 Tesla. Thus, the experiment was consistent with singlet superconductivity.
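The two estimates can be put side by side in a few lines. This is a minimal sketch, assuming SI units (chi_s is the dimensionless volume spin susceptibility and the condensation energy is in J/m^3, so the Pauli energy is chi_s B^2/(2 mu_0)). The function names are hypothetical. As a consistency check it feeds BCS values into the thermodynamic formula for a hypothetical density of states n0, which cancels out, so the two estimates must agree; with measured chi_s and condensation energy they need not.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant (J/K)
MU_B = 9.2740100783e-24   # Bohr magneton (J/T)
MU_0 = 4e-7 * math.pi     # vacuum permeability (T m/A), classic SI value


def bcs_pauli_limit(tc, g=2.0):
    """Clogston-Chandrasekhar field (Tesla) using the weak-coupling BCS gap."""
    delta0 = 1.764 * K_B * tc  # BCS zero-temperature gap (J)
    return math.sqrt(2.0) * delta0 / (g * MU_B)


def thermodynamic_pauli_limit(condensation_energy, chi_s):
    """Field (Tesla) at which the Pauli energy chi_s B^2 / (2 mu_0) of the metal
    equals the condensation energy (J/m^3); no BCS assumption is made here."""
    return math.sqrt(2.0 * MU_0 * condensation_energy / chi_s)


if __name__ == "__main__":
    tc = 10.0
    print(f"BCS estimate for Tc = {tc} K: {bcs_pauli_limit(tc):.1f} T")
    # Consistency check using BCS inputs and a hypothetical single-spin DOS n0
    n0 = 1e47                           # states per J per m^3 per spin (hypothetical)
    delta0 = 1.764 * K_B * tc
    e_cond = 0.5 * n0 * delta0**2       # BCS condensation energy (J/m^3)
    chi_s = 2.0 * MU_0 * MU_B**2 * n0   # Pauli susceptibility for g = 2
    print(f"Thermodynamic estimate with BCS inputs: "
          f"{thermodynamic_pauli_limit(e_cond, chi_s):.1f} T")
```

Both lines print about 18.6 T for Tc = 10 K. The design point is that thermodynamic_pauli_limit only needs two measurable quantities, which is what makes the comparison with the observed upper critical field independent of BCS theory.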

But exceeding the Clogston-Chandrasekhar paramagnetic limit is the first hint that one might have a triplet superconductor. Indeed this was the case for the heavy fermion superconductor UPt3, but not for Sr2RuO4. Recent phase-sensitive Josephson junction measurements have shown that both these materials have odd-parity superconductivity, consistent with triplet pairing. A recent review considered the status of the evidence for triplet odd-parity pairing and the possibility of a topological superconductor in Sr2RuO4.

A PRL from Nigel Hussey's group last year reported measurements of the upper critical field for the quasi-one-dimensional material Li0.9Mo6O17. They found that the relevant upper critical field was 8 Tesla, compared to values of 5 Tesla and 4 Tesla for the thermodynamic and BCS estimates, respectively.

Thus, this material could be a triplet superconductor.

[Aside: this material has earlier attracted considerable attention because it has a very strange metallic phase, as reviewed here.]

The challenge now is to come up with more definitive experimental signatures of the unconventional superconductivity. Given the history of UPt3 and Sr2RuO4, this could be a long road... but a rich journey....

Thursday, December 12, 2013

Chemical bonding, blogs, and basic questions

Roald Hoffmann and Sason Shaik are two of my favourite theoretical chemists. They have featured in a number of my blog posts. I particularly appreciate their concern with using computations to elucidate chemical concepts.

In Angewandte Chemie there is a fascinating article, One Molecule, Two Atoms, Three Views, Four Bonds that is written as a three-way dialogue including Henry Rzepa.

The simple (but profound) scientific question they address concerns how to describe the chemical bonding in the molecule C2 [i.e. a diatomic molecule of carbon]. In particular, does it involve a quadruple bond?

The answer seems to be yes, based on a full CI [configuration interaction] calculation that is then projected down to a Valence Bond wave function.

The dialogue is very engaging and the banter back and forth includes interesting digressions such as the role of Rzepa's chemistry blog, learning from undergraduates, the relative merits of molecular orbital theory and valence bond theory, the role of high level quantum chemical calculations, and why Hoffmann is not impressed by the Quantum Theory of Atoms in Molecules.

Tuesday, December 10, 2013

Science is broken II

This week three excellent articles have been brought to my attention that highlight current problems with science and academia. The first two are in the Guardian newspaper.

How journals like Nature, Cell and Science are damaging science

The incentives offered by top journals distort science, just as big bonuses distort banking

Randy Schekman, a winner of the 2013 Nobel prize for medicine.

Peter Higgs: I wouldn't be productive enough for today's academic system

Physicist doubts work like Higgs boson identification achievable now as academics are expected to 'keep churning out papers'

How academia resembles a drug gang is a blog post by Alexandre Afonso, a lecturer in Political Economy at King's College London. He takes off from the fascinating chapter in Freakonomics, "Why do drug dealers still live with their moms?" It is because they all hope they are going to make the big time and eventually become head of the drug gang. Academia has a similar hierarchical structure, with a select few tenured and well-funded faculty who lead large "gangs" of Ph.D students, postdocs, and "adjunct" faculty. They soldier on in the slim hope that one day they will make the big time... just like the gang leader.

The main idea here is highlighting some of the personal injustices of the current system. That is worth a separate post. What does this have to do with good/bad science? This situation is a result of the problems highlighted in the two Guardian articles. In particular, many of these "gangs" are poorly supervised and produce low quality science. This is due to the emphasis on quantity and marketability, rather than quality and reproducibility.

Monday, December 9, 2013

Effect of frustration on the thermodynamics of a Mott insulator

When I recently gave a talk on bad metals in Sydney at the Gordon Godfrey Conference, Andrey Chubukov and Janez Bonca asked some nice questions that stimulated this post.

The main question that the talk is trying to address is: what is the origin of the low temperature coherence scale T_coh associated with the crossover from a bad metal to a Fermi liquid?

In particular, T_coh is much less than the Fermi temperature of the non-interacting band structure of the relevant Hubbard model [on an anisotropic triangular lattice at half filling].

Here is the key figure from the talk [and the PRL written with Jure Kokalj].

It shows the temperature dependence of the specific heat for different values of U/t on the isotropic triangular lattice (t'=t). Below T_coh the specific heat becomes approximately linear in temperature. For U=6t, which is near the Mott insulator transition, T_coh ~ t/20. Thus, a low energy scale emerges.

Note that well into the Mott phase [U=12t] there is a small peak in the specific heat versus temperature. This is also seen in the corresponding Heisenberg model and corresponds to spin-waves associated with short-range antiferromagnetic order.

So here are the further questions.

What is the effect of frustration?

How does T_coh compare to the antiferromagnetic exchange J=4t^2/U?

The answers are in the Supplementary Material of the PRL. [I should have had them as back-up slides for the talk.] The first figure shows the specific heat for U=10t and different values of the frustration.

t'=0 [red curve] corresponds to the square lattice [no frustration] and t'=t [green dot-dashed curve] corresponds to the isotropic triangular lattice.

The antiferromagnetic exchange constant J=4t^2/U is marked on the horizontal axis. For the square lattice there is a very well-defined peak at a temperature of order J. However, as the frustration increases this peak becomes much smaller and shifts to a much lower temperature.

This reflects the absence of well-defined spin excitations in the frustrated system.
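Where does the scale J=4t^2/U come from? It can be checked on the smallest possible example. The sketch below is my own illustration, not a calculation from the PRL: it diagonalises the two-site Hubbard model with two electrons in the S_z=0 sector using numpy. The function name `hubbard_dimer_half_filling` is hypothetical, and the hopping signs follow one common fermion-ordering convention. The singlet ground state lies below the triplet by (sqrt(U^2+16t^2) - U)/2, which tends to 4t^2/U when U >> t.

```python
import numpy as np

def hubbard_dimer_half_filling(t, U):
    """Eigenvalues (ascending) of the two-site Hubbard model with two electrons, S_z = 0 sector.

    Basis: |up, down>, |down, up>, |updown, 0>, |0, updown>.
    Hopping -t connects singly occupied states to doubly occupied ones;
    U is the on-site repulsion. Signs follow one common fermion-ordering convention.
    """
    H = np.array([
        [0.0, 0.0,  -t,  -t],
        [0.0, 0.0,   t,   t],
        [ -t,   t,   U, 0.0],
        [ -t,   t, 0.0,   U],
    ])
    return np.linalg.eigvalsh(H)  # eigenvalues of a symmetric matrix, sorted ascending

t = 1.0
for U in (6.0, 10.0, 12.0):
    energies = hubbard_dimer_half_filling(t, U)
    singlet, triplet = energies[0], energies[1]  # the S_z = 0 triplet sits at E = 0
    gap = triplet - singlet
    print(f"U = {U:4.1f} t   singlet-triplet gap = {gap:.3f} t   4t^2/U = {4 * t**2 / U:.3f} t")
```

For the values of U used in the figures the dimer gap comes out a little below 4t^2/U, and the two converge as U/t grows. The dimer says nothing about frustration itself, which needs a lattice.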

The significant effect of frustration is also seen in the entropy versus temperature, shown below [the colour labels are the same]. At low temperatures frustration greatly increases the entropy, reflecting the presence of weakly interacting local magnetic moments.
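Thermodynamically, the specific heat and entropy curves carry related information: S(T) - S(0) is the integral of C(T')/T' from 0 to T, so the area under C/T counts the entropy released as the temperature rises. As a hedged toy illustration (chosen by me, not the Hubbard-model calculation of the PRL), take free local moments, each a two-level system split by a small energy gap: integrating its Schottky specific heat should recover k_B ln 2 of entropy per moment once T >> gap. The function names are hypothetical and k_B = 1.

```python
import numpy as np

def entropy_from_specific_heat(T, C):
    """S(T) - S(T[0]) as the integral of C(T')/T' dT', by the cumulative trapezoid rule (k_B = 1)."""
    integrand = C / T
    increments = 0.5 * (integrand[1:] + integrand[:-1]) * np.diff(T)
    return np.concatenate(([0.0], np.cumsum(increments)))

def schottky_specific_heat(T, gap):
    """Specific heat of one two-level system with level splitting `gap` (k_B = 1)."""
    x = gap / T
    # Written with exp(-x) so that small T does not overflow
    return x**2 * np.exp(-x) / (1.0 + np.exp(-x))**2

def schottky_entropy_exact(T, gap):
    """Exact entropy of the same two-level system, ln Z + <E>/T."""
    x = gap / T
    return np.log1p(np.exp(-x)) + x * np.exp(-x) / (1.0 + np.exp(-x))

gap = 1.0
T = np.linspace(0.01, 50.0, 20001)  # start just above T = 0, where S is negligible
S = entropy_from_specific_heat(T, schottky_specific_heat(T, gap))
print(S[-1], schottky_entropy_exact(T[-1], gap), np.log(2.0))
```

Weakly coupled moments behave like a small gap here: they hold on to most of their ln 2 down to low temperatures, which is the kind of excess low-temperature entropy the frustrated curves show.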

Friday, December 6, 2013

I don't want this blog to become too popular!

This past year I have been surprised and encouraged that this blog has a wide readership. However, I have also learnt that I don't want it to become too popular.

A few months ago, when I was visiting Columbia University, I met with Peter Woit. He writes a very popular blog, Not Even Wrong, which has become well known partly because of his strong criticism of string theory. It is a really nice scientific blog, mostly focusing on elementary particle physics and mathematics, and the comments generate some substantial scientific discussion. However, it turns out that popularity is a real curse: a crowd attracts a bigger crowd. The comments section attracts two undesirable audiences. The first is non-scientists who have their own "theory of everything" that they wish to promote. The second "audience" is robots that leave "comments" containing links to dubious commercial websites. Peter has to spend a substantial amount of time each day monitoring these comments, deleting them, and finding automated ways to block them. I am very thankful I don't have these problems. Occasionally I get random comments with commercial links, which I delete manually. I did not realise that they may be generated by robots.

Due to the robot problem, Peter said he thought the pageview statistics provided by blog hosts [e.g. blogspot or wordpress] were a gross over-estimate. I could see that this would be the case for his blog. However, I suspect this is not the case for mine. Blogspot claims a typical post of mine gets 50-200 page views. This seems reasonable to me. Furthermore, the numbers for individual posts tend to scale with the number of comments and the anticipated breadth of the audience [e.g. journal policies and mental health attract more interest than hydrogen bonding!] Hence, I will still take the stats as a somewhat reliable guide as to interest and influence.

Thursday, December 5, 2013

Quantum nuclear fluctuations in water

Understanding the unique properties of water remains one of the outstanding challenges in science today. Most discussions and computer simulations of pure water [and its interactions with biomolecules] treat the nuclei as classical. Furthermore, the hydrogen bonds are classified as weak. Increasingly, these simple pictures are being questioned. Water is quantum!

There is a nice PNAS paper Nuclear quantum effects and hydrogen bond fluctuations in water

Michele Ceriotti, Jerome Cuny, Michele Parrinello, and David Manolopoulos

The authors perform path integral molecular dynamics simulations where the nuclei are treated quantum mechanically, moving on potential energy surfaces that are calculated "on the fly" from density functional theory based methods using the Generalised Gradient Approximation. A key technical advance is using an approximation for the path integrals (PI) based on a mapping to a Generalised Langevin Equation [GLE] [PI+GLE=PIGLET!].

In the figure below the horizontal axis co-ordinate nu is the difference between the O-H distances to the donor and to the acceptor oxygen atoms. Thus, nu=0 corresponds to the proton being equidistant between the donor and acceptor.

In liquid water the proton usually sits much closer to its donor oxygen. In contrast, for the Zundel cation, H5O2+, the proton is most likely to be equidistant, due to the strong H-bond involved [the donor-acceptor distance is about 2.4 A]. In that case quantum fluctuations play a significant role.

The most important feature of the probability densities shown in the figure is the difference between the solid red and blue curves in the upper panel. This is the difference between quantum and classical. In particular, the probability of a proton being located equidistant between the donor and acceptor becomes orders of magnitude larger due to quantum fluctuations. It is still small (one in a thousand) but relevant to the rare events that dominate many dynamical processes (e.g. auto-ionisation).
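A crude way to see why quantum fluctuations can raise the probability of a large proton displacement by orders of magnitude is a harmonic toy model. This is my own illustration, not the path-integral calculation in the paper: it treats the proton coordinate as a harmonic oscillator with an assumed O-H stretch wavenumber of about 3400 cm^-1 at 300 K. For a thermal harmonic oscillator the quantum position variance exceeds the classical value k_B T/(m omega^2) by the factor y coth(y), with y = hbar omega/(2 k_B T). Because both distributions are Gaussian, the density far from equilibrium is enhanced exponentially. Real O-H potentials are strongly anharmonic, so the numbers are only indicative, and the function names are hypothetical.

```python
import math

K_B_EV_PER_K = 8.617333262e-5       # Boltzmann constant in eV/K
EV_PER_WAVENUMBER = 1.239841984e-4  # energy of 1 cm^-1 in eV

def quantum_to_classical_variance_ratio(wavenumber_cm, temperature_k):
    """<x^2>_quantum / <x^2>_classical for a harmonic oscillator in thermal equilibrium."""
    y = wavenumber_cm * EV_PER_WAVENUMBER / (2.0 * K_B_EV_PER_K * temperature_k)
    return y / math.tanh(y)  # y * coth(y): tends to 1 at high T, to y at low T

def density_ratio_at(x_over_sigma_cl, variance_ratio):
    """P_quantum(x) / P_classical(x) for two zero-mean Gaussians, x in units of the classical width."""
    r = variance_ratio
    return math.exp(0.5 * x_over_sigma_cl**2 * (1.0 - 1.0 / r)) / math.sqrt(r)

# Assumed, representative O-H stretch wavenumber and room temperature
r = quantum_to_classical_variance_ratio(3400.0, 300.0)
print(f"variance ratio (quantum/classical) at 300 K: {r:.1f}")
print(f"density ratio three classical widths from equilibrium: {density_ratio_at(3.0, r):.0f}")
```

Because the O-H stretch quantum is much larger than k_B T at room temperature, the classical treatment badly underestimates how far the proton wanders, and the underestimate grows rapidly in the tails of the distribution.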

On average the H-bonds in water are weak, as defined by a donor-acceptor distance [d(O-O), the vertical axis in the lower 3 panels] of about 2.8 Angstroms. However, there are rare [but non-negligible] fluctuations where this distance becomes shorter [~2.5-2.7 A], characteristic of much stronger bonds. This further facilitates the quantum effects in the proton transfer (nu) co-ordinate.
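The two coordinates used in the figure, nu and d(O-O), can be computed from the positions of a donor oxygen, the proton, and an acceptor oxygen. A minimal sketch with numpy, assuming positions in Angstroms and nu = d(O_D-H) - d(O_A-H); the sign convention is my assumption, and the paper may use the opposite one. The function name is hypothetical.

```python
import numpy as np

def proton_transfer_coordinates(o_donor, h, o_acceptor):
    """Return (nu, d_OO) for one hydrogen bond, in the units of the input positions.

    nu = d(O_donor-H) - d(O_acceptor-H); nu = 0 means the proton is equidistant.
    d_OO is the donor-acceptor oxygen distance.
    """
    o_donor, h, o_acceptor = (np.asarray(p, dtype=float) for p in (o_donor, h, o_acceptor))
    nu = np.linalg.norm(h - o_donor) - np.linalg.norm(h - o_acceptor)
    d_oo = np.linalg.norm(o_acceptor - o_donor)
    return nu, d_oo

# Idealised, collinear geometries for illustration only
print(proton_transfer_coordinates([0, 0, 0], [1.0, 0, 0], [2.8, 0, 0]))  # typical weak H-bond
print(proton_transfer_coordinates([0, 0, 0], [1.2, 0, 0], [2.4, 0, 0]))  # Zundel-like, shared proton
```

In an analysis of a simulation one would evaluate these two numbers for every H-bond in every frame and histogram them to obtain probability densities like those in the figure.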

Tuesday, December 3, 2013

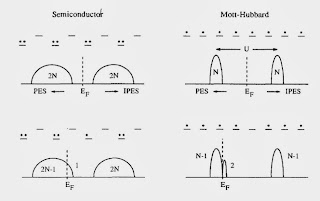

Review of strongly correlated superconductivity

On the arXiv, Andre-Marie Tremblay has posted a nice tutorial review, Strongly correlated superconductivity. It is based on some summer school lectures and will be particularly valuable to students. I think it is especially clear and nicely highlights some key concepts.

For example, the figure below highlights a fundamental difference between a Mott-Hubbard insulator and a band insulator [or semiconductor].

There are also two clear messages that should not be missed, although a minority of people might disagree with them.

1. For both the cuprates and large classes of organic charge transfer salts the relevant effective Hamiltonians are "simple" one-band Hubbard models. They can capture the essential details of the phase diagrams, particularly the competition between superconductivity, the Mott insulator, and antiferromagnetism.

2. Cluster Dynamical Mean-Field Theory (CDMFT) captures the essential physics of these Hubbard models.

I agree completely.

Tremblay does mention some numerical studies that cast doubt on whether there is superconductivity in the Hubbard model on the anisotropic triangular lattice at half filling. My response to that criticism is here.

Monday, December 2, 2013

I have no idea what you are talking about

Sometimes when I am at a conference or in a seminar I find that I have absolutely no idea what the speaker is talking about. It is not just that I don't understand the finer technical details. I struggle to see the context, motivation, and background. The words are just jargon, the pictures just wiggles, and the equations random symbols. What is being measured or what is being calculated? Why? Is there a simple physical picture here? How is this related to other work?

A senior experimental colleague I spoke to encouraged me to post this. He thought that his similar befuddlement was because he wasn't a theorist.

There are three audiences for this message.

1. Me. I need to work harder at making my talks accessible and clear.

2. Other speakers. You need to work harder at making your talks accessible and clear.

3. Students. If you are also struggling, don't assume that you are stupid and don't belong in science. It is probably because the speaker is doing a poor job. Don't be discouraged. Don't give up on going to talks. Have the courage to ask "stupid" questions.
